Interior-point method for LP: Difference between revisions

No edit summary |

No edit summary |

||

| (44 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

Authors: Tomas Lopez Lauterio, Rohit Thakur and Sunil Shenoy (SysEn | Authors: Tomas Lopez Lauterio, Rohit Thakur and Sunil Shenoy (SysEn 5800 Fall 2020) <br> | ||

== Introduction == | == Introduction == | ||

Linear programming problems | Linear programming problems seek to optimize linear functions given linear constraints. There are several applications of linear programming including inventory control, production scheduling, transportation optimization and efficient manufacturing processes. Simplex method has been a very popular method to solve these linear programming problems and has served these industries well for a long time. But over the past 40 years, there have been significant number of advances in different algorithms that can be used for solving these types of problems in more efficient ways, especially where the problems become very large scale in terms of variables and constraints.<ref> "Practical Optimization - Algorithms and Engineering Applications" by Andreas Antoniou and Wu-Sheng Lu, ISBN-10: 0-387-71106-6 </ref> <ref> "Linear Programming - Foundations and Extensions - 3<sup>rd</sup> edition''" by Robert J Vanderbei, ISBN-113: 978-0-387-74387-5. </ref> In early 1980s Karmarkar (1984) <ref> N Karmarkar, "A new Polynomial - Time algorithm for linear programming", Combinatorica, VOl. 4, No. 8, 1984, pp. 373-395.</ref> published a paper introducing interior point methods to solve linear-programming problems. A simple way to look at differences between simplex method and interior point method is that a simplex method moves along the edges of a polytope towards a vertex having a lower value of the cost function, whereas an interior point method begins its iterations inside the polytope and moves towards the lowest cost vertex without regard for edges. This approach reduces the number of iterations needed to reach that vertex, thereby reducing computational time needed to solve the problem.<br><br> | ||

=== Lagrange Function === | === Lagrange Function === | ||

Before getting too deep into description of Interior point method, there are a few concepts that are helpful to understand. First key concept to understand is related to Lagrange function. Lagrange function incorporates the constraints into a modified objective function in such a way that a constrained minimizer (x | Before getting too deep into description of Interior point method, there are a few concepts that are helpful to understand. First key concept to understand is related to Lagrange function. Lagrange function incorporates the constraints into a modified objective function in such a way that a constrained minimizer <math> (x^{*}) </math> is connected to an unconstrained minimizer <math> \left \{x^{*},\lambda ^{*} \right \} </math> for the augmented objective function <math> L\left ( x , \lambda \right ) </math>, where the augmentation is achieved with <math> 'p' </math> Lagrange multipliers. <ref> Computational Experience with Primal-Dual Interior Point Method for Linear Programming''" by Irvin Lustig, Roy Marsten, David Shanno </ref><ref> "Practical Optimization - Algorithms and Engineering Applications" by Andreas Antoniou and Wu-Sheng Lu, ISBN-10: 0-387-71106-6 </ref> <br> | ||

To illustrate this point, | To illustrate this point, consider a simple an optimization problem:<br> | ||

minimize f(x) | minimize <math> f\left ( x \right ) </math><br> | ||

subject to: | subject to: <math> A \cdot x = b </math><br> | ||

where, <math> A \, \in \, R^{p\, \times \, n} </math> is assumed to have a full row rank | |||

Lagrange function can be laid out as:<br> | Lagrange function can be laid out as:<br> | ||

<math>L(x, \lambda ) = f(x) + \sum_{i=1}^{p}\lambda _{i}\cdot a_{i}(x)</math> <br> | |||

<math>L(x, \lambda ) = f(x) | where, <math> '\lambda ' </math> introduced in this equation is called Lagrange Multiplier. <br><br> | ||

where, ' | |||

=== Newton's Method === | === Newton's Method === | ||

Another key concept to understand is regarding solving linear and non-linear equations using Newton's methods. | Another key concept to understand is regarding solving linear and non-linear equations using Newton's methods. | ||

Assume | Assume an unconstrained minimization problem in the form: <br> | ||

minimize | minimize <math> g\left ( x \right ) </math> , where <math> g\left ( x \right ) </math> is a real valued function with <math> 'n' </math> variables. <br> | ||

A local minimum for this problem will satisfy the following system of equations:<br> | A local minimum for this problem will satisfy the following system of equations:<br> | ||

<math>\left [ \frac{\partial g(x)}{\partial x_{1}} ..... \frac{\partial g(x)}{\partial x_{n}}\right ]^{T} = \left [ 0 ... 0 \right ]</math> <br> | <math>\left [ \frac{\partial g(x)}{\partial x_{1}} ..... \frac{\partial g(x)}{\partial x_{n}}\right ]^{T} = \left [ 0 ... 0 \right ]</math> <br> | ||

| Line 29: | Line 27: | ||

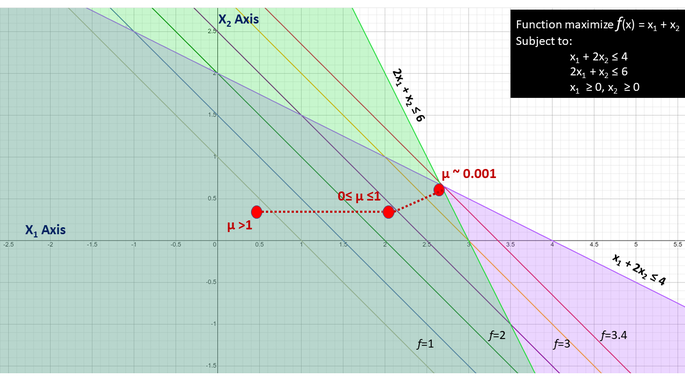

[[File:Visualization.png|685x685px|Visualization of Central Path method in Interior point|thumb]] | [[File:Visualization.png|685x685px|Visualization of Central Path method in Interior point|thumb]] | ||

Given a linear programming problem with constraint equations that have inequality terms, the inequality term is typically replaced with an equality term using slack variables. The new reformulation can be discontinuous in nature and to replace the discontinuous function with a smoother function, a logarithmic form of this reformulation is utilized. This nonlinear objective function is called "''Logarithmic Barrier Function''" | |||

The process involves starting with formation of a primal-dual pair of linear programs and then using "''Lagrangian function''" form on the "''Barrier function''" to convert the constrained problems into unconstrained problems. These unconstrained problems are then solved using Newton's method as shown above.<br> | |||

=== Problem Formulation === | === Problem Formulation === | ||

| Line 36: | Line 35: | ||

Consider a combination of primal-dual problem below:<br> | Consider a combination of primal-dual problem below:<br> | ||

('''Primal Problem formulation''') <br> | ('''Primal Problem formulation''') <br> | ||

→ minimize <math> c^{T}x </math> Subject to: <math> Ax = b </math> and <math> x \geq 0 </math> | → minimize <math> c^{T}x </math> <br> | ||

Subject to: <math> Ax = b </math> and <math> x \geq 0 </math> <br> | |||

('''Dual Problem formulation''') <br> | ('''Dual Problem formulation''') <br> | ||

→ maximize <math> b^{T}y </math> Subject to: <math> A^{T}y + \lambda = c </math> and < | → maximize <math> b^{T}y </math> <br> | ||

' | Subject to: <math> A^{T}y + \lambda = c </math> and <math> \lambda \geq 0 </math> <br> | ||

<math> '\lambda ' </math> vector introduced represents the slack variables.<br> | |||

The Lagrangian functional form is used to configure two equations using "''Logarithmic Barrier Function''" for both primal and dual forms mentioned above:<br> | |||

Lagrangian equation for Primal using Logarithm Barrier Function : <math> L_{p}(x,y) = c^{T}\cdot x - \mu \cdot \sum_{j=1}^{n}log(x_{j}) - y^{T}\cdot (Ax - b) </math> <br> | |||

Lagrangian equation for Dual using Logarithm Barrier Function : <math> L_{d}(x,y,\lambda ) = b^{T}\cdot y + \mu \cdot \sum_{j=1}^{n}log(\lambda _{j}) - x^{T}\cdot (A^{T}y +\lambda - c) </math> <br> | |||

Taking the partial derivatives of L<sub>p</sub> and L<sub>d</sub> with respect to variables 'x' | Taking the partial derivatives of L<sub>p</sub> and L<sub>d</sub> with respect to variables <math> 'x'\; '\lambda'\; 'y' </math>, and forcing these terms to zero, we get the following equations: <br> | ||

<math> Ax = b </math> and <math> x \geq 0 </math> | <math> Ax = b </math> and <math> x \geq 0 </math> <br> | ||

<math> A^{T}y + \lambda = c </math> and <math> \lambda \geq 0 </math> | <math> A^{T}y + \lambda = c </math> and <math> \lambda \geq 0 </math> <br> | ||

<math> x_{j}\cdot \lambda _{j} = \mu </math> for ''j''= 1,2,.......''n'' | <math> x_{j}\cdot \lambda _{j} = \mu </math> for ''j''= 1,2,.......''n'' <br> | ||

where, | where, <math> '\mu ' </math> is strictly positive scaler parameter. For each <math> \mu > 0 </math> , the vectors in the set <math> \left \{ x\left ( \mu \right ), y\left ( \mu \right ) , \lambda \left ( \mu \right )\right \} </math> satisfying above equations, can we viewed as set of points in <math> R^{n} </math> , <math> R^{p} </math>, <math> R^{n} </math> respectively, such that when <math> '\mu ' </math> varies, the corresponding points form a set of trajectories called ''"Central Path"''. The central path lies in the ''"Interior"'' of the feasible regions. There is a sample illustration of ''"Central Path"'' method in figure to right. Starting with a positive value of <math> '\mu ' </math> and as <math> '\mu ' </math> approaches 0, the optimal point is reached. <br> | ||

Let | Let Diagonal[...] denote a diagonal matrix with the listed elements on its diagonal. | ||

'''X''' = Diagonal | Define the following:<br> | ||

'''X''' = Diagonal [<math> x_{1}^{0}, .... x_{n}^{0} </math>]<br> | |||

<math> \lambda </math> = Diagonal (<math> \lambda _{1}^{0}, .... \lambda _{n}^{0} </math> )<br> | <math> \lambda </math> = Diagonal (<math> \lambda _{1}^{0}, .... \lambda _{n}^{0} </math> )<br> | ||

'''e<sup>T</sup>''' = (1 .....1) as vector of all 1's.<br> | '''e<sup>T</sup>''' = (1 .....1) as vector of all 1's.<br> | ||

Using these newly defined terms, the equation | Using these newly defined terms, the equation above can be written as: <br> | ||

<math> X\cdot \lambda \cdot e = \mu \cdot e </math> <br> | <math> X\cdot \lambda \cdot e = \mu \cdot e </math> <br> | ||

=== Iterations using Newton's Method === | === Iterations using Newton's Method === | ||

Employing the Newton's iterative method to solve the following equations: <br> | |||

<math> Ax - b = 0 </math> | <math> Ax - b = 0 </math> <br> | ||

<math> A^{T}y + \lambda = c </math> | <math> A^{T}y + \lambda = c </math> <br> | ||

<math> X\cdot \lambda \cdot e - \mu \cdot e = 0</math> | <math> X\cdot \lambda \cdot e - \mu \cdot e = 0</math> <br> | ||

With definition of starting point that lies within feasible region as <math> \left ( x^{0},y^{0},\lambda ^{0} \right ) </math> such that <math> x^{0}> 0 \, and \lambda ^{0}> 0 </math>. | |||

<math> \delta _{p} = b - A\cdot x^{0} </math> | Also defining 2 residual vectors for both the primal and dual equations: <br> | ||

<math> \delta _{d} = c - A^{0}\cdot y^{0} - \lambda ^{0} </math> | <math> \delta _{p} = b - A\cdot x^{0} </math> <br> | ||

<math> \delta _{d} = c - A^{0}\cdot y^{0} - \lambda ^{0} </math> <br> | |||

Applying Newton's Method to solve equations | Applying Newton's Method to solve above equations: <br> | ||

<math> \begin{bmatrix} | <math> \begin{bmatrix} | ||

A & 0 & 0\\ | A & 0 & 0\\ | ||

| Line 82: | Line 86: | ||

\end{bmatrix} | \end{bmatrix} | ||

</math><br> | </math><br> | ||

So a single iteration of Newton's method involves the following equations. For each iteration, we solve for the next value of | So a single iteration of Newton's method involves the following equations. For each iteration, we solve for the next value of <math> x^{k+1},y^{k+1},\lambda ^{k+1} </math>: <br> | ||

<math> (A\lambda ^{-1}XA^{T})\delta _{y} = b- \mu A\lambda^{-1} + A\lambda ^{-1}X\delta _{d} </math> | <math> (A\lambda ^{-1}XA^{T})\delta _{y} = b- \mu A\lambda^{-1} + A\lambda ^{-1}X\delta _{d} </math> <br> | ||

<math> \delta _{\lambda} = \delta _{d}\cdot A^{T}\delta _{y} </math> | <math> \delta _{\lambda} = \delta _{d}\cdot A^{T}\delta _{y} </math> <br> | ||

<math> \delta _{x} = \lambda ^{-1}\left [ \mu \cdot e - X\lambda e -\lambda \delta _{z}\right ] </math> | <math> \delta _{x} = \lambda ^{-1}\left [ \mu \cdot e - X\lambda e -\lambda \delta _{z}\right ] </math> <br> | ||

<math> \alpha _{p} = min\left \{ \frac{-x_{j}}{\delta _{x_{j}}} \right \} </math> for <math> \delta x_{j} < 0 </math> | <math> \alpha _{p} = min\left \{ \frac{-x_{j}}{\delta _{x_{j}}} \right \} </math> for <math> \delta x_{j} < 0 </math> <br> | ||

<math> \alpha _{d} = min\left \{ \frac{-\lambda_{j}}{\delta _{\lambda_{j}}} \right \} </math> for <math> \delta \lambda_{j} < 0 </math> | <math> \alpha _{d} = min\left \{ \frac{-\lambda_{j}}{\delta _{\lambda_{j}}} \right \} </math> for <math> \delta \lambda_{j} < 0 </math> <br><br> | ||

The value of the the following variables for next iteration (+1) is determined by: <br> | The value of the the following variables for next iteration (+1) is determined by: <br> | ||

| Line 94: | Line 98: | ||

<math> \lambda^{k+1} = \lambda^{k} + \alpha _{d}\cdot \delta _{\lambda} </math> <br> | <math> \lambda^{k+1} = \lambda^{k} + \alpha _{d}\cdot \delta _{\lambda} </math> <br> | ||

The quantities | The quantities <math> \alpha _{p} </math> and <math> \alpha _{d} </math> are positive with <math> 0\leq \alpha _{p},\alpha _{d}\leq 1 </math>. <br> | ||

After each iteration of Newton's method, we assess the duality gap that is given by the expression below and compare it against a small value <big>ε</big> <br> | After each iteration of Newton's method, we assess the duality gap that is given by the expression below and compare it against a small value <big>ε</big> <br> | ||

<math> \frac{c^{T}x^{k}-b^{T}y^{k}}{1+\left | b^{T}y^{k} \right |} \leq \varepsilon </math> <br> | <math> \frac{c^{T}x^{k}-b^{T}y^{k}}{1+\left | b^{T}y^{k} \right |} \leq \varepsilon </math> <br> | ||

The value of <big>ε</big> can be chosen to be something small 10<sup>-6</sup>, which essentially is the permissible duality gap for the problem. <br> | The value of <big>ε</big> can be chosen to be something small 10<sup>-6</sup>, which essentially is the permissible duality gap for the problem. <br> | ||

== Numerical Example == | == Numerical Example == | ||

Maximize<br> | |||

<math> 3X_{1} + 3X_{2} </math><br> | |||

such that<br> | |||

<math> X_{1} + X_{2} \leq 4, </math><br> | |||

<math> X_{1} \geq 0, </math><br> | |||

<math> X_{2} \geq 0, </math><br> | |||

Barrier form of the above primal problem is as written below: | Barrier form of the above primal problem is as written below: | ||

P(X, | <math> P(X,\mu) = 3X_{1} + 3X_{2} + \mu.log(4-X_{1} - X_{2}) + \mu.log(X_{1}) + \mu.log(X_{2})</math><br> | ||

The Barrier function is always concave, since the problem is a maximization problem, there will be one and only one solution. In order to find the maximum point on the concave function we take a derivate and set it to zero. | The Barrier function is always concave, since the problem is a maximization problem, there will be one and only one solution. In order to find the maximum point on the concave function we take a derivate and set it to zero. | ||

Taking partial derivative and setting to | Taking partial derivative and setting to zero, we get the below equations | ||

<math> \frac{\partial P(X,\mu)}{\partial X_{ | <math> \frac{\partial P(X,\mu)}{\partial X_{1}} = 3 - \frac{\mu}{(4-X_{1}-X_{2})} + \frac{\mu}{X_{1}} = 0</math> <br> | ||

<math> \frac{\partial P(X,\mu)}{\partial X_{2}} = 3 - \frac{\mu}{(4-X_{1}-X_{2})} + \frac{\mu}{X_{2}} = 0</math> <br> | |||

Using above equations the following can be derived: <math> X_{1} = X_{2}</math> <br> | |||

Hence the following can be concluded | |||

<math> 3 - \frac{\mu}{(4-2X_{1})} + \frac{\mu}{X_{1}} = 0 </math><br> | <math> 3 - \frac{\mu}{(4-2X_{1})} + \frac{\mu}{X_{1}} = 0 </math><br> | ||

| Line 144: | Line 145: | ||

Taking only take the positive value of | Taking only take the positive value of <math> X_{1} </math> and <math> X_{2} </math> from the above equation as <math> X_{1} \geq 0 </math> and <math> X_{2} \geq 0</math> we can solve <math>X_{1}</math> and <math>X_{2}</math> for different values of <math>\mu</math>. The outcome of such iterations is listed in the table below. | ||

{| class="wikitable" | {| class="wikitable" | ||

|+ Objective | |+ Objective & Barrier Function w.r.t <math>X_{1}</math>, <math>X_{2}</math> and <math>\mu</math> | ||

|- | |- | ||

! | ! <math>\mu</math> !! <math>X_{1}</math> !! <math>X_{2}</math> !! <math>P(X, \mu)</math> !! <math>f(x)</math> | ||

|- | |- | ||

| 0 || 2 || 2 || 12 || 12 | | 0 || 2 || 2 || 12 || 12 | ||

|- | |- | ||

| 0.01 || 1.998 || 1.998 || 11.947 || 11.990 | | 0.01 || 1.998 || 1.998 || 11.947 || 11.990 | ||

|- | |- | ||

| 0.1 || 1.984 || 1.984 || 11.697 || 11.902 | | 0.1 || 1.984 || 1.984 || 11.697 || 11.902 | ||

|- | |- | ||

| 1 || 1.859 || 1.859 || 11.128 || 11.152 | | 1 || 1.859 || 1.859 || 11.128 || 11.152 | ||

|- | |- | ||

| 10|| 1.486 || 1.486 || 17.114 || 8.916 | | 10 || 1.486 || 1.486 || 17.114 || 8.916 | ||

|- | |- | ||

| 100|| 1.351 || 1.351 || 94.357 || 8.105 | | 100 || 1.351 || 1.351 || 94.357 || 8.105 | ||

|- | |- | ||

| 1000|| 1.335 || 1.335|| 871.052 || 8.011 | | 1000 || 1.335 || 1.335 || 871.052 || 8.011 | ||

|} | |} | ||

From the above table it can be seen that: | From the above table it can be seen that: | ||

# as | # as <math>\mu</math> gets close to zero, the Barrier Function becomes tight and close to the original function. | ||

# at | # at <math>\mu=0</math> the optimal solution is achieved. | ||

Summary: | Summary: | ||

Maximum Value of Objective function =12 <br> | Maximum Value of Objective function <math>=12</math> <br> | ||

Optimal points | Optimal points <math>X_{1} = 2 </math> and <math>X_{2} = 2</math> | ||

The Newton's Method can also be applied to solve linear programming problems as indicated in the "Theory and Algorithm" section above. The solution to linear programming problems as indicated in this section "Numerical Example", will be similar to quadratic equation as obtained above and will converge in one iteration. | |||

== Applications == | == Applications == | ||

Primal-Dual interior-point (PDIP) methods are commonly used in optimal power flow (OPF), in this case what is being looked is to maximize user utility and minimize operational cost satisfying operational and physical constraints. The solution to the OPF needs to be available to grid operators in few minutes or seconds due changes fluctuations in loads | Primal-Dual interior-point (PDIP) methods are commonly used in optimal power flow (OPF), in this case what is being looked is to maximize user utility and minimize operational cost satisfying operational and physical constraints. The solution to the OPF needs to be available to grid operators in few minutes or seconds due to changes and fluctuations in loads during power generation. Newton-based primal-dual interior point can achieve fast convergence in this OPF optimization problem. <ref> A. Minot, Y. M. Lu and N. Li, "A parallel primal-dual interior-point method for DC optimal power flow," 2016 Power Systems Computation Conference (PSCC), Genoa, 2016, pp. 1-7, doi: 10.1109/PSCC.2016.7540826. </ref> | ||

Another application of the PDIP is for the minimization of losses and cost in the generation and transmission in hydroelectric power systems. <ref> L. M. Ramos Carvalho and A. R. Leite Oliveira, "Primal-Dual Interior Point Method Applied to the Short Term Hydroelectric Scheduling Including a Perturbing Parameter," in IEEE Latin America Transactions, vol. 7, no. 5, pp. 533-538, Sept. 2009, doi: 10.1109/TLA.2009.5361190. </ref> | |||

PDIP are commonly used in imaging processing. One these applications is for image deblurring, in this case the constrained deblurring problem is formulated as primal-dual. The constrained primal-dual is solved using a semi-smooth Newton’s method. <ref> D. Krishnan, P. Lin and A. M. Yip, "A Primal-Dual Active-Set Method for Non-Negativity Constrained Total Variation Deblurring Problems," in IEEE Transactions on Image Processing, vol. 16, no. 11, pp. 2766-2777, Nov. 2007, doi: 10.1109/TIP.2007.908079. </ref> | |||

PDIP can be utilized to obtain a general formula for a shape derivative of the potential energy describing the energy release rate for curvilinear cracks. Problems on cracks and their evolution have important application in engineering and mechanical sciences. <ref> V. A. Kovtunenko, Primal–dual methods of shape sensitivity analysis for curvilinear cracks with nonpenetration, IMA Journal of Applied Mathematics, Volume 71, Issue 5, October 2006, Pages 635–657 </ref> | |||

== Conclusion == | == Conclusion == | ||

| Line 195: | Line 195: | ||

== References == | == References == | ||

<references /> | <references /> | ||

Latest revision as of 06:31, 21 December 2020

Authors: Tomas Lopez Lauterio, Rohit Thakur and Sunil Shenoy (SysEn 5800 Fall 2020)

Introduction

Linear programming problems seek to optimize linear functions given linear constraints. There are several applications of linear programming including inventory control, production scheduling, transportation optimization and efficient manufacturing processes. Simplex method has been a very popular method to solve these linear programming problems and has served these industries well for a long time. But over the past 40 years, there have been significant number of advances in different algorithms that can be used for solving these types of problems in more efficient ways, especially where the problems become very large scale in terms of variables and constraints.[1] [2] In early 1980s Karmarkar (1984) [3] published a paper introducing interior point methods to solve linear-programming problems. A simple way to look at differences between simplex method and interior point method is that a simplex method moves along the edges of a polytope towards a vertex having a lower value of the cost function, whereas an interior point method begins its iterations inside the polytope and moves towards the lowest cost vertex without regard for edges. This approach reduces the number of iterations needed to reach that vertex, thereby reducing computational time needed to solve the problem.

Lagrange Function

Before getting too deep into description of Interior point method, there are a few concepts that are helpful to understand. First key concept to understand is related to Lagrange function. Lagrange function incorporates the constraints into a modified objective function in such a way that a constrained minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (x^{*}) }

is connected to an unconstrained minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \left \{x^{*},\lambda ^{*} \right \} }

for the augmented objective function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle L\left ( x , \lambda \right ) }

, where the augmentation is achieved with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'p' }

Lagrange multipliers. [4][5]

To illustrate this point, consider a simple an optimization problem:

minimize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f\left ( x \right ) }

subject to: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle A \cdot x = b }

where, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle A \, \in \, R^{p\, \times \, n} }

is assumed to have a full row rank

Lagrange function can be laid out as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle L(x, \lambda ) = f(x) + \sum_{i=1}^{p}\lambda _{i}\cdot a_{i}(x)}

where, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\lambda ' }

introduced in this equation is called Lagrange Multiplier.

Newton's Method

Another key concept to understand is regarding solving linear and non-linear equations using Newton's methods.

Assume an unconstrained minimization problem in the form:

minimize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g\left ( x \right ) }

, where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g\left ( x \right ) }

is a real valued function with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'n' }

variables.

A local minimum for this problem will satisfy the following system of equations:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \left [ \frac{\partial g(x)}{\partial x_{1}} ..... \frac{\partial g(x)}{\partial x_{n}}\right ]^{T} = \left [ 0 ... 0 \right ]}

The Newton's iteration looks like:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{k+1} = x^{k} - \left [ \nabla ^{2} g(x^{k}) \right ]^{-1}\cdot \nabla g(x^{k})}

Theory and algorithm

Given a linear programming problem with constraint equations that have inequality terms, the inequality term is typically replaced with an equality term using slack variables. The new reformulation can be discontinuous in nature and to replace the discontinuous function with a smoother function, a logarithmic form of this reformulation is utilized. This nonlinear objective function is called "Logarithmic Barrier Function"

The process involves starting with formation of a primal-dual pair of linear programs and then using "Lagrangian function" form on the "Barrier function" to convert the constrained problems into unconstrained problems. These unconstrained problems are then solved using Newton's method as shown above.

Problem Formulation

Consider a combination of primal-dual problem below:

(Primal Problem formulation)

→ minimize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c^{T}x }

Subject to: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle Ax = b }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x \geq 0 }

(Dual Problem formulation)

→ maximize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle b^{T}y }

Subject to: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle A^{T}y + \lambda = c }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda \geq 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\lambda ' }

vector introduced represents the slack variables.

The Lagrangian functional form is used to configure two equations using "Logarithmic Barrier Function" for both primal and dual forms mentioned above:

Lagrangian equation for Primal using Logarithm Barrier Function : Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle L_{p}(x,y) = c^{T}\cdot x - \mu \cdot \sum_{j=1}^{n}log(x_{j}) - y^{T}\cdot (Ax - b) }

Lagrangian equation for Dual using Logarithm Barrier Function : Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle L_{d}(x,y,\lambda ) = b^{T}\cdot y + \mu \cdot \sum_{j=1}^{n}log(\lambda _{j}) - x^{T}\cdot (A^{T}y +\lambda - c) }

Taking the partial derivatives of Lp and Ld with respect to variables Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'x'\; '\lambda'\; 'y' }

, and forcing these terms to zero, we get the following equations:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle Ax = b }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x \geq 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle A^{T}y + \lambda = c }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda \geq 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{j}\cdot \lambda _{j} = \mu }

for j= 1,2,.......n

where, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu ' }

is strictly positive scaler parameter. For each Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu > 0 }

, the vectors in the set Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \left \{ x\left ( \mu \right ), y\left ( \mu \right ) , \lambda \left ( \mu \right )\right \} }

satisfying above equations, can we viewed as set of points in Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle R^{n} }

, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle R^{p} }

, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle R^{n} }

respectively, such that when Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu ' }

varies, the corresponding points form a set of trajectories called "Central Path". The central path lies in the "Interior" of the feasible regions. There is a sample illustration of "Central Path" method in figure to right. Starting with a positive value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu ' }

and as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu ' }

approaches 0, the optimal point is reached.

Let Diagonal[...] denote a diagonal matrix with the listed elements on its diagonal.

Define the following:

X = Diagonal [Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{1}^{0}, .... x_{n}^{0} }

]

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda }

= Diagonal (Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda _{1}^{0}, .... \lambda _{n}^{0} }

)

eT = (1 .....1) as vector of all 1's.

Using these newly defined terms, the equation above can be written as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X\cdot \lambda \cdot e = \mu \cdot e }

Iterations using Newton's Method

Employing the Newton's iterative method to solve the following equations:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle Ax - b = 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle A^{T}y + \lambda = c }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X\cdot \lambda \cdot e - \mu \cdot e = 0}

With definition of starting point that lies within feasible region as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \left ( x^{0},y^{0},\lambda ^{0} \right ) }

such that Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{0}> 0 \, and \lambda ^{0}> 0 }

.

Also defining 2 residual vectors for both the primal and dual equations:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \delta _{p} = b - A\cdot x^{0} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \delta _{d} = c - A^{0}\cdot y^{0} - \lambda ^{0} }

Applying Newton's Method to solve above equations:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} A & 0 & 0\\ 0 & A^{T} & 1\\ \lambda & 0 & X \end{bmatrix} \cdot \begin{bmatrix} \delta _{x}\\ \delta _{y}\\ \delta _{\lambda } \end{bmatrix} = \begin{bmatrix} \delta _{p}\\ \delta _{d}\\ \mu \cdot e - X\cdot \lambda \cdot e \end{bmatrix} }

So a single iteration of Newton's method involves the following equations. For each iteration, we solve for the next value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{k+1},y^{k+1},\lambda ^{k+1} }

:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (A\lambda ^{-1}XA^{T})\delta _{y} = b- \mu A\lambda^{-1} + A\lambda ^{-1}X\delta _{d} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \delta _{\lambda} = \delta _{d}\cdot A^{T}\delta _{y} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \delta _{x} = \lambda ^{-1}\left [ \mu \cdot e - X\lambda e -\lambda \delta _{z}\right ] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha _{p} = min\left \{ \frac{-x_{j}}{\delta _{x_{j}}} \right \} }

for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \delta x_{j} < 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha _{d} = min\left \{ \frac{-\lambda_{j}}{\delta _{\lambda_{j}}} \right \} }

for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \delta \lambda_{j} < 0 }

The value of the the following variables for next iteration (+1) is determined by:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{k+1} = x^{k} + \alpha _{p}\cdot \delta _{x} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y^{k+1} = y^{k} + \alpha _{d}\cdot \delta _{y} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda^{k+1} = \lambda^{k} + \alpha _{d}\cdot \delta _{\lambda} }

The quantities Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha _{p} }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha _{d} }

are positive with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 0\leq \alpha _{p},\alpha _{d}\leq 1 }

.

After each iteration of Newton's method, we assess the duality gap that is given by the expression below and compare it against a small value ε

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \frac{c^{T}x^{k}-b^{T}y^{k}}{1+\left | b^{T}y^{k} \right |} \leq \varepsilon }

The value of ε can be chosen to be something small 10-6, which essentially is the permissible duality gap for the problem.

Numerical Example

Maximize

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 3X_{1} + 3X_{2} }

such that

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} + X_{2} \leq 4, }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} \geq 0, }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{2} \geq 0, }

Barrier form of the above primal problem is as written below:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle P(X,\mu) = 3X_{1} + 3X_{2} + \mu.log(4-X_{1} - X_{2}) + \mu.log(X_{1}) + \mu.log(X_{2})}

The Barrier function is always concave, since the problem is a maximization problem, there will be one and only one solution. In order to find the maximum point on the concave function we take a derivate and set it to zero.

Taking partial derivative and setting to zero, we get the below equations

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \frac{\partial P(X,\mu)}{\partial X_{1}} = 3 - \frac{\mu}{(4-X_{1}-X_{2})} + \frac{\mu}{X_{1}} = 0}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \frac{\partial P(X,\mu)}{\partial X_{2}} = 3 - \frac{\mu}{(4-X_{1}-X_{2})} + \frac{\mu}{X_{2}} = 0}

Using above equations the following can be derived: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} = X_{2}}

Hence the following can be concluded

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 3 - \frac{\mu}{(4-2X_{1})} + \frac{\mu}{X_{1}} = 0 }

The above equation can be converted into a quadratic equation as below:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 6X_{1}^2 - 3X_{1}(4-\mu)-4\mu = 0}

The solution to the above quadratic equation can be written as below:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} = \frac{3(4-\mu)\pm(\sqrt{9(4-\mu)^2 + 96\mu} }{12} = X_{2}}

Taking only take the positive value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{2} }

from the above equation as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} \geq 0 }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{2} \geq 0}

we can solve Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1}}

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{2}}

for different values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu}

. The outcome of such iterations is listed in the table below.

| Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu} | Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1}} | Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{2}} | Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle P(X, \mu)} | Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x)} |

|---|---|---|---|---|

| 0 | 2 | 2 | 12 | 12 |

| 0.01 | 1.998 | 1.998 | 11.947 | 11.990 |

| 0.1 | 1.984 | 1.984 | 11.697 | 11.902 |

| 1 | 1.859 | 1.859 | 11.128 | 11.152 |

| 10 | 1.486 | 1.486 | 17.114 | 8.916 |

| 100 | 1.351 | 1.351 | 94.357 | 8.105 |

| 1000 | 1.335 | 1.335 | 871.052 | 8.011 |

From the above table it can be seen that:

- as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu} gets close to zero, the Barrier Function becomes tight and close to the original function.

- at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu=0} the optimal solution is achieved.

Summary:

Maximum Value of Objective function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle =12}

Optimal points Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{1} = 2 }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X_{2} = 2}

The Newton's Method can also be applied to solve linear programming problems as indicated in the "Theory and Algorithm" section above. The solution to linear programming problems as indicated in this section "Numerical Example", will be similar to quadratic equation as obtained above and will converge in one iteration.

Applications

Primal-Dual interior-point (PDIP) methods are commonly used in optimal power flow (OPF), in this case what is being looked is to maximize user utility and minimize operational cost satisfying operational and physical constraints. The solution to the OPF needs to be available to grid operators in few minutes or seconds due to changes and fluctuations in loads during power generation. Newton-based primal-dual interior point can achieve fast convergence in this OPF optimization problem. [6]

Another application of the PDIP is for the minimization of losses and cost in the generation and transmission in hydroelectric power systems. [7]

PDIP are commonly used in imaging processing. One these applications is for image deblurring, in this case the constrained deblurring problem is formulated as primal-dual. The constrained primal-dual is solved using a semi-smooth Newton’s method. [8]

PDIP can be utilized to obtain a general formula for a shape derivative of the potential energy describing the energy release rate for curvilinear cracks. Problems on cracks and their evolution have important application in engineering and mechanical sciences. [9]

Conclusion

The primal-dual interior point method is a good alternative to the simplex methods for solving linear programming problems. The primal dual method shows superior performance and convergence on many large complex problems. simplex codes are faster on small to medium problems, interior point primal-dual are much faster on large problems.

References

- ↑ "Practical Optimization - Algorithms and Engineering Applications" by Andreas Antoniou and Wu-Sheng Lu, ISBN-10: 0-387-71106-6

- ↑ "Linear Programming - Foundations and Extensions - 3rd edition" by Robert J Vanderbei, ISBN-113: 978-0-387-74387-5.

- ↑ N Karmarkar, "A new Polynomial - Time algorithm for linear programming", Combinatorica, VOl. 4, No. 8, 1984, pp. 373-395.

- ↑ Computational Experience with Primal-Dual Interior Point Method for Linear Programming" by Irvin Lustig, Roy Marsten, David Shanno

- ↑ "Practical Optimization - Algorithms and Engineering Applications" by Andreas Antoniou and Wu-Sheng Lu, ISBN-10: 0-387-71106-6

- ↑ A. Minot, Y. M. Lu and N. Li, "A parallel primal-dual interior-point method for DC optimal power flow," 2016 Power Systems Computation Conference (PSCC), Genoa, 2016, pp. 1-7, doi: 10.1109/PSCC.2016.7540826.

- ↑ L. M. Ramos Carvalho and A. R. Leite Oliveira, "Primal-Dual Interior Point Method Applied to the Short Term Hydroelectric Scheduling Including a Perturbing Parameter," in IEEE Latin America Transactions, vol. 7, no. 5, pp. 533-538, Sept. 2009, doi: 10.1109/TLA.2009.5361190.

- ↑ D. Krishnan, P. Lin and A. M. Yip, "A Primal-Dual Active-Set Method for Non-Negativity Constrained Total Variation Deblurring Problems," in IEEE Transactions on Image Processing, vol. 16, no. 11, pp. 2766-2777, Nov. 2007, doi: 10.1109/TIP.2007.908079.

- ↑ V. A. Kovtunenko, Primal–dual methods of shape sensitivity analysis for curvilinear cracks with nonpenetration, IMA Journal of Applied Mathematics, Volume 71, Issue 5, October 2006, Pages 635–657