Stochastic programming: Difference between revisions

| (39 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

Authors: Roohi Menon, Hangyu Zhou, Gerald Ogbonna, Vikram Raghavan (SYSEN 6800 Fall 2021)

== Introduction ==

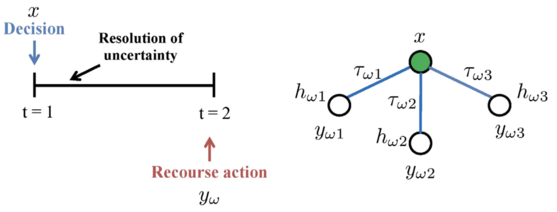

[[File:Screen Shot 2021-11-28 at 10.02.36 AM.png|thumb|557x557px|Two-stage stochastic programming scheme: conceptual representation is on the left; scenario tree is on the right, where <math>x</math> denotes the first stage decisions, <math>y_{\omega}</math> denotes the second stage decisions for each scenario <math>\omega</math>. <math>\tau_{\omega}, h_{\omega}</math> denote the probability and the constraints of each scenario, respectively. <ref name=":0">Li Can, Grossmann Ignacio E. [https://doi.org/10.3389/fceng.2020.622241 “A Review of Stochastic Programming Methods for Optimization of Process Systems Under Uncertainty,”] ''Frontiers in Chemical Engineering'' 2021, Vol. 2</ref>]]

Stochastic programming is a mathematical framework to help decision-making under uncertainty. <ref name=":0" /> Deterministic optimization frameworks like the linear program (LP), nonlinear program (NLP), mixed-integer linear program (MILP), or mixed-integer nonlinear program (MINLP) are well studied and play a vital role in solving many kinds of optimization problems. However, these formulations assume that one has perfect knowledge of the future outcome of the system and that the entire system is deterministic. <ref name=":1">J. Schif [https://www.eecs.tufts.edu/~aloupis/comp150/projects/Jeremy-S/2sLP-Report.pdf "The L Shaped Algorithm"]</ref> They tend to produce sub-optimal results in real-world situations where uncertainties significantly impact the system behavior. To address this problem, stochastic programming extends the deterministic optimization methodology by introducing random variables that model the uncertain nature of real-world problems, hedging against risk to find the decision with the best expected performance over all possible situations. With uncertainties being widespread, this general stochastic framework finds applications across sectors, including electricity generation, financial planning, supply chain management, process systems engineering, mitigation of climate change, and pollution control. <ref name=":0" />

Since its origin in the 1950s, the stochastic programming framework has been studied extensively. <ref name=":2">Dantzig, George B. [https://doi.org/10.1287/mnsc.1.3-4.197 “Linear Programming under Uncertainty.”] ''Management Science'', vol. 1, no. 3-4, 1955, pp. 197–206. </ref> A significant number of theoretical and algorithmic developments have been made to tackle uncertainty under different situations. To keep the investigation in-depth and focused, we limit our topic to two-stage stochastic programming, the simplest form, in which a single decision is made before the uncertainty is realized and is then followed by recourse actions. We will examine the most popular algorithm for solving such programs and discuss other aspects of this optimization framework.

== Theory, methodology, and/or algorithmic discussion ==

Modeling through stochastic programming is often adopted because of its proactive-reactive decision-making feature to address uncertainty. <ref name=":3"> Chu, Yunfei, and Fengqi You. [https://doi.org/10.1021/ie402621t “Integration of Scheduling and Dynamic Optimization of Batch Processes under Uncertainty: Two-Stage Stochastic Programming Approach and Enhanced Generalized Benders Decomposition Algorithm.”] ''Industrial & Engineering Chemistry Research'', vol. 52, no. 47, 2013, pp. 16851–16869.</ref> Two-stage stochastic programming (TSP) is helpful when a problem requires the analysis of policy scenarios but the associated system information is inherently uncertain. <ref name=":0" /> In a typical TSP, the decision variables of an optimization problem under uncertainty are divided into two stages. Decisions regarding the first-stage variables need to be made before the realization of the uncertain parameters. The first-stage decision variables are proactive: they hedge against uncertainty and ensure that the solution performs well under all uncertainty realizations. <ref name=":3" /> Once the uncertain events have been realized, it is possible to make further design improvements or operational adjustments through the values of the second-stage variables, also known as recourse, at a given cost. The second-stage variables are reactive in their response to the observed realization of uncertainty. <ref name=":3" /> Thus, each decision should be based only on the data available at the time it is made; future observations are not taken into consideration. <ref name=":4">Barik, S.K., Biswal, M.P. & Chakravarty, D. [https://doi.org/10.1007/s12597-012-0078-1 "Two-stage stochastic programming problems involving interval discrete random variables."] ''OPSEARCH'' 49, 280–298 (2012).</ref> Two-stage stochastic programming is suited for problems with a hierarchical structure, such as integrated process design, and planning and scheduling. <ref name=":3" />

=== Methodology ===

The classical two-stage stochastic linear program with fixed recourse <ref name=":5">Soares, Joao, et al. [https://doi.org/10.1109/tia.2017.2723339 “Two-Stage Stochastic Model Using Benders’ Decomposition for Large-Scale Energy Resource Management in Smart Grids.”] ''IEEE Transactions on Industry Applications'', vol. 53, no. 6, 2017, pp. 5905–5914.</ref> is given below:

<math> \min z=c^Tx + E_{\xi}[\min q(\omega)^Ty(\omega)] </math>

<math> s.t. \quad Ax = b </math>

<math> \qquad \quad T(\omega)x + Wy(\omega) = h(\omega) </math>

<math> \qquad \quad x \ge 0, y(\omega) \ge 0 </math>

Where <math>c</math> is a known vector in <math>\mathbb{R}^{n_1}</math>, <math>b</math> is a known vector in <math>\mathbb{R}^{m_1}</math>. <math>A</math> and <math>W</math> are known matrices of size <math>m_1 \times n_1</math> and <math>m_2 \times n_2</math> respectively. <math>W</math> is known as the recourse matrix.

The first-stage decisions are represented by the <math>n_1 \times 1</math> vector <math>x</math>. Corresponding to <math>x</math> are the first-stage vectors and matrices <math>c</math>, <math>b</math>, and <math>A</math>. In the second stage, a number of random events <math>\omega \in \Omega</math> may realize. For a given realization <math>\omega</math>, the second-stage problem data <math>q(\omega)</math>, <math>h(\omega)</math>, and <math>T(\omega)</math> become known. <ref name=":6">Birge, John R., and François Louveaux. [https://link.springer.com/book/10.1007/978-1-4614-0237-4 "Introduction to Stochastic Programming."] Springer, 2011. </ref>

=== Algorithm discussion ===

To solve two-stage stochastic linear programs more effectively, algorithms such as Benders decomposition or Lagrangian decomposition can be used. Benders decomposition was presented by J. F. Benders and is a decomposition method widely used to solve mixed-integer problems. The algorithm decomposes the main problem into a master problem and subproblems. The master problem is defined with only the first-stage decision variables. Once the first-stage decisions are fixed at an optimal solution of the master problem, the subproblems are solved, and valid inequalities in <math>x</math> are derived and added to the master problem. The master problem is then solved again, and the algorithm iterates until the upper and lower bounds converge. <ref name=":0" /> The Benders master problem is linear or mixed-integer and has fewer technical constraints, while the subproblems can be linear or nonlinear. The subproblems’ primary aim is to validate the feasibility of the master problem’s solution. <ref name=":5" /> Benders decomposition is also known as L-shaped decomposition because, once the first-stage variables <math>x</math> are fixed, the rest of the problem has a block-diagonal structure that can be decomposed by scenario and solved independently. <ref name=":0" />

... | ... | ||

We assume that the random vector <math>\xi</math> has finite support. Let <math>k=1,\ldots,K</math> index possible second stage realizations and let <math>p_k</math> be the corresponding probabilities. With that, we could write down the deterministic equivalent of the stochastic program. This form is created by associating one set of second-stage decisions (<math>y_k</math>) to each realization of <math>\xi</math>, i.e., to each realization of <math>q_k</math>, <math>h_k</math>, and <math>T_k</math>. This large-scale deterministic counterpart of the original stochastic program is known as the ''extensive form'':

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;">

<math>
\begin{align}
\min c^T x + \sum_{k=1}^{K} p_k & q_k^T y_k \\
s.t. \qquad \quad Ax &= b \\
T_kx + Wy_k &= h_k, &k=1,...,K \\
x \ge 0, y_k &\ge 0, &k=1,...,K
\end{align}
</math>

</div>

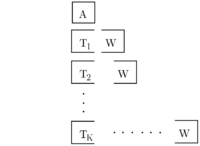

It is equivalent to the following formulation. The L-shaped block structure of this extensive form gives rise to the name L-shaped method.

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;">

[[File:Lshape.png|thumb|200px|Block structure of the two-stage extensive form <ref name=":6" />]]

<math>
\begin{array}{lccccccccccccc}
\min & c^T x & + & p_1 q_1^T y_1 & + & p_2q_2^T y_2 & + & \cdots & + & p_K q_K^T y_K & & \\
s.t. & Ax & & & & & & & & & = & b \\
& T_1 x & + & W y_1 & & & & & & & = & h_1 \\
& T_2 x & + & & & W y_2 & & & & & = & h_2 \\
& \vdots & & & & & & \ddots & & & & \vdots \\
& T_K x & + & & & & & & & W y_K & = & h_K \\
& x\ge 0 & , & y_1 \ge 0 & , & y_2 \ge 0 & & \ldots & & y_K \ge 0 \\
\end{array}
</math>

</div>
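The block structure can also be illustrated programmatically. The following is a minimal sketch, not taken from the cited references, that stacks <math>A</math>, the <math>T_k</math>, and a fixed recourse matrix <math>W</math> into the constraint matrix of the extensive form. The function name <code>extensive_form</code> and the small matrices are hypothetical and chosen only to make the pattern visible.

```python
def extensive_form(A, Ts, W):
    """Stack A, the T_k and a fixed W into the extensive-form constraint matrix.

    Columns are ordered as (x, y_1, ..., y_K). All inputs are lists of rows.
    """
    n1 = len(A[0])          # number of first-stage variables
    n2 = len(W[0])          # number of second-stage variables per scenario
    K = len(Ts)             # number of scenarios
    # First-stage rows: A x = b, with zeros in every y_k block.
    rows = [list(r) + [0] * (n2 * K) for r in A]
    # One block of rows per scenario: T_k x + W y_k = h_k.
    for k, T in enumerate(Ts):
        for t_row, w_row in zip(T, W):
            row = list(t_row) + [0] * (n2 * K)
            start = n1 + k * n2
            row[start:start + n2] = list(w_row)
            rows.append(row)
    return rows


# Hypothetical data: 2 first-stage variables, 2 recourse variables, 3 scenarios.
A = [[1, 1]]
W = [[1, -1]]
Ts = [[[2, 0]], [[0, 3]], [[1, 1]]]

M = extensive_form(A, Ts, W)
for row in M:
    # '*' marks a nonzero entry, '.' marks a structural zero.
    print("".join("*" if v != 0 else "." for v in row))
```

The first two columns (the <math>x</math> block) are dense, while each <math>y_k</math> block appears only in its own scenario rows, which is the structure the L-shaped method exploits.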

==== L-Shaped Algorithm ====

| Line 76: | Line 81: | ||

Let <math>(x^v, \theta ^v)</math> be an optimal solution. If there is no constraint (3), set <math>\theta ^v = -\infty</math> and determine <math>x^v</math> from the remaining constraints.

Step 2. For <math>k = 1,\ldots,K</math> solve the following linear program:

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | |||

<math> | |||

\begin{align} | |||

\min &w' = e^Tv^+ + e^Tv^- \\ | |||

s.t. &Wy + Iv^+ - Iv^- = h_k - T_kx^v \\ | |||

&y \ge 0, v^+ \ge 0, v^- \ge 0 \\ | |||

\end{align} | |||

</math> | |||

</div> | |||

where <math>e^T = (1,\ldots,1)</math> and <math>I</math> is the identity matrix. Proceed through the scenarios until, for some <math>k</math>, the optimal value <math>w' > 0</math>. In this case, let <math>\sigma ^v</math> be the associated simplex multipliers and define

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | |||

<math>D_{r+1} = (\sigma ^v)^T T_k</math> | |||

<math>d_{r+1} = (\sigma ^v)^T h_k</math> | |||

</div> | |||

to generate a constraint (called a <em>feasibility cut</em>) of type (2). Set <math>r=r+1</math>, add the constraint to the constraint set (2), and return to Step 1. If for all <math>k</math>, <math>w' = 0</math>, go to Step 3. | |||
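Constraint set (2) is not reproduced in this excerpt, so the following form of the cut is stated as an assumption, following the usual convention in Birge and Louveaux. <ref name=":6" /> By linear programming duality, <math>w' = (\sigma ^v)^T (h_k - T_k x^v) > 0</math>, whereas any <math>x</math> for which scenario <math>k</math> is feasible must satisfy <math>(\sigma ^v)^T (h_k - T_k x) \le 0</math>. The feasibility cut that removes <math>x^v</math> can therefore be written as

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;">

<math>D_{r+1} x \ge d_{r+1} \quad \Longleftrightarrow \quad (\sigma ^v)^T T_k x \ge (\sigma ^v)^T h_k</math>

</div>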

Step 3: For <math>k=1,\ldots,K</math> solve the linear program | |||

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | |||

<math> | |||

\begin{align} | |||

\min &w = q^T_k y \\ | |||

s.t. &Wy = h_k - T_k x^v & & (4)\\ | |||

&y \ge 0 | |||

\end{align} | |||

</math> | |||

</div> | |||

Let <math>\pi ^v_k</math> be the simplex multipliers associated with the optimal solution of Problem <math>k</math> of type (4). Define | |||

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | |||

<math>E_{s+1} = \sum_{k=1}^K p_k(\pi ^v_k)^T T_k</math> | |||

<math>e_{s+1} = \sum_{k=1}^K p_k(\pi ^v_k)^T h_k</math> | |||

</div> | |||

Let <math>w^v = e_{s+1}-E_{s+1}x^v</math>. If <math>\theta ^v \ge w^v</math>, stop; <math>x^v</math> is an optimal solution. Otherwise, set <math>s = s+1</math>, add the resulting optimality cut to the constraint set (3), and return to Step 1.
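For reference, the optimality cut generated in Step 3 can be written out explicitly. Constraint set (3) is not reproduced in this excerpt, so the form below is inferred from the cuts used in the numerical example. Since each <math>\pi ^v_k</math> remains dual feasible for every <math>x</math>, weak duality gives a lower bound on the expected recourse cost, and <math>\theta</math> is required to respect it:

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;">

<math>\theta \ge \sum_{k=1}^K p_k (\pi ^v_k)^T (h_k - T_k x) = e_{s+1} - E_{s+1} x \quad \Longleftrightarrow \quad E_{s+1} x + \theta \ge e_{s+1}</math>

</div>

At <math>x = x^v</math> the right-hand side equals <math>w^v</math>, so the stopping test <math>\theta ^v \ge w^v</math> checks whether the current master solution already satisfies the new cut.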

This method approximates <math>\mathcal{L}</math> using an outer linearization. This approximation is achieved by the master program (1)-(4), which finds a proposal <math>x</math> and then sends it to the second stage. Two types of constraints are sequentially added: (i) <em>feasibility cuts</em> (2) determining <math>\{x|\mathcal{L}(x) < +\infty\}</math> and (ii) <em>optimality cuts</em> (3), which are linear approximations to <math>\mathcal{L}</math> on its domain of finiteness.

== Numerical Example == | |||

To illustrate the algorithm mentioned above, let's take a look at the following numerical example. | |||

<math> | |||

\begin{align} | |||

z = \min 10{x_1} + 15&{x_2} + E_\xi (q_1y_1 + q_2y_2) \\ | |||

s.t. x_1 + x_2 &\le 12 \\ | |||

6y_1 + 10y_2 &\le 60x_1 \\ | |||

8y_1 + 5y_2 &\le 80x_2 \\ | |||

y_1 &\le d_1, y_2 \le d_2 \\ | |||

x_1 &\ge 4, x_2 \ge 2 \\ | |||

y_1, y_2 &\ge 0 | |||

\end{align} | |||

</math> | |||

where <math>\xi ^T = (d_1, d_2, q_1, q_2)</math> has 0.4 probability to be <math>\xi _1 = (50, 10, -2.4, -2.8)</math> and 0.6 probability to be <math>\xi _2 = (30, 30, -2.8, -3.2)</math>. | |||
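Because the second stage of this example has only two variables, its value for a fixed <math>x</math> and scenario can be checked by enumerating the vertices of the feasible region. The following is a minimal sketch under that assumption; the helper names <code>solve_2d_lp</code> and <code>recourse</code> are hypothetical, and vertex enumeration is used here only for illustration, not as the method of the cited references.

```python
from itertools import combinations


def solve_2d_lp(cost, rows, rhs, tol=1e-9):
    """Minimize cost . y over {y : rows[i] . y <= rhs[i]} by checking every vertex.

    Only suitable for tiny two-variable problems with a bounded feasible set.
    """
    best_val, best_y = None, None
    for i, j in combinations(range(len(rows)), 2):
        (a1, b1), (a2, b2) = rows[i], rows[j]
        det = a1 * b2 - a2 * b1
        if abs(det) < tol:              # parallel constraints define no vertex
            continue
        # Cramer's rule for the intersection of constraints i and j.
        y1 = (rhs[i] * b2 - rhs[j] * b1) / det
        y2 = (a1 * rhs[j] - a2 * rhs[i]) / det
        feasible = all(r[0] * y1 + r[1] * y2 <= b + 1e-7 for r, b in zip(rows, rhs))
        if feasible:
            val = cost[0] * y1 + cost[1] * y2
            if best_val is None or val < best_val:
                best_val, best_y = val, (y1, y2)
    return best_val, best_y


def recourse(x, scenario):
    """Second-stage value for first-stage decision x and scenario (d1, d2, q1, q2)."""
    d1, d2, q1, q2 = scenario
    rows = [(6, 10), (8, 5), (1, 0), (0, 1), (-1, 0), (0, -1)]
    rhs = [60 * x[0], 80 * x[1], d1, d2, 0, 0]
    return solve_2d_lp((q1, q2), rows, rhs)


scenarios = {(50, 10, -2.4, -2.8): 0.4, (30, 30, -2.8, -3.2): 0.6}
x = (4, 2)   # the first master solution used in Iteration 1 below
expected = 0.0
for xi, p in scenarios.items():
    w, y = recourse(x, xi)
    expected += p * w
    print(xi, round(w, 4), tuple(round(v, 4) for v in y))
print("expected recourse cost:", round(expected, 4))
```

For <math>x = (4, 2)^T</math> this reproduces the subproblem values reported in Iteration 1 (<math>w_1 = -61</math> and <math>w_2 = -83.84</math>).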

We should note that in this case, as <math>x \ge 0</math>, <math>d \ge 0</math> and <math>y_1, y_2 \ge 0</math>, the second stage is always feasible (for example, <math>y = 0</math> satisfies every second-stage constraint), which means <math> x \in K_2</math> always holds. So we can skip the feasibility cuts (Step 2) in all iterations. The <em>L</em>-shaped method iterations are shown below:

''' Iteration 1:''' | |||

<em>Step 1.</em> With no <math>\theta </math>, the master program is <math>z = min\{10x_1 + 15x_2 | x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2\}</math>. Result is <math>x^1 = (4, 2)^T</math>. Set <math>\theta ^1 = -\infty </math>. | |||

<em>Step 2.</em> No feasibility cut is needed. | |||

<em>Step 3.</em> | |||

* For <math>\xi = \xi _1</math>, solve: | |||

:<math> w = min \{-2.4y_1 - 2.8y_2 | 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 160, 0\le y_1 \le 50, 0\le y_2 \le 10 \} </math> | |||

:The solution is <math>w_1 = -61, y^T = (13.75, 10), \pi _1^T = (0, -0.3, 0, -1.3)</math>

* For <math>\xi = \xi _2</math>, solve:

:<math> w = min \{-2.8y_1 - 3.2y_2 | 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 160, 0\le y_1 \le 30, 0\le y_2 \le 30 \} </math>

:The solution is <math>w_2 = -83.84, y^T = (8, 19.2), \pi _2^T = (-0.232, -0.176, 0, 0)</math>

: Since <math>h_1 = (0, 0, 50, 10)^T, h_2 = (0, 0, 30, 30)^T </math>, we have

:<math>e_1 = 0.4\cdot \pi _1^T \cdot h_1 + 0.6\cdot \pi _2^T \cdot h_2 = 0.4 \times (-13) + 0.6 \times (0) = -5.2</math> | |||

: Here, the matrix <math>T</math> is the same in these two scenarios, which is <math>\begin{bmatrix} -60 & 0 \\ 0 & -80 \\ 0 & 0 \\ 0 & 0 \end{bmatrix}</math>. Therefore, we have | |||

:<math>E_1 = 0.4\cdot \pi _1^T \cdot T + 0.6\cdot \pi _2^T \cdot T = 0.4 \times (0, 24) + 0.6 \times (13.92, 14.08) = (8.352, 18.048)</math>

: Thus, <math>w^1 = -5.2 - (8.352, 18.048) \cdot x^1 = -74.704 > \theta ^1 = -\infty </math>, so we add the cut

:<math>8.352x_1 + 18.048x_2 + \theta \ge -5.2</math> | |||
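The arithmetic behind this cut can be reproduced with a few lines of code. This is a small sketch that simply evaluates the formulas for <math>e_{s+1}</math> and <math>E_{s+1}</math> from Step 3 with the multipliers reported above; the function name <code>optimality_cut</code> is hypothetical.

```python
def optimality_cut(probs, duals, hs, Ts):
    """Return (e, E) with e = sum_k p_k pi_k^T h_k and E = sum_k p_k pi_k^T T_k."""
    n = len(Ts[0][0])                   # number of first-stage variables
    e = 0.0
    E = [0.0] * n
    for p, pi, h, T in zip(probs, duals, hs, Ts):
        e += p * sum(pi_i * h_i for pi_i, h_i in zip(pi, h))
        for j in range(n):
            E[j] += p * sum(pi_i * row[j] for pi_i, row in zip(pi, T))
    return e, E


# Data of Iteration 1, copied from the text above.
T = [[-60, 0], [0, -80], [0, 0], [0, 0]]
probs = [0.4, 0.6]
duals = [(0, -0.3, 0, -1.3), (-0.232, -0.176, 0, 0)]
hs = [(0, 0, 50, 10), (0, 0, 30, 30)]

e1, E1 = optimality_cut(probs, duals, hs, [T, T])
x1 = (4, 2)
w1 = e1 - sum(Ej * xj for Ej, xj in zip(E1, x1))   # value of the cut at x^1
print(round(e1, 4), [round(v, 4) for v in E1], round(w1, 4))
```

The same function can be reused for the later iterations by substituting the multipliers reported there.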

''' Iteration 2:''' | |||

<em>Step 1.</em> The master program is

:<math> | |||

\begin{align} | |||

z = min\{10x_1 + 15x_2 + \theta | x_1 + x_2 \le 12&, x_1 \ge 4, x_2 \ge 2, \\ | |||

&8.352x_1 + 18.048x_2 + \theta \ge -5.2\} | |||

\end{align} | |||

</math> | |||

:Result is <math>z = -22.992, x^2 = (4, 8)^T, \theta ^2 = -182.992</math>. | |||
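:As a check on this result, using only the data above: <math>\theta</math> enters the objective with a positive coefficient, so the single cut is tight at the optimum and <math>\theta</math> can be eliminated:

:<math>\theta = -5.2 - 8.352x_1 - 18.048x_2 \quad \Rightarrow \quad z = \min \{1.648x_1 - 3.048x_2 - 5.2 \mid x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2\}</math>

:The coefficient of <math>x_1</math> is positive and that of <math>x_2</math> is negative, so <math>x_1</math> sits at its lower bound 4 and <math>x_2 = 12 - 4 = 8</math>, which gives

:<math>z = 1.648(4) - 3.048(8) - 5.2 = -22.992, \qquad \theta ^2 = -5.2 - 8.352(4) - 18.048(8) = -182.992</math>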

<em>Step 2.</em> No feasibility cut is needed. | |||

<em>Step 3.</em> | |||

* For <math>\xi = \xi _1</math>, solve: | |||

:<math> w = min \{-2.4y_1 - 2.8y_2 | 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 640, 0\le y_1 \le 50, 0\le y_2 \le 10 \} </math> | |||

:The solution is <math>w_1 = -96, y^T = (40, 0), \pi _1^T = (-0.4, 0, 0, 0)</math> | |||

* For <math>\xi = \xi _2</math>, solve: | |||

:<math> w = min \{-2.8y_1 - 3.2y_2 | 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 640, 0\le y_1 \le 30, 0\le y_2 \le 30 \} </math> | |||

:The solution is <math>w_2 = -103.2, y^T = (30, 6), \pi _2^T = (-0.32, 0, -0.88, 0)</math> | |||

: Thus, | |||

:<math> e_2 = 0.4\cdot \pi _1^T \cdot h_1 + 0.6\cdot \pi _2^T \cdot h_2 = 0.4 \times (0) + 0.6 \times (-26.4) = -15.84</math> | |||

:<math>E_2 = 0.4\cdot \pi _1^T \cdot T + 0.6\cdot \pi _2^T \cdot T = 0.4 \times (24, 0) + 0.6 \times (19.2, 0) = (21.12, 0)</math> | |||

: Since <math>w^2 = -15.84 - 21.12 \cdot 4 = -100.32 > -182.992</math>, add the cut

:<math>21.12x_1 + \theta \ge -15.84</math> | |||

''' Iteration 3:''' | |||

<em>Step 1.</em> The master program is | |||

:<math> | |||

\begin{align} | |||

z = min\{10x_1 &+ 15x_2 + \theta | x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2, \\ | |||

&8.352x_1 + 18.048x_2 + \theta \ge -5.2, 21.12x_1 + \theta \ge -15.84\} | |||

\end{align} | |||

</math> | |||

:Result is <math>z = -10.39375, x^3 = (6.6828, 5.3172)^T, \theta ^3 = -156.97994</math>. | |||

<em>Step 2.</em> No feasibility cut is needed. | |||

<em>Step 3.</em> | |||

* For <math>\xi = \xi _1</math>, solve: | |||

:<math> w = min \{-2.4y_1 - 2.8y_2 | 6y_1 + 10y_2 \le 400.968, 8y_1 + 5y_2 \le 425.376, 0\le y_1 \le 50, 0\le y_2 \le 10 \} </math> | |||

:The solution is <math>w_1 = -140.6128, y^T = (46.922, 10), \pi _1^T = (0, -0.3, 0, -1.3)</math> | |||

* For <math>\xi = \xi _2</math>, solve: | |||

:<math> w = min \{-2.8y_1 - 3.2y_2 | 6y_1 + 10y_2 \le 400.968, 8y_1 + 5y_2 \le 425.376, 0\le y_1 \le 30, 0\le y_2 \le 30 \} </math> | |||

:The solution is <math>w_2 = -154.7098, y^T = (30, 22.0968), \pi _2^T = (-0.32, 0, -0.88, 0)</math> | |||

: Thus, | |||

:<math> e_3 = 0.4\cdot \pi _1^T \cdot h_1 + 0.6\cdot \pi _2^T \cdot h_2 = 0.4 \times (-13) + 0.6 \times (-26.4) = -21.04</math> | |||

:<math>E_3 = 0.4\cdot \pi _1^T \cdot T + 0.6\cdot \pi _2^T \cdot T = 0.4 \times (0, 24) + 0.6 \times (19.2, 0) = (11.52, 9.6)</math> | |||

: Since <math>w^3 = -21.04 - 11.52 \cdot 6.6828 - 9.6 \cdot 5.3172 = -149.070976 > -156.97994</math>, add the cut

:<math>11.52x_1 + 9.6x_2 + \theta \ge -21.04</math> | |||

''' Iteration 4:''' | |||

<em>Step 1.</em> The master program is | |||

:<math> | |||

\begin{align} | |||

z = min\{10x_1 &+ 15x_2 + \theta | x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2, \\ | |||

&8.352x_1 + 18.048x_2 + \theta \ge -5.2, 21.12x_1 + \theta \ge -15.84, \\ | |||

&11.52x_1 + 9.6x_2 + \theta \ge -21.04\} | |||

\end{align} | |||

</math> | |||

:Result is <math>z = -8.895, x^4 = (4, 3.375)^T, \theta ^4 = -99.52</math>. | |||

<em>Step 2.</em> No feasibility cut is needed. | |||

<em>Step 3.</em> | |||

* For <math>\xi = \xi _1</math>, solve: | |||

:<math> w = min \{-2.4y_1 - 2.8y_2 | 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 270, 0\le y_1 \le 50, 0\le y_2 \le 10 \} </math> | |||

:The solution is <math>w_1 = -88.8, y^T = (30, 6), \pi _1^T = (-0.208, -0.144, 0, 0)</math> | |||

* For <math>\xi = \xi _2</math>, solve: | |||

:<math> w = min \{-2.8y_1 - 3.2y_2 | 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 270, 0\le y_1 \le 30, 0\le y_2 \le 30 \} </math> | |||

: There are multiple optimal solutions. Selecting one of them, we have | |||

:<math> e_4 = 0</math> | |||

:<math>E_4 = (13.344, 13.056)</math> | |||

: Since <math>w^4 = 0 - 13.344 \cdot 4 - 13.056 \cdot 3.375 = -97.44 > -99.52</math>, add the cut

:<math>13.344x_1 + 13.056x_2 + \theta \ge 0</math> | |||

''' Iteration 5:''' | |||

<em>Step 1.</em> The master program is | |||

:<math> | |||

\begin{align} | |||

z = min\{10x_1 &+ 15x_2 + \theta | x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2, \\ | |||

&8.352x_1 + 18.048x_2 + \theta \ge -5.2, 21.12x_1 + \theta \ge -15.84, \\ | |||

&11.52x_1 + 9.6x_2 + \theta \ge -21.04, 13.344x_1 + 13.056x_2 + \theta \ge 0\} | |||

\end{align} | |||

</math> | |||

:Result is <math>z = -8.5583, x^5 = (4.6667, 3.625)^T, \theta ^5 = -109.6</math>.

<em>Step 2.</em> No feasibility cut is needed.

<em>Step 3.</em>

* For <math>\xi = \xi _1</math>, solve: | |||

:<math> w = min \{-2.4y_1 - 2.8y_2 | 6y_1 + 10y_2 \le 280, 8y_1 + 5y_2 \le 290, 0\le y_1 \le 50, 0\le y_2 \le 10 \} </math> | |||

:The solution is <math>w_1 = -100, y^T = (30, 10), \pi _1^T = (0, -0.3, 0, -1.3)</math>

* For <math>\xi = \xi _2</math>, solve:

:<math> w = min \{-2.8y_1 - 3.2y_2 | 6y_1 + 10y_2 \le 280, 8y_1 + 5y_2 \le 290, 0\le y_1 \le 30, 0\le y_2 \le 30 \} </math>

:The solution is <math>w_2 = -116, y^T = (30, 10), \pi _2^T = (-0.232, -0.176, 0, 0)</math>

: Thus, | |||

:<math> e_5 = 0.4\cdot \pi _1^T \cdot h_1 + 0.6\cdot \pi _2^T \cdot h_2 = 0.4 \times (-13) + 0.6 \times (0) = -5.2</math>

:<math>E_5 = 0.4\cdot \pi _1^T \cdot T + 0.6\cdot \pi _2^T \cdot T = 0.4 \times (0, 24) + 0.6 \times (13.92, 14.08) = (8.352, 18.048)</math> | |||

: Since <math>w^5 = -5.2 - 8.352 \cdot 4.6667 - 18.048 \cdot 3.625 = -109.6 = \theta ^5</math>, stop.

:<math>x^5 = (4.6667, 3.625)^T</math> is the optimal solution.
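The stopping condition can be read as the lower bound from the master program meeting the upper bound obtained by evaluating the true expected recourse cost at the current point. The short sketch below, using only the rounded values reported in Iteration 5 (so agreement is only to about three decimal places), checks this numerically; the variable names are illustrative.

```python
# Values copied from Iteration 5 of the example above (rounded as in the text).
x = (4.6667, 3.625)
theta = -109.6
probs = (0.4, 0.6)
w = (-100.0, -116.0)                    # subproblem optimal values w_1, w_2
cut_e, cut_E = -5.2, (8.352, 18.048)    # e_5 and E_5

first_stage_cost = 10 * x[0] + 15 * x[1]
expected_recourse = sum(p * wk for p, wk in zip(probs, w))

upper_bound = first_stage_cost + expected_recourse   # cost of actually using x
lower_bound = first_stage_cost + theta               # master objective z
cut_value = cut_e - sum(Ej * xj for Ej, xj in zip(cut_E, x))   # w^5
gap = upper_bound - lower_bound

print(round(upper_bound, 4), round(lower_bound, 4), round(cut_value, 4))
```

Both bounds agree with the reported <math>z = -8.5583</math> up to rounding, which is why no further cut is added.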

== Applications ==

Apart from the process industry, two-stage linear stochastic programming finds application in other fields as well. For instance, in the optimal design of distributed energy systems, there are various uncertainties that need to be considered, such as the demand and supply of energy, economic factors like unit investment cost and energy price, and technical parameters like efficiency. Zhou et al. <ref name=":7">Zhe Zhou, Jianyun Zhang, Pei Liu, Zheng Li, Michael C. Georgiadis, Efstratios N. Pistikopoulos, [https://doi.org/10.1016/j.apenergy.2012.09.019 “A two-stage stochastic programming model for the optimal design of distributed energy systems,”] ''Applied Energy'', Volume 103, 2013, Pages 135-144, ISSN 0306-2619.</ref> developed a two-stage stochastic programming model for the optimal design of a distributed energy system with a stage decomposition-based solution strategy. The authors accounted for both demand and supply uncertainty. They used a genetic algorithm on the first-stage variables and the Monte Carlo method on the second-stage variables.

Another application of two-stage linear stochastic programming is in the bike-sharing system (BSS). The system needs to ensure that bikes are available at all stations per the given demand. To ensure this balance, redistribution trucks are used to transfer bikes from a bike-surplus station to a bike-deficient station. Such a problem is referred to as the bike repositioning problem (BRP). <ref name=":8">Qiong Tang, Zhuo Fu, Dezhi Zhang, Hao Guo, Minyi Li, [https://doi.org/10.1155/2020/8868892 "Addressing the Bike Repositioning Problem in Bike Sharing System: A Two-Stage Stochastic Programming Model,"] ''Scientific Programming'', vol. 2020, Article ID 8868892, 12 pages, 2020.</ref> Another challenge related to the BRP is the holding cost of the depot. While being transferred from one station to another, bikes could get damaged or lost, which could lead to an imbalance between demand and supply in the BSS. Bikes that cannot be balanced among the stations of the BSS are placed back at the depot, which increases the holding cost of the depot. To address these concerns, researchers developed a two-stage stochastic program that captures the uncertainty related to the redistribution demand within the system. <ref name=":8" /> In the first stage, before the realization of the redistribution demand, decisions regarding routing are made. In the second stage, decisions regarding loading/unloading at each station and depot are made. Holding cost is incorporated into the model, such that the model’s primary objective is to determine the best routes of the repositioning truck and the optimal loading/unloading quantities at each station and depot. The model is framed to minimize the expected total sum of the transportation cost, the penalty cost of all stations, and the holding cost of the depot.

== Conclusion ==

From the previous examples, it is evident that two-stage linear stochastic programming finds applicability across many areas, such as the petrochemical and pharmaceutical industries, carbon capture, and energy storage, among others. <ref name=":0" /> Stochastic programming can primarily be used to model two types of uncertainties: 1) exogenous uncertainty, which is the most widely considered one, and 2) endogenous uncertainty, where the realization of the uncertainty depends on the decision taken. The main challenge with respect to stochastic programming is that the type of problems that can be solved is limited. An ‘ideal’ problem would be multi-stage stochastic mixed-integer nonlinear programming under both exogenous and endogenous uncertainty with an arbitrary probability distribution that is stagewise dependent. <ref name=":0" /> However, current algorithms and computational resources are still limited in their ability to solve this ‘ideal’ problem.

== References ==

Latest revision as of 23:59, 15 December 2021

Authors: Roohi Menon, Hangyu Zhou, Gerald Ogbonna, Vikram Raghavan (SYSEN 6800 Fall 2021)

Introduction

Stochastic Programming is a mathematical framework to help decision-making under uncertainty. [1] Deterministic optimization frameworks like the linear program (LP), nonlinear program (NLP), mixed-integer program (MILP), or mixed-integer nonlinear program (MINLP) are well-studied, playing a vital role in solving all kinds of optimization problems. But this sort of formulation assumes that one has perfect knowledge of the future outcome of the system, and the entire system is deterministic. [2] They tend to produce sub-optimal results in some real-world situations where uncertainties significantly impact the system behavior. To address this problem, stochastic programming extends the deterministic optimization methodology by introducing random variables that model the uncertain nature of real-world problems, trying to hedge against risks and find the optimal decision with the best-expected performance under all possible situations. With uncertainties being widespread, this general stochastic framework finds applications in a broad spectrum of problems across sectors, from electricity generation, financial planning, supply chain management to process systems engineering, mitigation of climate change, and pollution control, and many others. [1]

Since its origin in the 1950s, the stochastic programming framework has been studied extensively. [3] A significant number of theoretical and algorithmic developments have been made to tackle uncertainty under different situations. To make an in-depth and fruitful investigation, we limited our topic to two-stage stochastic programming, the simplest form that focuses on situations with only one decision-making step. We will examine the most popular algorithm for solving such programs and discuss other aspects of this fascinating optimization framework.

Theory, methodology, and/or algorithmic discussion

Modeling through stochastic programming is often adopted because of its proactive-reactive decision-making feature to address uncertainty. [4] Two-stage stochastic programming (TSP) is helpful when a problem requires the analysis of policy scenarios, however, the associated system information is inherently characterized with uncertainty. [1] In a typical TSP, decision variables of an optimization problem under uncertainty are categorized into two periods. Decisions regarding the first-stage variables need to be made before the realization of the uncertain parameters. The first-stage decision variables are proactive for they hedge against uncertainty and ensure that the solution performs well under all uncertainty realizations. [4] Once the uncertain events have unfolded/realized, it is possible to further design improvements or make operational adjustments through the values of the second-stage variables, also known as recourse, at a given cost. The second-stage variables are reactive in their response to the observed realization of uncertainty. [4] Thus, optimal decisions should be made on data that is available at the time the decision is being made. In such a setup, future observations are not taken into consideration. [5] Two-stage stochastic programming is suited for problems with a hierarchical structure, such as integrated process design, and planning and scheduling. [4]

Methodology

The classical two-stage stochastic linear program with fixed recourse [6] is given below:

<math>\min z=c^Tx + E_{\xi}[\min q(\omega)^Ty(\omega)]</math>

<math>s.t. \quad Ax = b</math>

<math>\qquad \quad T(\omega)x + Wy(\omega) = h(\omega)</math>

<math>\qquad \quad x \ge 0, y(\omega) \ge 0</math>

where <math>c</math> is a known vector in <math>\mathbb{R}^{n_1}</math>, and <math>b</math> is a known vector in <math>\mathbb{R}^{m_1}</math>. <math>A</math> and <math>W</math> are known matrices of size <math>m_1 \times n_1</math> and <math>m_2 \times n_2</math>, respectively. <math>W</math> is known as the recourse matrix.

The first-stage decisions are represented by the <math>n_1 \times 1</math> vector <math>x</math>. Corresponding to <math>x</math> are the first-stage vectors and matrices <math>c</math>, <math>b</math>, and <math>A</math>. In the second stage, a number of random events <math>\omega \in \Omega</math> may realize. For a given realization <math>\omega</math>, the second-stage problem data <math>q(\omega)</math>, <math>h(\omega)</math>, and <math>T(\omega)</math> become known. [7]

Algorithm discussion

To solve two-stage stochastic linear programs more effectively, algorithms such as Benders decomposition or Lagrangean decomposition can be used. Benders decomposition was presented by J. F. Benders and is widely used to solve mixed-integer problems. The algorithm is based on the principle of decomposing the main problem into a master problem and subproblems. The master problem is defined with only the first-stage decision variables. Once the first-stage decisions are fixed at an optimal solution of the master problem, the subproblems are solved, and valid inequalities in <math>x</math> are derived and added to the master problem. The master problem is then solved again, and the algorithm iterates until the upper and lower bounds converge. [1] The Benders master problem is linear or mixed-integer and has fewer technical constraints, while the subproblems can be linear or nonlinear. The subproblems’ primary aim is to validate the feasibility of the master problem’s solution. [6] Benders decomposition is also known as L-shaped decomposition because, once the first-stage variables <math>x</math> are fixed, the rest of the problem has a block-diagonal structure. This structure can be decomposed by scenario, and the scenario subproblems can be solved independently. [1]

... We assume that the random vector <math>\xi</math> has finite support. Let <math>k=1,\ldots,K</math> index the possible second-stage realizations and let <math>p_k</math> be the corresponding probabilities. With that, we can write down the deterministic equivalent of the stochastic program. This form is created by associating one set of second-stage decisions (<math>y_k</math>) with each realization of <math>\xi</math>, i.e., with each realization of <math>q_k</math>, <math>h_k</math>, and <math>T_k</math>. This large-scale deterministic counterpart of the original stochastic program is known as the extensive form:

<math>\begin{align} \min c^T x + \sum_{k=1}^{K} p_k & q_k^T y_k \\ s.t. \qquad \quad Ax &= b \\ T_kx + Wy_k &= h_k, &k=1,...,K \\ x \ge 0, y_k &\ge 0, &k=1,...,K \\ \end{align}</math>

It is equivalent to the following formulation, written out scenario by scenario. The L-shaped block structure of this extensive form gives rise to the name L-shaped method.

<math>\begin{array}{lccccccccccccc} \min & c^T x & + & p_1 q_1^T y_1 & + & p_2q_2^T y_2 & + & \cdots & + & p_K q_K^T y_K & & \\ s.t. & Ax & & & & & & & & & = & b \\ & T_1 x & + & W y_1 & & & & & & & = & h_1 \\ & T_2 x & + & & & W y_2 & & & & & = & h_2 \\ & \vdots & & & & & & \ddots & & & & \vdots \\ & T_K x & + & & & & & & & W y_K & = & h_K \\ & x\ge 0 & , & y_1 \ge 0 & , & y_2 \ge 0 & & \ldots & & y_K \ge 0 \\ \end{array}</math>
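The block layout of the extensive form can be made concrete with a small sketch. The function name `build_extensive_form` and the toy numbers are illustrative assumptions, not part of the original text; matrices are plain lists of rows and the columns are ordered as x, y_1, ..., y_K.

```python
def build_extensive_form(c, A, W, scenarios):
    """Assemble the objective vector and constraint rows of the extensive form.

    scenarios is a list of (p_k, q_k, T_k) tuples; matrices are lists of rows.
    Column order is x, y_1, ..., y_K.
    """
    n1, n2, K = len(c), len(W[0]), len(scenarios)
    # Objective: c for x, then p_k * q_k for each block y_k.
    objective = list(c)
    for p, q, _ in scenarios:
        objective += [p * qi for qi in q]
    # First-stage rows: [A 0 ... 0].
    rows = [list(a) + [0.0] * (n2 * K) for a in A]
    # Scenario rows: [T_k 0 ... W ... 0], with W placed in the k-th block.
    for k, (_, _, T) in enumerate(scenarios):
        for t_row, w_row in zip(T, W):
            row = list(t_row) + [0.0] * (n2 * K)
            start = n1 + k * n2
            row[start:start + n2] = list(w_row)
            rows.append(row)
    return objective, rows


# Toy data (hypothetical): one first-stage row, two scenarios, 2x2 recourse matrix.
c = [1.0, 2.0]
A = [[1.0, 1.0]]
W = [[1.0, 2.0], [3.0, 4.0]]
scenarios = [
    (0.4, [10.0, 20.0], [[5.0, 6.0], [7.0, 8.0]]),
    (0.6, [30.0, 40.0], [[9.0, 10.0], [11.0, 12.0]]),
]
objective, rows = build_extensive_form(c, A, W, scenarios)
for row in rows:
    print(row)
```

Printing the rows shows the first-stage columns filled in every row and one copy of W per scenario along the diagonal. The number of columns grows linearly with K, which is the reason decomposition is attractive for large scenario sets.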

L-Shaped Algorithm

Step 0. Set <math>r=s=v=0</math>.

Step 1. Set <math>v = v+1</math>. Solve the following linear program (master program):

<math>\begin{align} \min z =c^Tx &+ \theta\\ s.t. \quad Ax &=b & & & (1) \\ D_{\ell}x &\ge d_{\ell}, & \ell = 1, \ldots, r & & (2)\\ E_{\ell}x + \theta &\ge e_{\ell}, & \ell = 1, \ldots, s & & (3) \\ x &\ge 0, & \theta \in \mathbb{R} & & (4) \end{align}</math>

Let <math>(x^v, \theta ^v)</math> be an optimal solution. If there is no constraint (3), set <math>\theta ^v = -\infty</math>; <math>x^v</math> is then defined by the remaining constraints.

Step 2. For <math>k = 1,\ldots,K</math> solve the following linear program:

<math>\begin{align} \min &w' = e^Tv^+ + e^Tv^- \\ s.t. &Wy + Iv^+ - Iv^- = h_k - T_kx^v \\ &y \ge 0, v^+ \ge 0, v^- \ge 0 \\ \end{align}</math>

where <math>e^T = (1,\ldots,1)</math> and <math>I</math> is the identity matrix, until for some <math>k</math> the optimal value <math>w' > 0</math>. In this case, let <math>\sigma ^v</math> be the associated simplex multipliers and define

<math>D_{r+1} = (\sigma ^v)^T T_k</math>

<math>d_{r+1} = (\sigma ^v)^T h_k</math>

to generate a constraint (called a feasibility cut) of type (2). Set <math>r=r+1</math>, add the constraint to the constraint set (2), and return to Step 1. If for all <math>k</math>, <math>w' = 0</math>, go to Step 3.
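As a minimal sketch of how a feasibility cut is assembled, consider a one-dimensional second stage chosen purely for illustration (it is not from the original text): y = 1 - x with y ≥ 0, so W = [1], T = [1], h = [1], and the second stage is feasible only for x ≤ 1. At the proposal x = 3 the Step 2 problem has optimal value w' = 2, and working the dual by hand gives the multiplier σ = -1. The helper names below are assumptions.

```python
def feasibility_cut(sigma, T_k, h_k):
    """Return (D, d) for the cut D x >= d built from the multipliers sigma."""
    n1 = len(T_k[0])
    # D = sigma^T T_k, computed column by column.
    D = [sum(s * row[j] for s, row in zip(sigma, T_k)) for j in range(n1)]
    # d = sigma^T h_k.
    d = sum(s * h for s, h in zip(sigma, h_k))
    return D, d


def satisfies(D, d, x, tol=1e-9):
    """Check whether x satisfies D x >= d up to a small tolerance."""
    return sum(Dj * xj for Dj, xj in zip(D, x)) >= d - tol


# Hand-derived multiplier for the toy problem at the infeasible proposal x = 3.
D, d = feasibility_cut([-1.0], [[1.0]], [1.0])
print(D, d)
print(satisfies(D, d, [3.0]), satisfies(D, d, [1.0]))
```

The resulting cut reads -x ≥ -1, i.e. x ≤ 1: it removes the infeasible proposal x = 3 while keeping every first-stage decision for which the toy second stage is feasible.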

Step 3. For <math>k=1,\ldots,K</math> solve the linear program

<math>\begin{align} \min &w = q^T_k y \\ s.t. &Wy = h_k - T_k x^v & & (5)\\ &y \ge 0 \end{align}</math>

Let <math>\pi ^v_k</math> be the simplex multipliers associated with the optimal solution of Problem <math>k</math> of type (5). Define

<math>E_{s+1} = \sum_{k=1}^K p_k(\pi ^v_k)^T T_k</math>

<math>e_{s+1} = \sum_{k=1}^K p_k(\pi ^v_k)^T h_k</math>

Let <math>w^v = e_{s+1}-E_{s+1}x^v</math>. If <math>\theta ^v \ge w^v</math>, stop; <math>x^v</math> is an optimal solution. Otherwise, set <math>s = s+1</math>, add the resulting optimality cut to the constraint set (3), and return to Step 1.

This method approximates the expected second-stage (recourse) cost <math>\mathcal{L}</math> using an outer linearization. This approximation is achieved by the master program (1)-(4), which finds a proposal <math>x</math> and sends it to the second stage. Two types of constraints are sequentially added: (i) feasibility cuts (2), which determine <math>\{x \mid \mathcal{L}(x) < +\infty\}</math>, and (ii) optimality cuts (3), which are linear approximations to <math>\mathcal{L}</math> on its domain of finiteness.
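Why an optimality cut never cuts off the true recourse cost can be sketched with LP duality. The symbol <math>Q_k(x)</math> is notation introduced here for illustration (it does not appear in the original text): it denotes the optimal value of the scenario-<math>k</math> problem in Step 3 for a given <math>x</math>, assumed feasible and bounded, so that <math>\mathcal{L}(x) = \sum_{k=1}^K p_k Q_k(x)</math>.

<math>\begin{align} Q_k(x) &= \min\{q_k^T y \mid Wy = h_k - T_k x,\ y \ge 0\} \\ &= \max\{\pi^T (h_k - T_k x) \mid W^T \pi \le q_k\} \\ &\ge (\pi_k^v)^T (h_k - T_k x) \quad \text{for all } x \end{align}</math>

The inequality holds because the dual feasible set does not depend on <math>x</math>, so <math>\pi_k^v</math> remains dual feasible for every <math>x</math>; it holds with equality at <math>x = x^v</math>. Weighting by <math>p_k</math> and summing over the scenarios gives

<math>\mathcal{L}(x) \ge \sum_{k=1}^K p_k (\pi_k^v)^T h_k - \sum_{k=1}^K p_k (\pi_k^v)^T T_k x = e_{s+1} - E_{s+1} x</math>

so <math>E_{s+1}x + \theta \ge e_{s+1}</math> is a valid lower bound on <math>\theta</math>, and <math>w^v = e_{s+1} - E_{s+1}x^v</math> equals <math>\mathcal{L}(x^v)</math>.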

Numerical Example

To illustrate the algorithm mentioned above, let's take a look at the following numerical example.

<math>\begin{align} z = \min 10{x_1} + 15&{x_2} + E_\xi (q_1y_1 + q_2y_2) \\ s.t. \quad x_1 + x_2 &\le 12 \\ 6y_1 + 10y_2 &\le 60x_1 \\ 8y_1 + 5y_2 &\le 80x_2 \\ y_1 &\le d_1, y_2 \le d_2 \\ x_1 &\ge 4, x_2 \ge 2 \\ y_1, y_2 &\ge 0 \end{align}</math>

where <math>\xi ^T = (d_1, d_2, q_1, q_2)</math> takes the value <math>\xi _1 = (50, 10, -2.4, -2.8)</math> with probability 0.4 and the value <math>\xi _2 = (30, 30, -2.8, -3.2)</math> with probability 0.6.

We should note that in this case, as <math>x \ge 0</math>, <math>d \ge 0</math> and <math>y_1, y_2 \ge 0</math>, the second stage is always feasible (<math>y = 0</math> satisfies every second-stage constraint), which means <math>x \in K_2</math> always holds, where <math>K_2</math> denotes the set of first-stage decisions for which the second stage is feasible. So we can skip the feasibility cuts (Step 2) in all iterations. The L-shaped method iterations are shown below:

Iteration 1:

Step 1. With no <math>\theta</math>, the master program is <math>z = \min\{10x_1 + 15x_2 \mid x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2\}</math>. The result is <math>x^1 = (4, 2)^T</math>. Set <math>\theta ^1 = -\infty</math>.

Step 2. No feasibility cut is needed.

Step 3.

- For <math>\xi = \xi _1</math>, solve:

- <math>w = \min \{-2.4y_1 - 2.8y_2 \mid 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 160, 0\le y_1 \le 50, 0\le y_2 \le 10 \}</math>

- The solution is <math>w_1 = -61, y^T = (13.75, 10), \pi _1^T = (0, -0.3, 0, -1.3)</math>

- For <math>\xi = \xi _2</math>, solve:

- <math>w = \min \{-2.8y_1 - 3.2y_2 \mid 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 160, 0\le y_1 \le 30, 0\le y_2 \le 30 \}</math>

- The solution is <math>w_2 = -83.84, y^T = (8, 19.2), \pi _2^T = (-0.232, -0.176, 0, 0)</math>

- Since <math>h_1 = (0, 0, 50, 10)^T, h_2 = (0, 0, 30, 30)^T</math>, we have

- <math>e_1 = 0.4\cdot \pi _1^T \cdot h_1 + 0.6\cdot \pi _2^T \cdot h_2 = 0.4 \times (-13) + 0.6 \times (0) = -5.2</math>

- Here, the matrix <math>T</math> is the same in these two scenarios, which is <math>\begin{bmatrix} -60 & 0 \\ 0 & -80 \\ 0 & 0 \\ 0 & 0 \end{bmatrix}</math>. Therefore, we have

- <math>E_1 = 0.4\cdot \pi _1^T \cdot T + 0.6\cdot \pi _2^T \cdot T = 0.4 \times (0, 24) + 0.6 \times (13.92, 14.08) = (8.352, 18.048)</math>

- Since <math>w^1 = -5.2 - (8.352, 18.048) \cdot x^1 = -74.704 > \theta ^1 = -\infty</math>, we add the cut

- <math>8.352x_1 + 18.048x_2 + \theta \ge -5.2</math>
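The arithmetic of Iteration 1 can be re-checked with a few lines of plain Python. This is only a sketch: the function name `optimality_cut` is an assumption, and the multipliers are taken as reported above rather than computed by an LP solver.

```python
def optimality_cut(probs, duals, T_list, h_list):
    """Aggregate scenario multipliers into the cut E x + theta >= e."""
    n1 = len(T_list[0][0])
    E = [0.0] * n1
    e = 0.0
    for p, pi, T, h in zip(probs, duals, T_list, h_list):
        for j in range(n1):
            # j-th entry of p_k * pi_k^T T_k
            E[j] += p * sum(pi_i * row[j] for pi_i, row in zip(pi, T))
        # p_k * pi_k^T h_k
        e += p * sum(pi_i * h_i for pi_i, h_i in zip(pi, h))
    return E, e


# Data of the example; T is the same in both scenarios.
T = [[-60.0, 0.0], [0.0, -80.0], [0.0, 0.0], [0.0, 0.0]]
probs = [0.4, 0.6]
h_list = [[0.0, 0.0, 50.0, 10.0], [0.0, 0.0, 30.0, 30.0]]
# Multipliers reported in Step 3 of Iteration 1.
duals = [[0.0, -0.3, 0.0, -1.3], [-0.232, -0.176, 0.0, 0.0]]

E, e = optimality_cut(probs, duals, [T, T], h_list)
x_iter1 = [4.0, 2.0]
w = e - sum(Ej * xj for Ej, xj in zip(E, x_iter1))
print(E, e, w)
```

Up to floating-point rounding, this reproduces E_1 = (8.352, 18.048), e_1 = -5.2 and w^1 = -74.704. The last value also equals 0.4 × (-61) + 0.6 × (-83.84), the expected second-stage cost at x^1, as expected from duality.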

Iteration 2:

Step 1. The master program is

- <math>\begin{align} z = \min\{10x_1 + 15x_2 + \theta \mid x_1 + x_2 \le 12&, x_1 \ge 4, x_2 \ge 2, \\ &8.352x_1 + 18.048x_2 + \theta \ge -5.2\} \end{align}</math>

- The result is <math>z = -22.992, x^2 = (4, 8)^T, \theta ^2 = -182.992</math>.
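With a single optimality cut, θ sits exactly on that cut at the optimum, so the master objective becomes linear in x and it is enough to compare the three corners of the first-stage triangle. The sketch below does this by hand instead of calling an LP solver; the helper names are assumptions.

```python
# The first-stage polytope {x1 + x2 <= 12, x1 >= 4, x2 >= 2} is a triangle.
vertices = [(4.0, 2.0), (10.0, 2.0), (4.0, 8.0)]
# (E_l, e_l) pairs, each meaning E_l x + theta >= e_l.
cuts = [((8.352, 18.048), -5.2)]


def theta_lower_bound(x, cuts):
    """Smallest theta allowed by the cuts at a given x."""
    return max(e - sum(Ej * xj for Ej, xj in zip(E, x)) for E, e in cuts)


def master_value(x, cuts):
    """Master objective 10 x1 + 15 x2 + theta with theta at its lower bound."""
    return 10.0 * x[0] + 15.0 * x[1] + theta_lower_bound(x, cuts)


best = min(vertices, key=lambda x: master_value(x, cuts))
print(best, master_value(best, cuts), theta_lower_bound(best, cuts))
```

The corner (4, 8) gives the smallest value, matching the reported z and θ up to rounding. This shortcut relies on there being only one cut: once several cuts are present the objective is piecewise linear in x, and the optimum may lie where two cuts intersect rather than at a corner of the triangle.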

Step 2. No feasibility cut is needed.

Step 3.

- For <math>\xi = \xi _1</math>, solve:

- <math>w = \min \{-2.4y_1 - 2.8y_2 \mid 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 640, 0\le y_1 \le 50, 0\le y_2 \le 10 \}</math>

- The solution is <math>w_1 = -96, y^T = (40, 0), \pi _1^T = (-0.4, 0, 0, 0)</math>

- For <math>\xi = \xi _2</math>, solve:

- <math>w = \min \{-2.8y_1 - 3.2y_2 \mid 6y_1 + 10y_2 \le 240, 8y_1 + 5y_2 \le 640, 0\le y_1 \le 30, 0\le y_2 \le 30 \}</math>

- The solution is <math>w_2 = -103.2, y^T = (30, 6), \pi _2^T = (-0.32, 0, -0.88, 0)</math>

- Thus,

- <math>e_2 = 0.4\cdot \pi _1^T \cdot h_1 + 0.6\cdot \pi _2^T \cdot h_2 = 0.4 \times (0) + 0.6 \times (-26.4) = -15.84</math>

- <math>E_2 = 0.4\cdot \pi _1^T \cdot T + 0.6\cdot \pi _2^T \cdot T = 0.4 \times (24, 0) + 0.6 \times (19.2, 0) = (21.12, 0)</math>

- Since <math>w^2 = -15.84 - 21.12 \cdot 4 = -100.32 > \theta ^2 = -182.992</math>, add the cut

- <math>21.12x_1 + \theta \ge -15.84</math>
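The second-stage problems have only two variables, so their optimal values can be cross-checked by brute force: intersect every pair of constraint lines, keep the feasible points, and take the best. This is a sketch for tiny bounded problems only, not the simplex method an LP solver would use, and the names `solve_2d_lp` and `second_stage` are assumptions.

```python
from itertools import combinations


def solve_2d_lp(cost, constraints, tol=1e-9):
    """Minimize cost . y over {y : a . y <= b for (a, b) in constraints}."""
    best = None
    for (a1, b1), (a2, b2) in combinations(constraints, 2):
        det = a1[0] * a2[1] - a1[1] * a2[0]
        if abs(det) < tol:
            continue  # parallel lines, no unique intersection
        # Cramer's rule for the intersection of the two constraint lines.
        y = ((b1 * a2[1] - b2 * a1[1]) / det, (a1[0] * b2 - a2[0] * b1) / det)
        if all(a[0] * y[0] + a[1] * y[1] <= b + tol for a, b in constraints):
            value = cost[0] * y[0] + cost[1] * y[1]
            if best is None or value < best[0]:
                best = (value, y)
    return best


def second_stage(x, d, q):
    """Second-stage problem of the example for first-stage x and scenario (d, q)."""
    constraints = [
        ((6.0, 10.0), 60.0 * x[0]),
        ((8.0, 5.0), 80.0 * x[1]),
        ((1.0, 0.0), d[0]),
        ((0.0, 1.0), d[1]),
        ((-1.0, 0.0), 0.0),
        ((0.0, -1.0), 0.0),
    ]
    return solve_2d_lp(q, constraints)


x_iter2 = (4.0, 8.0)
w1, y_s1 = second_stage(x_iter2, d=(50.0, 10.0), q=(-2.4, -2.8))
w2, y_s2 = second_stage(x_iter2, d=(30.0, 30.0), q=(-2.8, -3.2))
print(w1, y_s1, w2, y_s2)
print(0.4 * w1 + 0.6 * w2)
```

The enumeration recovers the reported optimal values and second-stage decisions for Iteration 2, and the probability-weighted sum agrees with w^2. It does not return the multipliers π, which is why an LP solver that reports dual values is needed in practice.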

Iteration 3:

Step 1. The master program is

- <math>\begin{align} z = \min\{10x_1 &+ 15x_2 + \theta \mid x_1 + x_2 \le 12, x_1 \ge 4, x_2 \ge 2, \\ &8.352x_1 + 18.048x_2 + \theta \ge -5.2, 21.12x_1 + \theta \ge -15.84\} \end{align}</math>

- The result is <math>z = -10.39375, x^3 = (6.6828, 5.3172)^T, \theta ^3 = -156.97994</math>.
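The reported master solution of Iteration 3 can be reproduced under one assumption about which constraints are active: that x_1 + x_2 = 12 and that both optimality cuts hold with equality. The sketch below solves the resulting linear equation; it checks consistency with the reported numbers and is not a substitute for solving the LP.

```python
# Cuts from Iterations 1 and 2, written as theta >= e - E . x.
E1, e1 = (8.352, 18.048), -5.2
E2, e2 = (21.12, 0.0), -15.84

# Assumption: x1 + x2 = 12 and both cuts are tight at the optimum.
# Substituting x2 = 12 - x1 and equating the two cut values gives
# slope * x1 = rhs.
slope = (E1[1] - E1[0]) - (E2[1] - E2[0])
rhs = (e2 - 12.0 * E2[1]) - (e1 - 12.0 * E1[1])
x1 = rhs / slope
x2 = 12.0 - x1
theta = e2 - E2[0] * x1 - E2[1] * x2
z = 10.0 * x1 + 15.0 * x2 + theta
print(round(x1, 4), round(x2, 4), round(theta, 3), round(z, 3))
```

The values agree with the reported x^3, θ^3 and z to the printed precision, which supports the reading that the Iteration 3 optimum lies at the intersection of the first two cuts on the capacity constraint.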

Step 2. No feasibility cut is needed.

Step 3.

- For Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \xi = \xi _1} , solve:

- <math>w = \min \{-2.4y_1 - 2.8y_2 \mid 6y_1 + 10y_2 \le 400.968,\ 8y_1 + 5y_2 \le 425.376,\ 0\le y_1 \le 50,\ 0\le y_2 \le 10 \}</math>

- The solution is <math>w_1 = -140.6128,\ y^T = (46.922, 10),\ \pi_1^T = (0, -0.3, 0, -1.3)</math>

- For <math>\xi = \xi_2</math>, solve:

- <math>w = \min \{-2.8y_1 - 3.2y_2 \mid 6y_1 + 10y_2 \le 400.968,\ 8y_1 + 5y_2 \le 425.376,\ 0\le y_1 \le 30,\ 0\le y_2 \le 30 \}</math>

- The solution is <math>w_2 = -154.7098,\ y^T = (30, 22.0968),\ \pi_2^T = (-0.32, 0, -0.88, 0)</math>

- Thus,

- <math>e_3 = 0.4\cdot \pi_1^T \cdot h_1 + 0.6\cdot \pi_2^T \cdot h_2 = 0.4 \times (-13) + 0.6 \times (-26.4) = -21.04</math>

- <math>E_3 = 0.4\cdot \pi_1^T \cdot T + 0.6\cdot \pi_2^T \cdot T = 0.4 \times (0, 24) + 0.6 \times (19.2, 0) = (11.52, 9.6)</math>

- Since <math>w_3 = -21.04 - 11.52 \cdot 6.6828 - 9.6 \cdot 5.3172 = -149.070976 > -156.97994</math>, add the cut

- <math>11.52x_1 + 9.6x_2 + \theta \ge -21.04</math>
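The arithmetic behind this optimality cut can be reproduced with a few lines of plain Python. This is only a sketch: the matrix `T` and the right-hand sides `rhs` are not stated in this part of the text and are inferred from the subproblem constraints (400.968 = 60 × 6.6828, 425.376 = 80 × 5.3172, and the upper bounds on <math>y</math>), and the helper names `dot` and `optimality_cut` are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def optimality_cut(probs, duals, rhs, T):
    """Return (e, E) with e = sum_k p_k * pi_k.h_k and E = sum_k p_k * pi_k^T T."""
    n_cols = len(T[0])
    e = sum(p * dot(pi, h) for p, pi, h in zip(probs, duals, rhs))
    E = [
        sum(p * dot(pi, [row[j] for row in T]) for p, pi in zip(probs, duals))
        for j in range(n_cols)
    ]
    return e, E


# Assumed data: T and h are inferred from the constraints, not quoted from the text.
T = [[-60.0, 0.0], [0.0, -80.0], [0.0, 0.0], [0.0, 0.0]]
probs = [0.4, 0.6]
duals = [(0.0, -0.3, 0.0, -1.3), (-0.32, 0.0, -0.88, 0.0)]   # pi_1, pi_2 from Step 3
rhs = [(0.0, 0.0, 50.0, 10.0), (0.0, 0.0, 30.0, 30.0)]       # h_1, h_2

x3 = (6.6828, 5.3172)      # first-stage solution of iteration 3
theta3 = -156.97994        # value compared against w_3 in the text

e3, E3 = optimality_cut(probs, duals, rhs, T)
w3 = e3 - dot(E3, x3)
needs_cut = w3 > theta3 + 1e-6   # True means the cut E3.x + theta >= e3 is added

print(e3, E3, w3, needs_cut)
```

With these inputs the sketch should give <math>e_3 = -21.04</math> and <math>E_3 = (11.52, 9.6)</math> up to floating-point rounding, matching the cut above.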

Iteration 4:

Step 1. The master program is

- <math>\begin{align} z = \min\{10x_1 &+ 15x_2 + \theta \mid x_1 + x_2 \le 12,\ x_1 \ge 4,\ x_2 \ge 2, \\ &8.352x_1 + 18.048x_2 + \theta \ge -5.2,\ 21.12x_1 + \theta \ge -15.84, \\ &11.52x_1 + 9.6x_2 + \theta \ge -21.04\} \end{align}</math>

- Result is <math>z = -8.895,\ x^4 = (4, 3.375)^T,\ \theta^4 = -99.52</math>.

Step 2. No feasibility cut is needed.

Step 3.

- For <math>\xi = \xi_1</math>, solve:

- <math>w = \min \{-2.4y_1 - 2.8y_2 \mid 6y_1 + 10y_2 \le 240,\ 8y_1 + 5y_2 \le 270,\ 0\le y_1 \le 50,\ 0\le y_2 \le 10 \}</math>

- The solution is <math>w_1 = -88.8,\ y^T = (30, 6),\ \pi_1^T = (-0.208, -0.144, 0, 0)</math>

- For <math>\xi = \xi_2</math>, solve:

- <math>w = \min \{-2.8y_1 - 3.2y_2 \mid 6y_1 + 10y_2 \le 240,\ 8y_1 + 5y_2 \le 270,\ 0\le y_1 \le 30,\ 0\le y_2 \le 30 \}</math>

- There are multiple optimal solutions. Selecting one of them, we have

- <math>e_4 = 0</math>

- <math>E_4 = (13.344, 13.056)</math>

- Since <math>w_4 = 0 - 13.344 \cdot 4 - 13.056 \cdot 3.375 = -97.44 > -99.52</math>, add the cut

- <math>13.344x_1 + 13.056x_2 + \theta \ge 0</math>
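The two second-stage problems of this iteration are small enough to check by enumerating the vertices of the feasible region. The sketch below does that in plain Python rather than with an LP solver. It assumes the resource constraints have the form <math>6y_1 + 10y_2 \le 60x_1</math> and <math>8y_1 + 5y_2 \le 80x_2</math>, which is inferred from 240 = 60 × 4 and 270 = 80 × 3.375; the function names `solve_lp_2d` and `second_stage` are hypothetical.

```python
from itertools import combinations


def solve_lp_2d(c, A, b, tol=1e-9):
    """Minimize c.y subject to A y <= b for two variables by vertex enumeration.

    Assumes the feasible region is a non-empty bounded polygon.
    """
    best_val, best_y = None, None
    for (a1, b1), (a2, b2) in combinations(list(zip(A, b)), 2):
        det = a1[0] * a2[1] - a1[1] * a2[0]
        if abs(det) < tol:
            continue  # parallel constraints have no unique intersection
        # Cramer's rule for the intersection of the two constraint lines.
        y = ((b1 * a2[1] - a1[1] * b2) / det, (a1[0] * b2 - a2[0] * b1) / det)
        feasible = all(r[0] * y[0] + r[1] * y[1] <= bi + 1e-7 for r, bi in zip(A, b))
        if feasible:
            val = c[0] * y[0] + c[1] * y[1]
            if best_val is None or val < best_val:
                best_val, best_y = val, y
    return best_val, best_y


def second_stage(x, q, d):
    """Recourse problem for first-stage decision x, costs q and demand bounds d."""
    A = [(6, 10), (8, 5), (1, 0), (0, 1), (-1, 0), (0, -1)]
    b = [60 * x[0], 80 * x[1], d[0], d[1], 0, 0]   # 60 and 80 are inferred coefficients
    return solve_lp_2d(q, A, b)


x4, theta4 = (4.0, 3.375), -99.52
scenarios = [(0.4, (-2.4, -2.8), (50, 10)), (0.6, (-2.8, -3.2), (30, 30))]

values = [second_stage(x4, q, d)[0] for _, q, d in scenarios]
expected = sum(p * v for (p, _, _), v in zip(scenarios, values))

print(values, expected, expected > theta4)
```

The expected recourse cost computed this way should agree with <math>e_4 - E_4 x^4 = -97.44</math>, which exceeds <math>\theta^4</math>, so a new cut is required. Vertex enumeration only scales to toy problems; it is used here so the check needs nothing beyond the standard library.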

Iteration 5:

Step 1. The master program is

- <math>\begin{align} z = \min\{10x_1 &+ 15x_2 + \theta \mid x_1 + x_2 \le 12,\ x_1 \ge 4,\ x_2 \ge 2, \\ &8.352x_1 + 18.048x_2 + \theta \ge -5.2,\ 21.12x_1 + \theta \ge -15.84, \\ &11.52x_1 + 9.6x_2 + \theta \ge -21.04,\ 13.344x_1 + 13.056x_2 + \theta \ge 0\} \end{align}</math>

- Result is <math>z = -8.5583,\ x^5 = (4.6667, 3.625)^T,\ \theta^5 = -109.6</math>.
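As a sanity check on the reported master solution, one can substitute it into every constraint and look at the slacks. The sketch below does this in plain Python. It assumes <math>x_1 = 14/3</math> is the exact value behind the rounded 4.6667 (consistent with 60 × 4.6667 = 280 in the next step); the variable names are hypothetical.

```python
# Reported solution of the iteration-5 master program (x1 = 14/3 is an assumption).
x5 = (14 / 3, 3.625)
theta5 = -109.6

# Optimality cuts written as E.x + theta >= e, stored as (E, e).
cuts = [
    ((8.352, 18.048), -5.2),
    ((21.12, 0.0), -15.84),
    ((11.52, 9.6), -21.04),
    ((13.344, 13.056), 0.0),
]

# Slack of each cut: zero means the cut is active at the reported point.
slacks = [E[0] * x5[0] + E[1] * x5[1] + theta5 - e for E, e in cuts]
active = [i for i, s in enumerate(slacks) if abs(s) < 1e-6]

# First-stage constraints and objective value.
first_stage_ok = x5[0] + x5[1] <= 12 and x5[0] >= 4 and x5[1] >= 2
z = 10 * x5[0] + 15 * x5[1] + theta5

print(slacks, active, first_stage_ok, z)
```

Under this assumption three of the four cuts hold with equality, which is what one expects at a vertex of a program in the three variables <math>x_1, x_2, \theta</math>, and the objective evaluates to roughly <math>-8.5583</math>.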

Step 2. No feasibility cut is needed.

Step 3.

- For <math>\xi = \xi_1</math>, solve:

- <math>w = \min \{-2.4y_1 - 2.8y_2 \mid 6y_1 + 10y_2 \le 280,\ 8y_1 + 5y_2 \le 290,\ 0\le y_1 \le 50,\ 0\le y_2 \le 10 \}</math>

- The solution is <math>w_1 = -100,\ y^T = (30, 10),\ \pi_1^T = (0, -0.3, 0, -1.3)</math>

- For <math>\xi = \xi_2</math>, solve:

- <math>w = \min \{-2.8y_1 - 3.2y_2 \mid 6y_1 + 10y_2 \le 280,\ 8y_1 + 5y_2 \le 290,\ 0\le y_1 \le 30,\ 0\le y_2 \le 30 \}</math>

- The solution is <math>w_2 = -116,\ y^T = (30, 10),\ \pi_2^T = (-0.232, -0.176, 0, 0)</math>

- Thus,

- <math>e_5 = 0.4\cdot \pi_1^T \cdot h_1 + 0.6\cdot \pi_2^T \cdot h_2 = 0.4 \times (-13) + 0.6 \times (0) = -5.2</math>

- <math>E_5 = 0.4\cdot \pi_1^T \cdot T + 0.6\cdot \pi_2^T \cdot T = 0.4 \times (0, 24) + 0.6 \times (13.92, 14.08) = (8.352, 18.048)</math>

- Since <math>w_5 = -5.2 - 8.352 \cdot 4.6667 - 18.048 \cdot 3.625 = -109.6 = \theta^5</math>, stop.

- <math>x^5 = (4.6667, 3.625)^T</math> is the optimal solution.

Applications

Apart from the process industry, two-stage linear stochastic programming finds application in other fields as well. For instance, the optimal design of distributed energy systems must account for several sources of uncertainty: the demand and supply of energy, economic factors such as unit investment cost and energy price, and technical parameters such as efficiency. Zhou et al. [8] developed a two-stage stochastic programming model for the optimal design of a distributed energy system with a stage decomposition-based solution strategy. The authors accounted for both demand and supply uncertainty. They used a genetic algorithm on the first-stage variables and the Monte Carlo method on the second-stage variables.

Another application of two-stage linear stochastic programming is the bike-sharing system (BSS). The system needs to ensure that bikes are available at all stations according to the given demand. To maintain this balance, redistribution trucks transfer bikes from stations with a surplus to stations with a deficit. This is referred to as the bike repositioning problem (BRP). [9] A further challenge in the BRP is the holding cost of the depot. Bikes can be damaged or lost while being transferred from one station to another, which can lead to an imbalance between demand and supply in the BSS. Bikes that cannot be balanced among the stations of the BSS are placed back at the depot, which increases the depot's holding cost. To address these concerns, researchers developed a two-stage stochastic program that captures the uncertainty in redistribution demand within the system. [9] In the first stage, before the redistribution demand is realized, the routing decision is made. In the second stage, decisions regarding loading and unloading at each station and the depot are made. Holding cost is incorporated into the model, so that its primary objective is to determine the best routes for the repositioning truck and the optimal loading and unloading quantities at each station and the depot. The model minimizes the expected total of the transportation cost, the penalty cost of all stations, and the holding cost of the depot.

Conclusion

From the previous examples, it is evident that two-stage linear stochastic programming is applicable across many areas, such as the petrochemical and pharmaceutical industries, carbon capture, and energy storage, among others. [1] Stochastic programming can primarily be used to model two types of uncertainty: 1) exogenous uncertainty, which is the most widely considered, and 2) endogenous uncertainty, where the realization of the uncertainty depends on the decision taken. The main challenge in stochastic programming is that the class of problems that can be solved is limited. An ‘ideal’ problem would be a multi-stage stochastic mixed-integer nonlinear program under both exogenous and endogenous uncertainty with an arbitrary probability distribution that is stagewise dependent. [1] However, current algorithms and computational resources are still too limited to solve this ‘ideal’ problem.

References

1. Li Can, Grossmann Ignacio E. “A Review of Stochastic Programming Methods for Optimization of Process Systems Under Uncertainty,” Frontiers in Chemical Engineering 2021, Vol. 2

2. J. Schif "The L Shaped Algorithm"

3. Dantzig, George B. “Linear Programming under Uncertainty.” Management Science, vol. 1, no. 3-4, 1955, pp. 197–206.

4. Chu, Yunfei, and Fengqi You. “Integration of Scheduling and Dynamic Optimization of Batch Processes under Uncertainty: Two-Stage Stochastic Programming Approach and Enhanced Generalized Benders Decomposition Algorithm.” Industrial & Engineering Chemistry Research, vol. 52, no. 47, 2013, pp. 16851–16869.

5. Barik, S.K., Biswal, M.P. & Chakravarty, D. "Two-stage stochastic programming problems involving interval discrete random variables." OPSEARCH 49, 280–298 (2012).

6. Soares, Joao, et al. “Two-Stage Stochastic Model Using Benders’ Decomposition for Large-Scale Energy Resource Management in Smart Grids.” IEEE Transactions on Industry Applications, vol. 53, no. 6, 2017, pp. 5905–5914.

7. Birge, John R., and François Louveaux. "Introduction to Stochastic Programming." Springer, 2011.

8. Zhe Zhou, Jianyun Zhang, Pei Liu, Zheng Li, Michael C. Georgiadis, Efstratios N. Pistikopoulos, “A two-stage stochastic programming model for the optimal design of distributed energy systems,” Applied Energy, Volume 103, 2013, Pages 135-144, ISSN 0306-2619.

9. Qiong Tang, Zhuo Fu, Dezhi Zhang, Hao Guo, Minyi Li, "Addressing the Bike Repositioning Problem in Bike Sharing System: A Two-Stage Stochastic Programming Model," Scientific Programming, vol. 2020, Article ID 8868892, 12 pages, 2020.