Particle swarm optimization: Difference between revisions

m (Finalize references. Edited "hyperparameters".) |

(Updated Particle Swarm Optimization (PSO) flowchart.) |

||

| (77 intermediate revisions by the same user not shown) | |||

| Line 7: | Line 7: | ||

==Introduction== | ==Introduction== | ||

Particle Swarm Optimization (PSO) is inspired by nature and groups or swarms of natural creatures. It uses multiple “particles” distributed across a solution space to slowly converge upon a global, optimized solution. This algorithm does not require calculation of the function’s gradient or slope, instead using simple weights and the contribution of the rest of the swarm to provide a “fitness” value | Particle Swarm Optimization (PSO) is inspired by nature and groups or swarms of natural creatures. It uses multiple “particles” distributed across a solution space to slowly converge upon a global, optimized solution. This algorithm does not require calculation of the function’s gradient or slope, instead using simple weights and the contribution of the rest of the swarm to provide a “fitness” value | ||

<ref> Vyas, Ajay Kumar (2022) Artificial Intelligence for Renewable Energy Systems, John Wiley & Sons </ref> | <ref> Vyas, Ajay Kumar (2022) Artificial Intelligence for Renewable Energy Systems, John Wiley & Sons </ref> | ||

to leading to a technique dependent only on simple calculations and a number of starting points. | to leading to a technique dependent only on simple calculations and a number of starting points. | ||

The PSO algorithm was formulated and initially presented by Kennedy and Eberhart specifically to find solutions to discontinuous, but non-differentiable functions. The concept was presented at the IEEE conference on neural networks in 1995 | The PSO algorithm was formulated and initially presented by Kennedy and Eberhart specifically to find solutions to discontinuous, but non-differentiable functions. The concept was presented at the IEEE conference on neural networks in 1995 | ||

<ref name=Kennedy1> Kennedy, J. and Eberhart, R. (1995) Particle Swarm Optimization, Proceedings of the IEEE International Conference on Neural Networks</ref>. | <ref name=Kennedy1> Kennedy, J. and Eberhart, R. (1995) Particle Swarm Optimization, ''Proceedings of the IEEE International Conference on Neural Networks'', (pp. 1942-1945), doi: 10.1109/ICNN.1995.488968 </ref>. | ||

The pair later published a book Swarm Intelligence in 2001 that further expanded on the concept | The pair later published a book Swarm Intelligence in 2001 that further expanded on the concept | ||

<ref> Kennedy, J. and Eberhart, R. (2001) Swarm Intelligence, Academic Press </ref>. | <ref> Kennedy, J. and Eberhart, R. (2001) Swarm Intelligence 1st ed., Academic Press </ref>. | ||

Others have summarized the algorithm | Others have summarized the algorithm | ||

<ref> Rao, Singiresu (2020) Engineering Optimization - Theory and Practice (5th Edition), John Wiley and Sons </ref> | <ref> Rao, Singiresu (2020) Engineering Optimization - Theory and Practice (5th Edition), John Wiley and Sons </ref> | ||

<ref> Belyadi, Hoss and Haghighat, Alizera (2021) Machine Learning Guides for Oil and Gas Using Python - A Step-by-Step Breakdown with Data, Algorithms, Codes, and Applications, Elsevier </ref> | <ref> Belyadi, Hoss and Haghighat, Alizera (2021) Machine Learning Guides for Oil and Gas Using Python - A Step-by-Step Breakdown with Data, Algorithms, Codes, and Applications, Elsevier </ref> | ||

<ref name=MIT> Hassan, Rania (2004) Particle Swarm Optimization: Methods and Applications, https://dspace.mit.edu/bitstream/handle/1721.1/68163/16-888-spring-2004/contents/lecture-notes/l13_msdo_pso.pdf </ref>. | <ref name=MIT> Hassan, Rania (2004) Particle Swarm Optimization: Methods and Applications, Retrieved 2024 from https://dspace.mit.edu/bitstream/handle/1721.1/68163/16-888-spring-2004/contents/lecture-notes/l13_msdo_pso.pdf </ref>. | ||

This algorithm does not leverage formal methodology or mathematical rigor to identify an ideal point but instead uses a heuristic approach to explore a wide range of starting locations and conditions. These features allow the PSO algorithm to efficiently optimize complex functions. | This algorithm does not leverage formal methodology or mathematical rigor to identify an ideal point but instead uses a heuristic approach to explore a wide range of starting locations and conditions. These features allow the PSO algorithm to efficiently optimize complex functions. | ||

The biological analogy for a PSO algorithm is a flock of birds, a school of fish, or a colony of ants trying to find a food resource. Each animal, or particle, is an independent entity that is conducting its own search routine for an optimal result. However, the particles will also be influenced and guided by motions of the larger swarm. The results any particle has already seen, as well as the influence of the larger swarm helps all the particles converge to an optimal solution. | The biological analogy for a PSO algorithm is a flock of birds, a school of fish, or a colony of ants trying to find a food resource. Each animal, or particle, is an independent entity that is conducting its own search routine for an optimal result. However, the particles will also be influenced and guided by motions of the larger swarm. The results any particle has already seen, as well as the influence of the larger swarm helps all the particles converge to an optimal solution. | ||

In a more technical dissection, a PSO algorithm must be initially set-up with how many particles and iterations the user wants to use. More particles improve the convergence likelihood and help ensure the global optimum can be found due to a more complete search at the cost of | In a more technical dissection, a PSO algorithm must be initially set-up with how many particles and iterations the user wants to use. More particles improve the convergence likelihood and help ensure the global optimum can be found due to a more complete search at the cost of computing resources required. The PSO algorithm allows a user to set different “weights”, step sizes, and speeds for the particles to control how influenced each particle is by its past points (cognitive) and by the other nearby points (social) in the swarm and how large of steps will be taken. These weights allow algorithm adjustment to tailor the performance and computational requirements. | ||

The goals of studying this topic are to learn more about an algorithm capable of solving nondifferentiable functions that are relevant to modern problems. Many optimization algorithms use the gradient function | The goals of studying this topic are to learn more about an algorithm capable of solving nondifferentiable functions that are relevant to modern problems. Many optimization algorithms use the gradient function, so it is interesting to see an algorithm that does not require gradient computations. Additionally, the algorithm is still in active use in many fields | ||

<ref name=MIT/> | <ref name=MIT/> | ||

<ref> Poli, Richard (2008) Analysis of the Publications on the Applications of Particle Swarm Optimization, Journal of Artificial Evolution and Applications </ref>. | <ref> Poli, Richard (2008) Analysis of the Publications on the Applications of Particle Swarm Optimization, ''Journal of Artificial Evolution and Applications'' (pp. 685175-685185), doi: https://doi.org/10.1155/2008/685175 </ref>. | ||

PSO is regularly used to solve optimization problems in the gas and oil industry, antenna design | PSO is regularly used to solve optimization problems in the gas and oil industry, antenna design | ||

<ref> Jin, Nanbo and Rahmet-Samii, Yahya (2008) Particle Swarm Optimization for Antenna Designs in Engineering Electromagnetics, Journal of Artificial Evolution and Applications </ref>, | <ref> Jin, Nanbo and Rahmet-Samii, Yahya (2008) Particle Swarm Optimization for Antenna Designs in Engineering Electromagnetics, ''Journal of Artificial Evolution and Applications'' (pp. 728929-728939), doi: https://doi.org/10.1155/2008/728929</ref>, | ||

and electric power distribution | and electric power distribution | ||

<ref> Sharmeela, C. et al (2022) IoT, Machine Learning and Blockchain Technologies for Renewable Energy and Modern Hybrid Power Systems, River Publishers </ref>. | <ref> Sharmeela, C. et al (2022) IoT, Machine Learning and Blockchain Technologies for Renewable Energy and Modern Hybrid Power Systems, River Publishers </ref>. | ||

As functions get very complex in the real-world, the PSO algorithm is still capable of performing an effective search | As functions get very complex in the real-world, the PSO algorithm is still capable of performing an effective search within very complex functions due to its heuristic and operability on non-differentiable functions. | ||

==Algorithm Discussion== | ==Algorithm Discussion== | ||

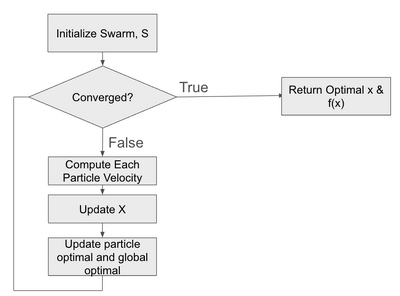

The structure of the algorithm is simple. The algorithm seeks to find an optimum of an objective function, f(x). A set of particles, X with members x X , forming a swarm, is initialized randomly. The algorithm runs for a number of iterations. At each iteration, for each particle in the swarm, each particle’s position is updated to be | The structure of the algorithm is simple. The algorithm seeks to find an optimum of an objective function, <math> f(x) </math>. A set of particles, <math> X </math> with members <math> x \in X </math>, forming a swarm, is initialized randomly. The algorithm runs for a number of iterations. At each iteration, for each particle in the swarm, each particle’s position is updated to be <math> x_{ i+1, p} = x_{i, p} + v_{i, p} </math>. Subscript <math> i </math> indicates the iteration of the algorithm. Subscript <math> p </math> indicates the index of the particle in the swarm. Both the optimal solution for a particular particle, <math> x_{best, p} </math>, and a global optimum, <math> x_{best, g} </math> are tracked. <math> f(x_{best, p}) </math> is the best solution to the objective function that a particular particle, <math> p </math>, has experienced over all iterations. <math> f(x_{best, g}) </math> is the best solution to the objective function for all particles, at all iterations. The algorithm is halted when either a fixed number of iterations is reached or a stopping criteria is reached. A typical stopping criteria is when the change between iterations is below a user-set threshold, demonstrating that the particles are not moving from the identified solution point. The algorithm’s control flow is shown in the following flow chart. | ||

[[File:PSO Flow.png|center|frameless|400x400px|Particle Swarm Optimization Algorithm Flow Chart]] | |||

<div style="text-align:center;font-size:1em;"> | |||

Figure 1: Algorithm Flow Chart | |||

</div> | |||

A velocity, <math> v_{i, p} </math> is independently computed for each particle <math> x_{i,p} </math>. The velocity is the result of the sum of the preceding velocity, a cognitive component and a social component. The cognitive component is <math> rand() *w_c* (x_{best, p} - x_{i, d}) </math> and pulls the solution towards the best point observed by this particle. The social component is <math> rand() *w_s* (x_{best, g} - x_{i, d}) </math> and pulls the solution towards the best point observed across the entire swarm. Each of the social component and cognitive component are weighed by a factor. The state that must be tracked across loop iterations is; <math> x_{i,p}</math>, <math>x_{best, p}</math>,<math> v_{i, p} </math> for each particle and <math> x_{best, g}</math>. | |||

A velocity, | |||

The convergence of the algorithm can be checked a few different ways. The original papers were based on training neural networks, so the optimization was halted when the neural network was sufficiently able to make predictions | The convergence of the algorithm can be checked in a few different ways. The original papers were based on training neural networks, so the optimization was halted when the neural network was sufficiently able to make predictions | ||

<ref name=Kennedy1/> | <ref name=Kennedy1/> | ||

<ref name=Kennedy2> Kennedy, J. (1997) The Particle Swarm: Social Adaptation of Knowledge, Proceedings of the IEEE International Conference on Evolutionary Computation</ref>. | <ref name=Kennedy2> Kennedy, J. (1997) The Particle Swarm: Social Adaptation of Knowledge, ''Proceedings of the IEEE International Conference on Evolutionary Computation'' (pp. 303-308), doi: 10.1109/ICEC.1997.592326</ref>. | ||

This approach is flawed because the designer of the algorithm used a priori knowledge of how good the neural network will perform. More generally, this approach incorporates application specific logic to stop when the optimal solution is good enough. Alternatively, the algorithm can be terminated following a predetermined number of iterations. Another approach is to stop when the solution's improvement at a given iteration stops | This approach is flawed because the designer of the algorithm used a priori knowledge of how good the neural network will perform. More generally, this approach incorporates application specific logic to stop when the optimal solution is good enough. Alternatively, the algorithm can be terminated following a predetermined number of iterations. Another approach is to stop when the solution's improvement at a given iteration stops | ||

<ref> MathWorks (2024) Particle Swarm Options, https://www.mathworks.com/help/gads/particle-swarm-options.html </ref>. | <ref> MathWorks (2024) Particle Swarm Options, Retrieved 2024 from https://www.mathworks.com/help/gads/particle-swarm-options.html </ref>. | ||

The pseudocode for the PSO algorithm attempting to minimize a solution follows. The algorithm can be modified to search for a maximum by changing the initialization of <math> x_{best, p} </math> and <math> x_{best, g} </math> along with their updates. | |||

<div style="border-style:solid;border-width:2px;background:#F5F5F5;font-size:1em;"> | |||

SWARM is initialized randomly | SWARM is initialized randomly | ||

<math> v_{particle} </math> initialized to 0 | |||

<math> x_{best, p} </math> initialized to <math> \infty </math> | |||

<math> x_{best, g} </math> initialized to <math> \infty </math> | |||

For i in MAX_ITERATIONS: | For i in MAX_ITERATIONS: | ||

<div style="text-indent:5em;"> | |||

For particle in SWARM: | |||

<div style="text-indent:10em;"> | |||

<math> cognitive := rand() *w_c * (x_{best, p}- X_{id}) </math> | |||

<math> social := rand() *w_s* (x_{best, g} - X_{id}) </math> | |||

<math> v_{particle} = v_{particle} + cognitive + social </math> | |||

<math> x_{i+1} = x_{i} + v_{i} </math> | |||

if <math> f(x_{i+1}) < f(x_{best, p}) </math> | |||

<div style="text-indent:15em;"> | |||

<math> x_{best, p}= x_{i+1}</math> | |||

</div> | |||

if <math> f(x_{i+1}) < f(P_{ig} ) </math> | |||

<div style="text-indent:15em;"> | |||

<math>x_{best, g}= x_{i+1} </math> | |||

</div> | |||

</div></div> | |||

PRINT “Local optimal at <math> x_{best, g} </math>” | |||

</div> | |||

<div style="text-align:center;font-size:1em;"> | |||

Figure 2: Algorithm Pseudocode | Figure 2: Algorithm Pseudocode | ||

</div> | |||

The algorithm has a few hyperparameters including; number of particles in the swarm, weights of the cognitive update on the velocity, weights on the social update on the velocity. The Pymoo Python implementation suggests a swarm size of 25, velocity weight of 0.8, a social update weight of 2.0 and a cognitive update of 2.0 | The algorithm has a few hyperparameters including; number of particles in the swarm, weights of the cognitive update on the velocity, weights on the social update on the velocity. The Pymoo Python implementation suggests a swarm size of 25, velocity weight of 0.8, a social update weight of 2.0 and a cognitive update of 2.0 | ||

<ref name=pymoo> Blank, Justin ( | <ref name=pymoo> Blank, Justin (2020) Multi-Objective Optimization in Python, Retrieved 2024 from https://pymoo.org/algorithms/soo/pso.html</ref>. | ||

Practitioners will need to adjust parameters and determine how their problem reacts to modifications of the hyperparameters. Heuristics may be used to adjust the various weights between iterations of the algorithm. Algorithms that adjust the hyperparameters based on heuristics are called Adaptive Particle Swarm Optimization (APSO) | Practitioners will need to adjust parameters and determine how their problem reacts to modifications of the hyperparameters. Heuristics may be used to adjust the various weights between iterations of the algorithm. Algorithms that adjust the hyperparameters based on heuristics are called Adaptive Particle Swarm Optimization (APSO) | ||

<ref> Zhan, Z. et al (2009) Adaptive Particle Swarm Optimization, IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics) </ref>. | <ref> Zhan, Z. et al (2009) Adaptive Particle Swarm Optimization, ''IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics)'', vol. 39, no. 6, (pp.1362-1381) doi: 10.1109/TSMCB.2009.2015956</ref>. | ||

PSO makes very few assumptions. The algorithm can be trivially adopted to work with real, integer or mixed functions. The algorithm does not rely on gradients or hessians, so there are no assumptions or requirements on the function being continuous or differentiable. However, the lack of assumptions comes at a cost, there is no proof that the algorithm will find an optimal solution. One of the original papers by Kennedy in 1997 | PSO makes very few assumptions. The algorithm can be trivially adopted to work with real, integer or mixed functions. The algorithm does not rely on gradients or hessians, so there are no assumptions or requirements on the function being continuous or differentiable. However, the lack of assumptions comes at a cost, there is no proof that the algorithm will find an optimal solution. One of the original papers by Kennedy in 1997 | ||

<ref name=Kennedy2/> | <ref name=Kennedy2/> | ||

indicated a tendency for the optimization to get stuck in local minimum. Adjustment of the algorithm’s hyperparameters is a performance vs robustness trade that may have avoided sticking in a local optimum. | indicated a tendency for the optimization to get stuck in local minimum. Adjustment of the algorithm’s hyperparameters is a performance vs robustness trade that may have avoided sticking in a local optimum. | ||

==Numerical Example== | ==Numerical Example== | ||

| Line 81: | Line 104: | ||

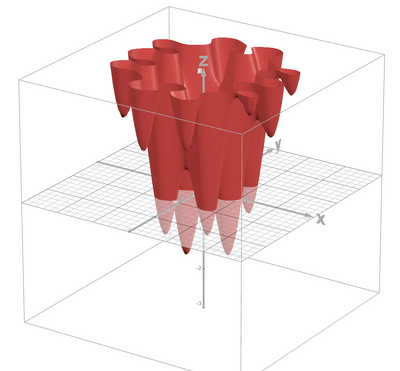

Given a non-convex function with multiple local optima: | Given a non-convex function with multiple local optima: | ||

<div style="text-align:center;font-size:1em;"> | |||

<math> min f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \, | <math> min f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \, | ||

</math> | </math> | ||

[[File:3D ObjectiveFunction.png|400px|frameless|3D Graph of Objective Function]] | |||

Figure 3: 3D Graph of Function: <math> f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \, | |||

</math> | |||

[[File:2D Graph of 3D Objective Function.png|400px|frameless|2D Graph of Objective Function]] | |||

Figure 4: 2D Graph of Function: <math> f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \, | |||

</math> | |||

</div> | |||

===Step 1: Define the Objective Function === | ===Step 1: Define the Objective Function === | ||

<math> f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \, | <div style="text-align:center;font-size:1em;"> | ||

Objective Function: <math> f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \, | |||

</math> | </math> | ||

</div> | |||

===Step 2: Define Key Variables === | ===Step 2: Define Key Variables === | ||

{| class="wikitable" | |||

|- | |||

! ''Symbol'' | |||

! style="width: 300px;" | Description | |||

! style="width: 80px;" | Value | |||

! style="width: 300px;" | Additional Notes | |||

|- | |||

! P | |||

| style="text-align: center;" | Total Number of Particles used to search the function | |||

| style="text-align: center;" | 25 | |||

| style="text-align: center;" | Larger number means greater chance of finding the global optimum, but larger computational load | |||

|- | |||

! <math> r_1 \,</math> | |||

| style="text-align: center;" | random number between 0 and 1 | |||

| style="text-align: center;" | (0,1) | |||

| style="text-align: center;" | to be randomly generated at each evaluation | |||

|- | |||

! <math> r_2 \,</math> | |||

| style="text-align: center;" | random number between 0 and 1 | |||

| style="text-align: center;" | (0,1) | |||

| style="text-align: center;" | to be randomly generated at each evaluation | |||

|- | |||

! <math> w \,</math> | |||

| style="text-align: center;" | Inertia Weight Constant (0,1) | |||

| style="text-align: center;" | 0.7 | |||

| style="text-align: center;" | | |||

|- | |||

! <math> c_1 \,</math> | |||

| style="text-align: center;" | Cognitive Coefficient | |||

(Independence, prioritize the self) | |||

| style="text-align: center;" | 0.1* | |||

| style="text-align: center;" | recommend <math> c_1 \cong c_2 \,</math> & sufficiently low value for slower smoother operation | |||

|- | |||

! <math> c_2 \,</math> | |||

| style="text-align: center;" | Social Coefficient | |||

(Codependence, prioritize the group) | |||

| style="text-align: center;" | 0.15* | |||

| style="text-align: center;" | recommend <math> c_1 \cong c_2 \,</math> & sufficiently low value for slower smoother operation | |||

|} | |||

*The algorithm is highly sensitive to c1 and c2. Recommend referencing the search space and using 1/30 to 1/60 of the total range. Ex: target search range is -3 to 3 by -3 to 3, use a value 0.1 to 0.2 to prevent “overshooting” and oscillations of the individual particles. | *The algorithm is highly sensitive to c1 and c2. Recommend referencing the search space and using 1/30 to 1/60 of the total range. Ex: target search range is -3 to 3 by -3 to 3, use a value 0.1 to 0.2 to prevent “overshooting” and oscillations of the individual particles. | ||

===Step 3: Define Stopping Criteria === | ===Step 3: Define Stopping Criteria === | ||

For this example | For this example select a maximum number of iterations (t): | ||

<div style="text-align:center;font-size:1em;"> | |||

<math> t = 50 \, | <math> t = 50 \, | ||

</math> | </math> | ||

</div> | |||

It is important to note that there are alternative stopping criteria such as checking for convergence of the particles - such as a minimum delta between points or using a Confidence Interval on the X and Y coordinates as your threshold. This could lead to more, or less, iterations depending upon what threshold is used. Care should be taken to ensure the stopping criteria can actually be met. This wiki does not provide an example of this methodology at this point in time. | It is important to note that there are alternative stopping criteria such as checking for convergence of the particles - such as a minimum delta between points or using a Confidence Interval on the X and Y coordinates as your threshold. This could lead to more, or less, iterations depending upon what threshold is used. Care should be taken to ensure the stopping criteria can actually be met. This wiki does not provide an example of this methodology at this point in time. | ||

===Step 4: Setup Particle Data=== | ===Step 4: Setup Particle Data=== | ||

Each particle has three main properties | |||

===Step 5: Evaluate at | *Position of particle <math>i \,</math>, at iteration <math>t\,</math>, denoted as <math>X^i (t)\,</math> where: | ||

<div style="text-align:center;font-size:1em;"> | |||

<math>X^i (t) = (x^i (t), y^i (t)) \,</math> or <math>\begin{bmatrix} x^i(t) \\ y^i(t) \end{bmatrix}</math> | |||

</div> | |||

*Velocity Vector denoted as: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math>V^i (t) = (v_x^i(t), v_y^i(t))\,</math> or <math>\begin{bmatrix} V_x^i(t) \\ V_y^i(t) \end{bmatrix}</math> | |||

</div> | |||

*Objective Function Value of: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math>f(X^i (t))=f(x^i (t),y^i (t))\,</math> | |||

</div> | |||

Where <math>P\,</math> equals total number of particles, cumulatively these would be represented as: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math>X^i (t) = [X^0 (t), \cdots, X^{P-1} (t)] = \begin{bmatrix} x^0(t), & \cdots , & x^{P-1}(t) \\ y^0(t), & \cdots , & y^{P-1}(t) \end{bmatrix}</math> | |||

<math>V^i (t) = [V^0 (t), \cdots, V^{P-1} (t)] = \begin{bmatrix} V_x^0(t), & \cdots , & V_x^{P-1}(t) \\ V_y^0(t), & \cdots , & V_y^{P-1}(t) \end{bmatrix}</math> | |||

<math>f(X^i (t))=[f(X^0 (t)), \cdots , f(X^{P-1}] (t))]=[f(x^0 (t),y^0 (t)), \cdots,f(x^{P-1} (t),y^{P-1} (t))\,</math> | |||

</div> | |||

This wiki uses <math> X^{P-1} </math> not <math> X^P </math> due to starting at <math> X^0 </math> not <math> X^1 </math> | |||

Important Note <math>X^i (t)\,</math> only tracks the current iteration of particle <math>i\,</math>, not all values of a particle over <math>m\,</math> iterations: | |||

<div style="text-align:center;font-size:0.7em;"> | |||

<math>X^7(t) \neq \begin{bmatrix} x^7(0), & \cdots , & x^7(m) \\ y^7(0), & \cdots , & y^7(m) \end{bmatrix}</math> and <math> V^7(t) \neq \begin{bmatrix} V_x^7(0), & \cdots , & V_x^7(m) \\ V_y^7(0), & \cdots , & V_y^7(m) \end{bmatrix}</math> and <math> f(X^7(t)) \neq [f(x^7(0),y^7(0), \cdots, f(x^7(m),y^7(m)] </math> | |||

</div> | |||

This wiki guide will use randomly generated initialization for particle position and velocity. There are alternative initialization methods available that are not covered in this guide such as using a set of points in a grid pattern. | |||

For <math> X^i(0) \,</math> and <math> V^i(0) \, </math> , define initialization data for all particles given and define a range to search within: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> X^i(t) = (x^i(t),y^i(t)) \,</math> | |||

<math> -3 \le x \le 3 </math> | |||

<math> -3 \le y \le 3 </math> | |||

</div> | |||

To ensure an even distribution and less initial clustering of the particles, this example will use a uniform distribution to create surrogate data to initialize the position. Depending on how the code is set up, scaling and offset factors may be required. | |||

This example will use a normal distribution to create surrogate data to initialize the Velocity vector. Depending how the code is set up, a scaling factor may be required. | |||

Example Particle Data, Particle # 7: | |||

*Particle Position Coordinates at time (<math> t </math>) : | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> X^i(t) = \begin{bmatrix} x^i(t) \\ y^i(t) \end{bmatrix} \rightarrow X^7(0) = \begin{bmatrix} x^7(0) \\ y^7(0) \end{bmatrix} = \begin{bmatrix} 2.1970 \\ -2.6096 \end{bmatrix}</math> | |||

</div> | |||

*Particle Velocity Vector at time (<math> t </math>) : | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> V^i(t) = \begin{bmatrix} V_x^i(t) \\ V_y^i(t) \end{bmatrix} \rightarrow V^7(0) = \begin{bmatrix} V_x^7(0) \\ V_y^7(0) \end{bmatrix} = \begin{bmatrix} 0.1057 \\ -0.0035 \end{bmatrix} </math> | |||

</div> | |||

*Particle Objective Value at time (<math> t </math>) : | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> f(X^i(t)) = f(x^i(t),y^i(t)) \rightarrow f(X^7(0)) = f(x^7(0),y^7(0)) = 10.4267 </math> | |||

</div> | |||

===Step 5: Evaluate Function at Positions === | |||

For each particle, evaluate the objective function at their position: | |||

Objective Function: <math> f(x,y) = sin(5x+1)+cos(7y-3)+x^2+y^2 \,</math> | |||

From Step 2, the defined number of particles is <math> P = 25 \,</math> | |||

<div style="text-align:center;font-size:1em;"> | |||

<math>f(X^i(0))=\begin{bmatrix} f(X^0(0)) \\ \vdots \\ f(X^{P-1}(0) \end{bmatrix} =\begin{bmatrix} f(X^0(0)) \\ \vdots \\ f(X^{24}(0) \end{bmatrix} = \begin{bmatrix} f(x^0(0),y^0(0)) \\ \vdots \\ f(x^{24}(0),y^{24}(0)) \end{bmatrix} =\begin{bmatrix} f(-0.4914, 0.2226) \\ \vdots \\ f(-0.4875, 0.1907) \end{bmatrix} =\begin{bmatrix} -1.6941 \\ \vdots \\ -1.7126 \end{bmatrix} </math> | |||

</div> | |||

==== Step 5.1 - Calculate Local Optima ==== | |||

For each particle, determine the best value in its own history. This will be called "Personal Best Objective Function" and notated as <math> pbo^i \,</math>. At initialization, there is only one value. | |||

In effect the program is tracking the position and value of each particle through all iterations and choosing the optimal value - in this example the minimum. As such, rather than tracking all values, the computational memory needs can be reduced by only tracking the optima found so far and comparing to the next iteration’s value: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> pbo^i = min[f^i(X^i(0)), \cdots, f^i(X^i(m))] \rightarrow min[f^i(X^i(t_{previous best}^i)), f^i(X^i(t))] = [f^i(X^i(t_{best}^i))] \,</math> | |||

</div> | |||

Note: <math> (t_{best}^i) </math> becomes <math> (t_{previous best}^i) </math> in the next iteration. | |||

Cumulatively this can also be written as: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> pbo = [min[f^0(X^0(t_{previous best}^0)), f^0(X^0(t))], \cdots, min[f^{P-1}(X^{P-1}(t_{previous best}^{P-1})), f^{P-1}(X^{P-1}(t))]] = \,</math> | |||

<math> pbo = [f^0(X^0(t_best^0)), \cdots , f^{P-1}(X^{P-1}(t_{best}^{P-1}))] = \,</math> | |||

<math> pbo = [-1.6941, \cdots, -1.7126] \,</math> | |||

</div> | |||

Note: Shown is at <math> t=0 </math>. All "best" values occur at <math> t=0 </math> upon initialization. | |||

The location of the optimal objective function value (<math> pbo^i </math>) at (<math> t_{best} </math>) needs to be recorded. (<math> pb^i </math>) will be used to notate the "Personal Best Location" or the positional coordinates that return the best (<math> f(X) </math>) ever explored by particle (<math> i </math>) . | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> pb^i = [X^i(0), \cdots, X^i(m)] \rightarrow [X^i(t_{best}^i)] = \begin{bmatrix} x^i(t_{best}^i) \\ y^i(t_{best}^i) \end{bmatrix} \,</math> | |||

</div> | |||

Cumulatively written as: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> pb = [X^0(t_{best}^0), \cdots, X^{P-1}(t_{best}^{P-1})] = \begin{bmatrix} x^0(t_{best}^0), & \cdots , & x^{P-1}(t_{best}^{P-1}) \\ y^0(t_{best}^0), & \cdots , & y^{P-1}(t_{best}^{P-1}) \end{bmatrix}\,</math> | |||

</div> | |||

==== Step 5.2 - Calculate Global Optima ==== | |||

Now that the personal best for all particles is known, the next step is to compare all particles to determine the best global value. The "Global Best Objective Function Value" will be notated as <math> gbo </math>, this represent the best <math> f(X) </math> explored by all particles. | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> gbo = min[pbo] = f(X(t_{best})) </math> | |||

<math> gbo = min [-1.6941, \cdots , -1.7126] </math> | |||

<math> gbo = [-1.7137] </math> | |||

</div> | |||

<math> -1.7137 </math> correlates to <math> pbo^{12} </math> or particle 12 of 24 (25 particles = particle 0 <math> (i=0) </math>, ... particle 24 <math> (i=24) </math>) | |||

The Global Best Objective Function Value was determined in the previous step, next the coordinates that achieved that value needs to be recorded. "Global Best Location" is notated as <math> gb </math> to represent the position that gives the best <math> f(X) </math> explored by all particles. | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> gb =[X(t_{best})] </math> | |||

<math> gb = pb^{12}=[X^{12}(t_{best}^{12})] =\begin{bmatrix} x^{12}(t_{best}^{12}) \\ y^{12}(t_{best}^{12}) \end{bmatrix} = \begin{bmatrix} -0.4832 \\ 0.1907 \end{bmatrix} </math> | |||

</div> | |||

===Step 6: Calculate Next Iteration Position, Velocity, Value=== | ===Step 6: Calculate Next Iteration Position, Velocity, Value=== | ||

Calculate the next iteration values by using the following rules: | |||

Position: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> X^i(t+1) = X^i(t)+V^i(t+1) = \begin{bmatrix} x^i(t)+v_x^i(t+1) \\ y^i(t)+v_y^i(t+1) \end{bmatrix} </math> | |||

</div> | |||

Velocity: | |||

Note: need to generate a random value for <math> r_1 </math> and <math> r_2 </math> at this step | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> V^i(t+1)=wV^i(t)+c_1 r_1 (pb^i - X^i(t))+ c_2 r_2 (gb-X^i(t)) </math> | |||

</div> | |||

Example: | |||

Determine <math> X^5(t+1) </math> where <math> t=3 </math>: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> X^5(4) = X^5(3)+V^5(4) = \begin{bmatrix} x^5(3)+v_x^5(4) \\ y^5(3)+v_y^5(4) \end{bmatrix}</math> | |||

</div> | |||

Requires determining <math> V^5(4) </math> first: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> V^5(4)=wV^5(3)+c_1 r_1 (pb^5 - X^5(3))+ c_2 r_2 (gb-X^5(3)) </math> | |||

</div> | |||

Define Variables: | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> w=0.8 , c_1=0.1 , c_2=0.15, r_1=0.3180, r_2=0.1100</math> | |||

<math> V^5(3) = \begin{bmatrix} 0.2024 \\ -0.0449 \end{bmatrix}, pb^5 = \begin{bmatrix} -1.8202 \\ -0.5951 \end{bmatrix}, X^5(3) = \begin{bmatrix} -1.6177 \\ 0.5502 \end{bmatrix}, gb = \begin{bmatrix} 0.78606 \\ 0.27157 \end{bmatrix} </math> | |||

</div> | |||

Solve for <math> V^5(4) </math> : | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> | |||

V^5(4) = 0.8 * \begin{bmatrix} 0.2024 \\ -0.0449 \end{bmatrix} | |||

+ 0.1 * 0.3180 * \begin{pmatrix} \begin{bmatrix} -1.8202 \\ -0.5951 \end{bmatrix} - \begin{bmatrix} 0.78606 \\ 0.27157 \end{bmatrix} \end{pmatrix} | |||

+ 0.15 * 0.1100* \begin{pmatrix} \begin{bmatrix} 0.78606 \\ 0.27157 \end{bmatrix} - \begin{bmatrix} -1.6177 \\ 0.5502 \end{bmatrix} \end{pmatrix} </math> | |||

<math> | |||

V^5(4) = 0.8 * \begin{bmatrix} 0.2024 \\ -0.0449 \end{bmatrix} | |||

+ 0.03180 * \begin{bmatrix} -2.60626 \\ 0.32353 \end{bmatrix} | |||

+ 0.0165* \begin{bmatrix} 2.40376 \\ -0.27863 \end{bmatrix} | |||

</math> | |||

<math> | |||

V^5(4) = \begin{bmatrix} 0.16192 \\ -0.03592 \end{bmatrix} | |||

+ \begin{bmatrix} -0.08288 \\ 0.01028 \end{bmatrix} | |||

+ \begin{bmatrix} 0.03966 \\ -0.0046 \end{bmatrix} | |||

</math> | |||

<math> | |||

V^5(4) = \begin{bmatrix} 0.118703 \\ -0.03023 \end{bmatrix} | |||

</math> | |||

</div> | |||

Solve for <math> X^5(4) </math> : | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> | |||

X^5(4) = X^5(3) + V^5(4) | |||

= \begin{bmatrix} -1.6177 \\ 0.5502 \end{bmatrix} | |||

+ \begin{bmatrix} 0.118703 \\ -0.03023 \end{bmatrix} | |||

</math> | |||

<math> | |||

X^5(4) | |||

= \begin{bmatrix} -1.7989 \\ 0.5199 \end{bmatrix} | |||

</math> | |||

</div> | |||

Reevaluate <math> f(X^i(t+1)) </math> : | |||

<div style="text-align:center;font-size:1em;"> | |||

<math> f(x,y)=sin(5x+1)+cos(7y-3)+x^2+y^2 </math> | |||

<math> f(X^5(4))=sin(5*(-1.7989)+1)+cos(7*(0.5199)-3)+(-1.7989)^2+(0.5199)^2 </math> | |||

<math> f(X^5(4))=3.3189 </math> | |||

</div> | |||

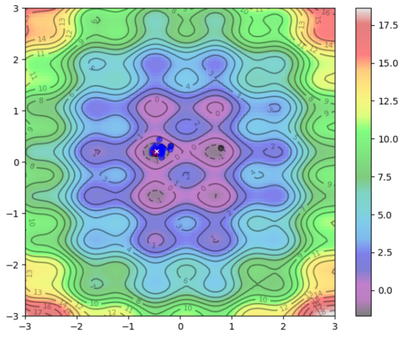

===Step 7: Iteratively Solve=== | ===Step 7: Iteratively Solve=== | ||

Calculate next optima, position, velocity, and function values for each particle. | Calculate next optima, position, velocity, and function values for each particle. | ||

Repeat until | Repeat until stopping criteria has been met. | ||

Final Results after <math> t=50 </math> iterations: | |||

<div style="text-align:center;font-size:1em;"> | |||

Global Best Value: <math> (gbo) </math> : <math> -1.7137 </math> | |||

Global Best Position: <math> (gb) </math> : <math> \begin{bmatrix} -0.4545 \\ 0.2121 \end{bmatrix} </math> | |||

</div> | |||

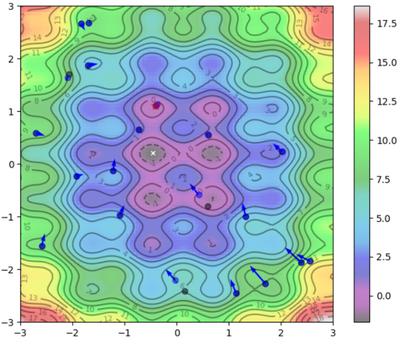

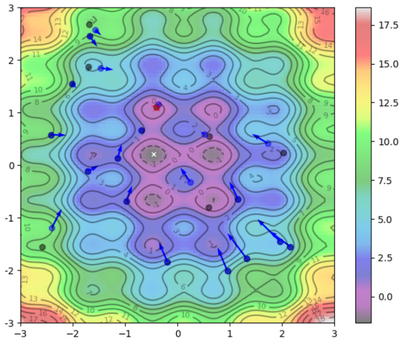

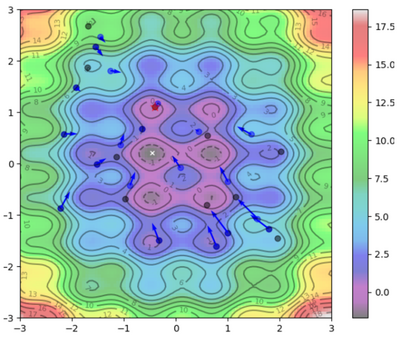

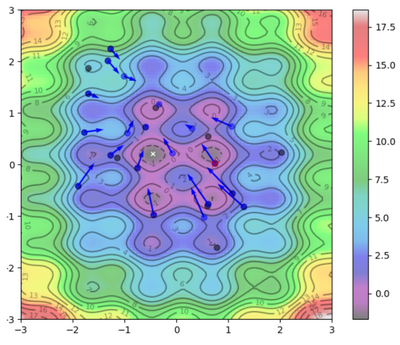

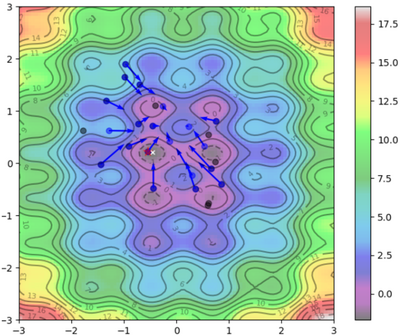

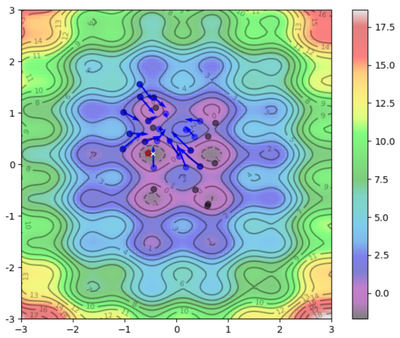

==== Visual Representation ==== | |||

To help visualize what's going on: | |||

Blue Dots = Each particle's current position | |||

Blue Arrows = Each particle's velocity | |||

Black Dots = Each particle's best position | |||

{| class="wikitable" | |||

|- | |||

! ''Iteration'' | |||

! style="width: 300px;" | Image | |||

|- | |||

! <math> 0 </math> | |||

| style="text-align: center;" | [[File:PSO Iteration0.png|400px|frameless|PSO - Iteration 0]] | |||

|- | |||

! <math> 1 </math> | |||

| style="text-align: center;" | [[File:PSO Iteration1.png|400px|frameless|PSO - Iteration 1]] | |||

|- | |||

! <math> 2 </math> | |||

| style="text-align: center;" | [[File:PSO Iteration2.png|400px|frameless|PSO - Iteration 2]] | |||

|- | |||

! <math> 3 </math> | |||

| style="text-align: center;" | [[File:PSO Iteration3.png|400px|frameless|PSO - Iteration 3]] | |||

|- | |||

! <math> 4 </math> | |||

| style="text-align: center;" | [[File:PSO Iteration4.png|400px|frameless|PSO - Iteration 4]] | |||

|- | |||

! <math> 5 </math> | |||

| style="text-align: center;" | [[File:PSO Iteration5.png|400px|frameless|PSO - Iteration 5]] | |||

|- | |||

! <math> \cdots </math> | |||

| style="text-align: center;" | <math> \cdots </math> | |||

|- | |||

! <math> 25 </math> | |||

| style="text-align: center;" |[[File:PSO Iteration25.png|400px|frameless|PSO - Iteration 25]] | |||

|} | |||

==Applications== | ==Applications== | ||

| Line 121: | Line 474: | ||

PSO was originally used to train neural networks (NN)<ref name=Kennedy1/>. Neural networks frequently have highly non-linear loss functions and it is therefore difficult to find a global optimum | PSO was originally used to train neural networks (NN)<ref name=Kennedy1/>. Neural networks frequently have highly non-linear loss functions and it is therefore difficult to find a global optimum | ||

<ref> Want, C. et al (2024) cuPSO: GPU Parallelization for Particle Swarm Optimization Algorithms, https://arxiv.org/pdf/2205.01313 </ref>. | <ref> Want, C. et al (2024) cuPSO: GPU Parallelization for Particle Swarm Optimization Algorithms, arXiv:2205.01313v2, Retrieved 2024 from https://arxiv.org/pdf/2205.01313 </ref>. | ||

Several papers have noted an improvement in NN accuracy using PSO optimization when compared to back-propagation due to the ability to avoid local optimum | Several papers have noted an improvement in NN accuracy using PSO optimization when compared to back-propagation due to the ability to avoid local optimum | ||

<ref> Gudise, V.G. and Venayagamoorthy, G.K. (2003) Comparison of particle swarm optimization and backpropagation as training algorithms for neural networks, Proceedings of the 2003 IEEE Swarm Intelligence Symposium </ref>. | <ref> Gudise, V.G. and Venayagamoorthy, G.K. (2003) Comparison of particle swarm optimization and backpropagation as training algorithms for neural networks, ''Proceedings of the 2003 IEEE Swarm Intelligence Symposium'', (pp.110-117) doi: 10.1109/SIS.2003.1202255</ref>. | ||

However, the current state of the current practice for training deep neural networks remains the back-propagation algorithm. Back-propagation is more efficient in the context of neural networks because for each particle at each iteration, there will need to be an error function computation. For example, a swarm size of 25 requires 25 times more error function evaluations | However, the current state of the current practice for training deep neural networks remains the back-propagation algorithm. Back-propagation is more efficient in the context of neural networks because for each particle at each iteration, there will need to be an error function computation. For example, a swarm size of 25 requires 25 times more error function evaluations | ||

<ref name=wihartiko> Wihartiko, F. (2018) Performance comparison of gentitic algorithms and particle swarm optimization for model integer programming bus timetabling problem, IOP Conference Series: Materials Science and Engineering </ref>. | <ref name=wihartiko> Wihartiko, F. (2018) Performance comparison of gentitic algorithms and particle swarm optimization for model integer programming bus timetabling problem, ''IOP Conference Series: Materials Science and Engineering'', doi:10.1088/1757-899X/332/1/012020 </ref>. | ||

In addition to identifying weights in the Neural Networks (training), PSO has been used in selecting | In addition to identifying weights in the Neural Networks (training), PSO has been used in selecting hyperparameters for neural networks. In these applications PSO is used to maximize the neural network’s accuracy by varying parameters including number of nodes and structure of the networks | ||

<ref> Lorenzo, Pablo et al (2017) Particle swarm optimization for | <ref> Lorenzo, Pablo et al (2017) Particle swarm optimization for hyperparameter selection in deep neural networks, ''Proceedings of the Genetic and Evolutionary Computation Conference (pp. 481-488) https://doi.org/10.1145/3071178.3071208</ref>. | ||

PSO fits this application well because this function is nonlinear and the gradient is non-continuous. | PSO fits this application well because this function is nonlinear and the gradient is non-continuous. Hyperparameters for NNs may be real, integer or binary values. | ||

PSO is most often compared to other heuristic based algorithms such as; genetic algorithms differential evolution simulated annealing. One study for a bus scheduling application did find the PSO was better than genetic algorithms with respect to the optimality of solution and run-time | PSO is most often compared to other heuristic based algorithms such as; genetic algorithms differential evolution simulated annealing. One study for a bus scheduling application did find the PSO was better than genetic algorithms with respect to the optimality of solution and run-time | ||

<ref name=wihartiko/>. | <ref name=wihartiko/>. | ||

The results in the paper are difficult to generalize, as results will be sensitive to the application as well as the algorithm’s | The results in the paper are difficult to generalize, as results will be sensitive to the application as well as the algorithm’s hyperparameters. The recommended choice of heuristic optimization algorithms remains up to the practitioner’s judgement. | ||

A number of different implementations are available as libraries. Pyswarms is a Python API | A number of different implementations are available as libraries. Pyswarms is a Python API | ||

<ref> James, Lester ( | <ref> James, Lester (2017) PySwarms, Retrieved 2024 from https://pyswarms.readthedocs.io/en/latest/ </ref> | ||

Pymoo is an alternative Python implementation | Pymoo is an alternative Python implementation | ||

<ref name=pymoo/>. | <ref name=pymoo/>. | ||

Pyswarms is a compact implementation specifically focused on PSO, whereas Pymoo is a more complete library with multiple | Pyswarms is a compact implementation specifically focused on PSO, whereas Pymoo is a more complete library with multiple optimization algorithm implementations. Matlab does have PSO implementation as part of the optimization toolbox | ||

<ref> MathWorks (2024) particleswarm, https://www.mathworks.com/help/gads/particleswarm.html </ref> | <ref> MathWorks (2024) particleswarm, Retrieved 2024 from https://www.mathworks.com/help/gads/particleswarm.html </ref> | ||

There is a 3rd party Matlab implementation available for free | There is a 3rd party Matlab implementation available for free | ||

<ref> Birge, | <ref> Birge, Brian (2024) Particle Swarm Optimization Toolbox, https://www.mathworks.com/matlabcentral/fileexchange/7506-particle-swarm-optimization-toolbox, MATLAB Central File Exchange. </ref>. | ||

However, the algorithm is simple so many prefer to implement the algorithm directly. | However, the algorithm is simple so many prefer to implement the algorithm directly. | ||

==Conclusions== | ==Conclusions== | ||

The PSO algorithm is a heuristic based optimization algorithm. The heuristics are loosely based on the behavior of a flock of seagulls. The algorithm’s strengths are in its simplicity, lack of reliance on a gradient, and robustness against getting stuck in local optimum. The drawback is that the “swarm” requires a large number of function evaluations, making the algorithm slow. Additionally, the algorithm has no guarantee of convergence. The ability to find an optimum is sensitive to starting conditions and | The PSO algorithm is a heuristic based optimization algorithm. The heuristics are loosely based on the behavior of a flock of seagulls. The algorithm’s strengths are in its simplicity, lack of reliance on a gradient, and robustness against getting stuck in local optimum. The drawback is that the “swarm” requires a large number of function evaluations, making the algorithm slow. Additionally, the algorithm has no guarantee of convergence. The ability to find an optimum is sensitive to starting conditions and hyperparameters. | ||

The algorithm is easy to get up and running with several public implementations available in the Python and Matlab programming languages. Alternatively, the algorithm is so simple that some will implement the algorithm themselves. | The algorithm is easy to get up and running with several public implementations available in the Python and Matlab programming languages. Alternatively, the algorithm is so simple that some will implement the algorithm themselves. | ||

| Line 153: | Line 506: | ||

When using the algorithm, practitioners need to select several weights that control the algorithms. A cognitive weight determines to what extent a particle’s previous optimum affects future searches. A social weight determines to what extent all particle’s global optimum affects future searches. These parameters and the swarm size need to be adjusted carefully to balance robustness against computational efficiency. | When using the algorithm, practitioners need to select several weights that control the algorithms. A cognitive weight determines to what extent a particle’s previous optimum affects future searches. A social weight determines to what extent all particle’s global optimum affects future searches. These parameters and the swarm size need to be adjusted carefully to balance robustness against computational efficiency. | ||

The PSO algorithm continues to be developed. There is research into how to adjust the algorithm’s | The PSO algorithm continues to be developed. There is research into how to adjust the algorithm’s hyperparameters at various iterations of the algorithm. These algorithms are called adaptive particle swarm optimization (APSO) and take some guesswork the algorithm. The algorithm has also been combined with genetic optimization algorithms, though the algorithms remain heuristic based and do not guarantee optimum results. | ||

PSO continues to be used in applications that are non-linear or are non-differentiable. Recent (2024) studies have used PSO for RF in optimizing the placement of terminals | PSO continues to be used in applications that are non-linear or are non-differentiable. Recent (2024) studies have used PSO for RF in optimizing the placement of terminals | ||

<ref> Urbanus Mwanzia Ngei, et al (2024) Optimal sizing and placement of STATCOM, TCSC and UPFC using a novel hybrid genetic algorithm-improved particle swarm optimization, https:// | <ref> Urbanus Mwanzia Ngei, et al (2024) Optimal sizing and placement of STATCOM, TCSC and UPFC using a novel hybrid genetic algorithm-improved particle swarm optimization, ''Heliyon'' vol. 10, issue 23, https://doi.org/10.1016/j.heliyon.2024.e40682 </ref> | ||

and for predicting landslides | and for predicting landslides | ||

<ref> Zhang, Qing (2024) Spatial distribution prediction of landslide susceptibility based on integrated particle swarm optimization, Frontiers in Earth Science </ref>. | <ref> Zhang, Qing (2024) Spatial distribution prediction of landslide susceptibility based on integrated particle swarm optimization, ''Frontiers in Earth Science'' vol. 12, doi=10.3389/feart.2024.1516615</ref>. | ||

==References== | ==References== | ||

<references /> | <references /> | ||

Latest revision as of 18:59, 14 December 2024

Author: David Schluneker (dms565), Thomas Ploetz (tep52), Michael Sorensen (mds385), Amrith Kumaar (ak836), Andrew Duffy (ajd296) (ChemE 6800 Fall 2024)

Stewards: Nathan Preuss, Wei-Han Chen, Tianqi Xiao, Guoqing Hu

Introduction

Particle Swarm Optimization (PSO) is inspired by nature and groups or swarms of natural creatures. It uses multiple “particles” distributed across a solution space to slowly converge upon a global, optimized solution. This algorithm does not require calculation of the function’s gradient or slope, instead using simple weights and the contribution of the rest of the swarm to provide a “fitness” value [1] to leading to a technique dependent only on simple calculations and a number of starting points.

The PSO algorithm was formulated and initially presented by Kennedy and Eberhart specifically to find solutions to discontinuous, but non-differentiable functions. The concept was presented at the IEEE conference on neural networks in 1995 [2]. The pair later published a book Swarm Intelligence in 2001 that further expanded on the concept [3]. Others have summarized the algorithm [4] [5] [6]. This algorithm does not leverage formal methodology or mathematical rigor to identify an ideal point but instead uses a heuristic approach to explore a wide range of starting locations and conditions. These features allow the PSO algorithm to efficiently optimize complex functions.

The biological analogy for a PSO algorithm is a flock of birds, a school of fish, or a colony of ants trying to find a food resource. Each animal, or particle, is an independent entity that is conducting its own search routine for an optimal result. However, the particles will also be influenced and guided by motions of the larger swarm. The results any particle has already seen, as well as the influence of the larger swarm helps all the particles converge to an optimal solution.

In a more technical dissection, a PSO algorithm must be initially set-up with how many particles and iterations the user wants to use. More particles improve the convergence likelihood and help ensure the global optimum can be found due to a more complete search at the cost of computing resources required. The PSO algorithm allows a user to set different “weights”, step sizes, and speeds for the particles to control how influenced each particle is by its past points (cognitive) and by the other nearby points (social) in the swarm and how large of steps will be taken. These weights allow algorithm adjustment to tailor the performance and computational requirements.

The goals of studying this topic are to learn more about an algorithm capable of solving nondifferentiable functions that are relevant to modern problems. Many optimization algorithms use the gradient function, so it is interesting to see an algorithm that does not require gradient computations. Additionally, the algorithm is still in active use in many fields [6] [7]. PSO is regularly used to solve optimization problems in the gas and oil industry, antenna design [8], and electric power distribution [9]. As functions get very complex in the real-world, the PSO algorithm is still capable of performing an effective search within very complex functions due to its heuristic and operability on non-differentiable functions.

Algorithm Discussion

The structure of the algorithm is simple. The algorithm seeks to find an optimum of an objective function, . A set of particles, with members , forming a swarm, is initialized randomly. The algorithm runs for a number of iterations. At each iteration, for each particle in the swarm, each particle’s position is updated to be . Subscript indicates the iteration of the algorithm. Subscript indicates the index of the particle in the swarm. Both the optimal solution for a particular particle, , and a global optimum, are tracked. is the best solution to the objective function that a particular particle, , has experienced over all iterations. is the best solution to the objective function for all particles, at all iterations. The algorithm is halted when either a fixed number of iterations is reached or a stopping criteria is reached. A typical stopping criteria is when the change between iterations is below a user-set threshold, demonstrating that the particles are not moving from the identified solution point. The algorithm’s control flow is shown in the following flow chart.

Figure 1: Algorithm Flow Chart

A velocity, is independently computed for each particle . The velocity is the result of the sum of the preceding velocity, a cognitive component and a social component. The cognitive component is and pulls the solution towards the best point observed by this particle. The social component is and pulls the solution towards the best point observed across the entire swarm. Each of the social component and cognitive component are weighed by a factor. The state that must be tracked across loop iterations is; , , for each particle and .

The convergence of the algorithm can be checked in a few different ways. The original papers were based on training neural networks, so the optimization was halted when the neural network was sufficiently able to make predictions [2] [10]. This approach is flawed because the designer of the algorithm used a priori knowledge of how good the neural network will perform. More generally, this approach incorporates application specific logic to stop when the optimal solution is good enough. Alternatively, the algorithm can be terminated following a predetermined number of iterations. Another approach is to stop when the solution's improvement at a given iteration stops [11].

The pseudocode for the PSO algorithm attempting to minimize a solution follows. The algorithm can be modified to search for a maximum by changing the initialization of and along with their updates.

SWARM is initialized randomly

initialized to 0

initialized to

initialized to

For i in MAX_ITERATIONS:

For particle in SWARM:

if

if

PRINT “Local optimal at ”

Figure 2: Algorithm Pseudocode

The algorithm has a few hyperparameters including; number of particles in the swarm, weights of the cognitive update on the velocity, weights on the social update on the velocity. The Pymoo Python implementation suggests a swarm size of 25, velocity weight of 0.8, a social update weight of 2.0 and a cognitive update of 2.0 [12]. Practitioners will need to adjust parameters and determine how their problem reacts to modifications of the hyperparameters. Heuristics may be used to adjust the various weights between iterations of the algorithm. Algorithms that adjust the hyperparameters based on heuristics are called Adaptive Particle Swarm Optimization (APSO) [13].

PSO makes very few assumptions. The algorithm can be trivially adopted to work with real, integer or mixed functions. The algorithm does not rely on gradients or hessians, so there are no assumptions or requirements on the function being continuous or differentiable. However, the lack of assumptions comes at a cost, there is no proof that the algorithm will find an optimal solution. One of the original papers by Kennedy in 1997 [10] indicated a tendency for the optimization to get stuck in local minimum. Adjustment of the algorithm’s hyperparameters is a performance vs robustness trade that may have avoided sticking in a local optimum.

Numerical Example

This section will walk through a numerical example of solving a complex objective function using Particle Swarm Optimization.

Given a non-convex function with multiple local optima:

Step 1: Define the Objective Function

Objective Function:

Step 2: Define Key Variables

| Symbol | Description | Value | Additional Notes |

|---|---|---|---|

| P | Total Number of Particles used to search the function | 25 | Larger number means greater chance of finding the global optimum, but larger computational load |

| random number between 0 and 1 | (0,1) | to be randomly generated at each evaluation | |

| random number between 0 and 1 | (0,1) | to be randomly generated at each evaluation | |

| Inertia Weight Constant (0,1) | 0.7 | ||

| Cognitive Coefficient

(Independence, prioritize the self) |

0.1* | recommend & sufficiently low value for slower smoother operation | |

| Social Coefficient

(Codependence, prioritize the group) |

0.15* | recommend & sufficiently low value for slower smoother operation |

- The algorithm is highly sensitive to c1 and c2. Recommend referencing the search space and using 1/30 to 1/60 of the total range. Ex: target search range is -3 to 3 by -3 to 3, use a value 0.1 to 0.2 to prevent “overshooting” and oscillations of the individual particles.

Step 3: Define Stopping Criteria

For this example select a maximum number of iterations (t):

It is important to note that there are alternative stopping criteria such as checking for convergence of the particles - such as a minimum delta between points or using a Confidence Interval on the X and Y coordinates as your threshold. This could lead to more, or less, iterations depending upon what threshold is used. Care should be taken to ensure the stopping criteria can actually be met. This wiki does not provide an example of this methodology at this point in time.

Step 4: Setup Particle Data

Each particle has three main properties

- Position of particle , at iteration , denoted as where:

or

- Velocity Vector denoted as:

or

- Objective Function Value of:

Where equals total number of particles, cumulatively these would be represented as:

This wiki uses not due to starting at not

Important Note only tracks the current iteration of particle , not all values of a particle over iterations:

and and

This wiki guide will use randomly generated initialization for particle position and velocity. There are alternative initialization methods available that are not covered in this guide such as using a set of points in a grid pattern.

For and , define initialization data for all particles given and define a range to search within:

To ensure an even distribution and less initial clustering of the particles, this example will use a uniform distribution to create surrogate data to initialize the position. Depending on how the code is set up, scaling and offset factors may be required.

This example will use a normal distribution to create surrogate data to initialize the Velocity vector. Depending how the code is set up, a scaling factor may be required.

Example Particle Data, Particle # 7:

- Particle Position Coordinates at time () :

- Particle Velocity Vector at time () :

- Particle Objective Value at time () :

Step 5: Evaluate Function at Positions

For each particle, evaluate the objective function at their position:

Objective Function:

From Step 2, the defined number of particles is

Step 5.1 - Calculate Local Optima

For each particle, determine the best value in its own history. This will be called "Personal Best Objective Function" and notated as . At initialization, there is only one value.

In effect the program is tracking the position and value of each particle through all iterations and choosing the optimal value - in this example the minimum. As such, rather than tracking all values, the computational memory needs can be reduced by only tracking the optima found so far and comparing to the next iteration’s value:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pbo^i = min[f^i(X^i(0)), \cdots, f^i(X^i(m))] \rightarrow min[f^i(X^i(t_{previous best}^i)), f^i(X^i(t))] = [f^i(X^i(t_{best}^i))] \,}

Note: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (t_{best}^i) }

becomes Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (t_{previous best}^i) }

in the next iteration.

Cumulatively this can also be written as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pbo = [min[f^0(X^0(t_{previous best}^0)), f^0(X^0(t))], \cdots, min[f^{P-1}(X^{P-1}(t_{previous best}^{P-1})), f^{P-1}(X^{P-1}(t))]] = \,}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pbo = [f^0(X^0(t_best^0)), \cdots , f^{P-1}(X^{P-1}(t_{best}^{P-1}))] = \,}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pbo = [-1.6941, \cdots, -1.7126] \,}

Note: Shown is at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t=0 } . All "best" values occur at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t=0 } upon initialization.

The location of the optimal objective function value (Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pbo^i }

) at (Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t_{best} }

) needs to be recorded. (Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pb^i }

) will be used to notate the "Personal Best Location" or the positional coordinates that return the best (Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(X) }

) ever explored by particle (Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle i }

) .

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pb^i = [X^i(0), \cdots, X^i(m)] \rightarrow [X^i(t_{best}^i)] = \begin{bmatrix} x^i(t_{best}^i) \\ y^i(t_{best}^i) \end{bmatrix} \,}

Cumulatively written as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pb = [X^0(t_{best}^0), \cdots, X^{P-1}(t_{best}^{P-1})] = \begin{bmatrix} x^0(t_{best}^0), & \cdots , & x^{P-1}(t_{best}^{P-1}) \\ y^0(t_{best}^0), & \cdots , & y^{P-1}(t_{best}^{P-1}) \end{bmatrix}\,}

Step 5.2 - Calculate Global Optima

Now that the personal best for all particles is known, the next step is to compare all particles to determine the best global value. The "Global Best Objective Function Value" will be notated as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gbo } , this represent the best Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(X) } explored by all particles.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gbo = min[pbo] = f(X(t_{best})) }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gbo = min [-1.6941, \cdots , -1.7126] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gbo = [-1.7137] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1.7137 } correlates to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle pbo^{12} } or particle 12 of 24 (25 particles = particle 0 Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (i=0) } , ... particle 24 Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (i=24) } )

The Global Best Objective Function Value was determined in the previous step, next the coordinates that achieved that value needs to be recorded. "Global Best Location" is notated as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gb }

to represent the position that gives the best Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(X) }

explored by all particles.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gb =[X(t_{best})] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle gb = pb^{12}=[X^{12}(t_{best}^{12})] =\begin{bmatrix} x^{12}(t_{best}^{12}) \\ y^{12}(t_{best}^{12}) \end{bmatrix} = \begin{bmatrix} -0.4832 \\ 0.1907 \end{bmatrix} }

Step 6: Calculate Next Iteration Position, Velocity, Value

Calculate the next iteration values by using the following rules:

Position:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X^i(t+1) = X^i(t)+V^i(t+1) = \begin{bmatrix} x^i(t)+v_x^i(t+1) \\ y^i(t)+v_y^i(t+1) \end{bmatrix} }

Velocity:

Note: need to generate a random value for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle r_1 } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle r_2 } at this step

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^i(t+1)=wV^i(t)+c_1 r_1 (pb^i - X^i(t))+ c_2 r_2 (gb-X^i(t)) }

Example:

Determine Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X^5(t+1) } where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t=3 } :

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X^5(4) = X^5(3)+V^5(4) = \begin{bmatrix} x^5(3)+v_x^5(4) \\ y^5(3)+v_y^5(4) \end{bmatrix}}

Requires determining Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4) }

first:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4)=wV^5(3)+c_1 r_1 (pb^5 - X^5(3))+ c_2 r_2 (gb-X^5(3)) }

Define Variables:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle w=0.8 , c_1=0.1 , c_2=0.15, r_1=0.3180, r_2=0.1100}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(3) = \begin{bmatrix} 0.2024 \\ -0.0449 \end{bmatrix}, pb^5 = \begin{bmatrix} -1.8202 \\ -0.5951 \end{bmatrix}, X^5(3) = \begin{bmatrix} -1.6177 \\ 0.5502 \end{bmatrix}, gb = \begin{bmatrix} 0.78606 \\ 0.27157 \end{bmatrix} }

Solve for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4) } :

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4) = 0.8 * \begin{bmatrix} 0.2024 \\ -0.0449 \end{bmatrix} + 0.1 * 0.3180 * \begin{pmatrix} \begin{bmatrix} -1.8202 \\ -0.5951 \end{bmatrix} - \begin{bmatrix} 0.78606 \\ 0.27157 \end{bmatrix} \end{pmatrix} + 0.15 * 0.1100* \begin{pmatrix} \begin{bmatrix} 0.78606 \\ 0.27157 \end{bmatrix} - \begin{bmatrix} -1.6177 \\ 0.5502 \end{bmatrix} \end{pmatrix} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4) = 0.8 * \begin{bmatrix} 0.2024 \\ -0.0449 \end{bmatrix} + 0.03180 * \begin{bmatrix} -2.60626 \\ 0.32353 \end{bmatrix} + 0.0165* \begin{bmatrix} 2.40376 \\ -0.27863 \end{bmatrix} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4) = \begin{bmatrix} 0.16192 \\ -0.03592 \end{bmatrix} + \begin{bmatrix} -0.08288 \\ 0.01028 \end{bmatrix} + \begin{bmatrix} 0.03966 \\ -0.0046 \end{bmatrix} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V^5(4) = \begin{bmatrix} 0.118703 \\ -0.03023 \end{bmatrix} }

Solve for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X^5(4) } :

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X^5(4) = X^5(3) + V^5(4) = \begin{bmatrix} -1.6177 \\ 0.5502 \end{bmatrix} + \begin{bmatrix} 0.118703 \\ -0.03023 \end{bmatrix} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X^5(4) = \begin{bmatrix} -1.7989 \\ 0.5199 \end{bmatrix} }

Reevaluate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(X^i(t+1)) } :

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x,y)=sin(5x+1)+cos(7y-3)+x^2+y^2 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(X^5(4))=sin(5*(-1.7989)+1)+cos(7*(0.5199)-3)+(-1.7989)^2+(0.5199)^2 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(X^5(4))=3.3189 }

Step 7: Iteratively Solve

Calculate next optima, position, velocity, and function values for each particle. Repeat until stopping criteria has been met.

Final Results after Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t=50 } iterations:

Global Best Value: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (gbo) } : Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1.7137 }

Global Best Position: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (gb) } : Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} -0.4545 \\ 0.2121 \end{bmatrix} }

Visual Representation

To help visualize what's going on:

Blue Dots = Each particle's current position

Blue Arrows = Each particle's velocity

Black Dots = Each particle's best position

Applications

The strength of PSO is in its simplicity and ability to handle arbitrary functions. The algorithm does not use gradient information, making it useful in contexts where computation of the gradient is expensive or the function is otherwise unreliable.

PSO was originally used to train neural networks (NN)[2]. Neural networks frequently have highly non-linear loss functions and it is therefore difficult to find a global optimum [14]. Several papers have noted an improvement in NN accuracy using PSO optimization when compared to back-propagation due to the ability to avoid local optimum [15]. However, the current state of the current practice for training deep neural networks remains the back-propagation algorithm. Back-propagation is more efficient in the context of neural networks because for each particle at each iteration, there will need to be an error function computation. For example, a swarm size of 25 requires 25 times more error function evaluations [16].

In addition to identifying weights in the Neural Networks (training), PSO has been used in selecting hyperparameters for neural networks. In these applications PSO is used to maximize the neural network’s accuracy by varying parameters including number of nodes and structure of the networks [17]. PSO fits this application well because this function is nonlinear and the gradient is non-continuous. Hyperparameters for NNs may be real, integer or binary values.

PSO is most often compared to other heuristic based algorithms such as; genetic algorithms differential evolution simulated annealing. One study for a bus scheduling application did find the PSO was better than genetic algorithms with respect to the optimality of solution and run-time [16]. The results in the paper are difficult to generalize, as results will be sensitive to the application as well as the algorithm’s hyperparameters. The recommended choice of heuristic optimization algorithms remains up to the practitioner’s judgement.