Simulated annealing: Difference between revisions

No edit summary |

|||

| (4 intermediate revisions by the same user not shown) | |||

| Line 62: | Line 62: | ||

== Numerical Examples == | == Numerical Examples == | ||

[[File:Figure 1. Initial configuration.png|alt=|thumb|204x204px|Figure 1: Configuration for L]] | |||

The Ising model is a classic example from statistical physics and in order to reach its optimal state, we can use Simulated Annealing (SA) to reach a minimal energy state.<ref>Peierls, R. (1936). On Ising’s model of ferromagnetism. ''Mathematical Proceedings of the Cambridge Philosophical Society'', 32(3), 477–481.</ref> In the SA approach, we start with a high "temperature" that allows random changes in the system’s configuration, which helps explore different arrangements of spins. As the temperature lowers, the algorithm gradually favors configurations with lower energy, eventually "freezing" into a low-energy state. | The Ising model is a classic example from statistical physics and in order to reach its optimal state, we can use Simulated Annealing (SA) to reach a minimal energy state.<ref>Peierls, R. (1936). On Ising’s model of ferromagnetism. ''Mathematical Proceedings of the Cambridge Philosophical Society'', 32(3), 477–481.</ref> In the SA approach, we start with a high "temperature" that allows random changes in the system’s configuration, which helps explore different arrangements of spins. As the temperature lowers, the algorithm gradually favors configurations with lower energy, eventually "freezing" into a low-energy state. | ||

=== '''''Problem Setup''''' === | === '''''Problem Setup''''' === | ||

Consider a small <math>2\times 2</math> grid of spins, where each spin can be either <math>+1</math> or <math>-1</math> . The energy of the system is determined by the Hamiltonian: <math>E = -J \sum_{\langle i,j \rangle} S_i S_j</math>, where <math>J</math> is a coupling constant (set to 1 for simplicity), <math>s_i</math> and <math>s_j</math> are neighboring spins, and the summation is over nearest neighbors. The goal is to minimize <math>E</math>, achieved when all spins are aligned. | Consider a small <math>2\times 2</math> grid of spins, where each spin can be either <math>+1</math> or <math>-1</math> . The energy of the system is determined by the Hamiltonian: <math>E = -J \sum_{\langle i,j \rangle} S_i S_j</math>, where <math>J</math> is a coupling constant (set to 1 for simplicity), <math>s_i</math> and <math>s_j</math> are neighboring spins, and the summation is over nearest neighbors. The goal is to minimize <math>E</math>, achieved when all spins are aligned. | ||

| Line 75: | Line 75: | ||

\end{bmatrix}</math> | \end{bmatrix}</math> | ||

# Calculate Initial Energy: For this configuration, each pair of neighboring spins contributes <math>-JS_i S_j</math> to the energy. Calculate by summing these contributions: The pairs are <math>(+1, -1), (-1, +1), (-1, +1), (+1, -1)</math>, all of which contribute <math>+1</math>. So, initial energy <math>E = 4</math>. | # Calculate Initial Energy: For this configuration, each pair of neighboring spins contributes <math>-JS_i S_j</math> to the energy. Calculate by summing these contributions: The pairs are <math>(+1, -1), (-1, +1), (-1, +1), (+1, -1)</math>, all of which contribute <math>+1</math>. So, initial energy <math>E = 4</math>. | ||

# Neighboring Configurations: Select a random spin to flip. Suppose the spin in the top right corner is chosen, changing <math>+1</math> to <math>-1</math>: <math>\text{New L} = \begin{bmatrix} +1 & +1 \\ | #Neighboring Configurations: Select a random spin to flip. Suppose the spin in the top right corner is chosen, changing <math>+1</math> to <math>-1</math>: <math>\text{New L} = \begin{bmatrix} +1 & +1 \\ | ||

-1 & +1 \end{bmatrix}</math> Recalculate <math>E</math> for the new configuration: Now, three pairs are aligned <math>(+1,+1)</math> and contribute <math>-1</math> each, while one pair <math>(-1,+1)</math> contributes <math>+1</math>, so total energy <math>E = -2</math>. | -1 & +1 \end{bmatrix}</math> Recalculate <math>E</math> for the new configuration: Now, three pairs are aligned <math>(+1,+1)</math> and contribute <math>-1</math> each, while one pair <math>(-1,+1)</math> contributes <math>+1</math>, so total energy <math>E = -2</math>. | ||

# Acceptance Decision: Calculate <math>\Delta E = E_{\text{new}} - E_{\text{old}} = -2 - 4 = -6</math>. Since <math>\Delta E < 0</math>, accept the new configuration. | # Acceptance Decision: Calculate <math>\Delta E = E_{\text{new}} - E_{\text{old}} = -2 - 4 = -6</math>. Since <math>\Delta E < 0</math>, accept the new configuration. | ||

# Cooling Schedule: Reduce the temperature <math>T</math> gradually to decrease the likelihood of accepting higher-energy configurations. | |||

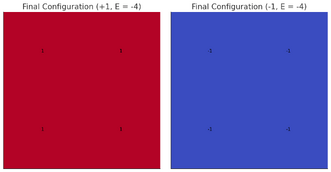

# Iterate: Continue flipping spins randomly, recalculating energy, and applying the acceptance criterion until the system reaches a low-energy configuration (e.g., all <math>+1</math> or all <math>-1</math>). After several iterations at decreasing temperatures, the system is likely to converge to a minimum-energy configuration, such as: <math>\begin{bmatrix} +1 & +1 \\ | |||

+1 & +1 \end{bmatrix} \quad \text{or} \quad \begin{bmatrix} -1 & -1 \\ | |||

-1 & -1 \end{bmatrix}</math>as shown.[[File:Final config. E=-4.png|center|thumb|331x331px|Figure 2: Two Final configurations]] | |||

== Application == | |||

SA is applied across diverse fields due to its effectiveness in solving complex optimization tasks, including areas like physics, engineering, data science, and finance. Some detailed examples are listed below. | |||

==== '''J'''ob Scheduling in Manufacturing ==== | |||

In job scheduling, the goal is to minimize the total production time (makespan) by optimally assigning tasks to machines. Traditional scheduling algorithms often get trapped in local minima due to the problem’s complexity.<ref>Barnes, J. W., & Laguna, M. (1993). "Solving the multiple-machine weighted flow time problem using Tabu search." ''IIE Transactions'', 25(1), 121-128.</ref> SA addresses this by allowing suboptimal solutions during the search process to escape local optima, eventually converging on a near-optimal schedule as the temperature decreases.<ref>Barnes, J. W., & Laguna, M. (1993). "Solving the multiple-machine weighted flow time problem using Tabu search." ''IIE Transactions'', 25(1), 121-128</ref> | |||

Given an initial solution <math>x_\text{current} | |||

</math> representing a job sequence, the objective is to minimize the makespan: | |||

''' <math> | |||

min f(x) = \text{makespan} | |||

</math>''' | |||

where <math>f(x)</math> is the total completion time. SA perturbs the current sequence <math>x_\text{current} | |||

</math> to explore new solutions <math>x_\text{new} | |||

</math>and controls acceptance of suboptimal solutions by: | |||

<math> | |||

P = \exp\left(-\frac{\Delta E}{T}\right) | |||

</math> | |||

where <math>\Delta E = f(x_\text{new}) - f(x_\text{current}) | |||

</math> and <math>T</math> is the temperature. A cooling schedule reduces <math>T</math> over iterations, focusing the search on refining the best solutions. | |||

==== Hyperparameter Tuning in Machine Learning ==== | |||

Hyperparameter tuning is crucial for optimizing machine learning models. With SA, different combinations of hyperparameters are treated as solutions, and the algorithm explores this space to find the best configuration. By allowing suboptimal combinations early on, SA avoids getting stuck in poor configurations, converging on an optimal setting as it cools. | |||

==== Path Optimization in Robotics ==== | |||

SA is widely used in robotics for path optimization, where the goal is to find the shortest or most efficient path for a robot to follow. The algorithm treats each path configuration as a solution and iteratively refines it by accepting suboptimal paths temporarily. As temperature decreases, SA converges to an optimal or near-optimal path, balancing exploration and refinement. | |||

==== Inventory Optimization in Supply Chain ==== | |||

SA is also applied in inventory management to find an optimal stock level that balances storage costs and service levels. Starting with an initial inventory policy, SA perturbs the policy to explore new configurations and accepts costlier policies initially to escape local optima. Over time, the solution converges to a cost-effective policy.<ref>Alfa, A., Heragu, S., & Chen, M. (1991). "A 3-opt based simulated annealing algorithm for vehicle routing problems." ''Computers & Industrial Engineering'', 21(1-4), 635-639.</ref> | |||

==== '''Other Applications''' ==== | |||

SA has applications in diverse areas like finance (portfolio optimization), logistics (route optimization), and artificial intelligence (feature selection). For example, in portfolio optimization, SA can optimize asset weights to balance risk and return, allowing for high-risk portfolios initially before settling on a balanced configuration.<ref>Koulamas, C., Antony, S. R., & Jaen, R. (1994). "A survey of simulated annealing applications to operations research problems." ''Omega'', 22(1), 41-56.</ref> | |||

== Conclusion == | |||

SA since its introduction in the 1980s, has been widely adopted as an effective optimization technique due to its ability to escape local minima and handle complex search spaces. Its probabilistic approach based on metallurgical annealing processes makes it particularly suitable for combinatorial optimization problems where deterministic methods struggle. SA has found successful applications across diverse fields including manufacturing job scheduling, machine learning hyperparameter tuning, robotics path planning, supply chain optimization, and financial portfolio management, demonstrating its versatility and practical value in solving real-world optimization challenges. | |||

Latest revision as of 15:01, 14 December 2024

Author: Gwen Zhang (xz929), Yingjie Wang (yw2749), Junchi Xiao (jx422), Yichen Li (yl3938), Xiaoxiao Ge (xg353) (ChemE 6800 Fall 2024)

Stewards: Nathan Preuss, Wei-Han Chen, Tianqi Xiao, Guoqing Hu

Introduction

Simulated annealing (SA) is a probabilistic optimization algorithm inspired by the metallurgical annealing process, which reduces defects in a material by controlling the cooling rate to achieve a stable state.[1] The core concept of SA is to allow algorithms to escape the constraints of local optima by occasionally accepting suboptimal solutions. This characteristic enables SA to find near-global optima in large and complex search spaces.[2] During the convergence process, the probability of accepting a suboptimal solution diminishes over time.

SA is widely applied in diverse fields such as scheduling,[3] machine learning, and engineering design, and is particularly effective for combinatorial optimization problems that are challenging for deterministic methods.[4] First proposed in the 1980s by Kirkpatrick, Gelatt, and Vecchi, SA demonstrated its efficacy in solving various complex optimization problems through its analogy to thermodynamics.[5] Today, it remains a powerful heuristic often combined with other optimization techniques to enhance performance in challenging problem spaces.

Algorithm Discussion

Formal Description of the Algorithm

SA is a probabilistic technique for finding approximate solutions to optimization problems, particularly those with large search spaces. Inspired by the annealing process in metallurgy, the algorithm explores the solution space by occasionally accepting worse solutions with a probability that diminishes over time. This reduces the risk of getting stuck in local optima and increases the likelihood of discovering the global optimum.

The basic steps of the SA algorithm are as follows:

- Initialization: Begin with an initial solution and an initial temperature Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T_0 } .

- Iterative Improvement: While the stopping criteria are not met:

- Generate a new solution Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_{new}} in the neighborhood of the current solution s.

- Calculate the difference in objective values Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Delta E = f(s_{new}) - f(s)} , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} is the objective function.

- If Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Delta E <0} (i.e., Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_{new}} is a better solution), accept Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_{new}} as the current solution.

- If Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Delta E >0} , accept Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_{new}} with a probability Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle P = \exp\left(-\frac{\Delta E}{T}\right)} .

- Update the temperature Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T } according to a cooling schedule, typically Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T=\alpha \cdot T } , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha } is a constant between 0 and 1.

- Termination: Stop the process when the temperature falls below a minimum threshold , or after a predefined number of iterations. The best solution encountered is returned as the approximate optimum.

Pseudocode for Simulated Annealing

def SimulatedAnnealing(f, s_0, T_0, alpha, T_min):

s_current = s_0

T = T_0

while T > T_min:

s_new = GenerateNeighbor(s_current)

delta_E = f(s_new) - f(s_current)

if delta_E < 0 or Random(0, 1) < math.exp(-delta_E / T):

s_current = s_new

T = alpha * T

return s_current

Assumptions and Conditions

Objective Function Continuity

The objective function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f } is assumed to be defined and continuous in the search space.

Cooling Schedule

The cooling schedule, typically geometric, determines how Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T } decreases over iterations. The cooling rate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha } affects the convergence speed and solution quality.

Acceptance Probability

Based on the Metropolis criterion, the probability Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle P = \exp\left(-\frac{\Delta E}{T}\right)} allows the algorithm to accept worse solutions initially, encouraging broader exploration.

Termination Condition

SA terminates when Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T } falls below Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T_{min} } or when a predefined number of iterations is reached.

Numerical Examples

The Ising model is a classic example from statistical physics and in order to reach its optimal state, we can use Simulated Annealing (SA) to reach a minimal energy state.[6] In the SA approach, we start with a high "temperature" that allows random changes in the system’s configuration, which helps explore different arrangements of spins. As the temperature lowers, the algorithm gradually favors configurations with lower energy, eventually "freezing" into a low-energy state.

Problem Setup

Consider a small Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 2\times 2} grid of spins, where each spin can be either Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle +1} or Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1} . The energy of the system is determined by the Hamiltonian: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E = -J \sum_{\langle i,j \rangle} S_i S_j} , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle J} is a coupling constant (set to 1 for simplicity), Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_i} and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_j} are neighboring spins, and the summation is over nearest neighbors. The goal is to minimize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E} , achieved when all spins are aligned.

Algorithm Steps

- Initialize the spins: start with a random configuration. For example: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle L = \begin{bmatrix} +1 & -1 \\ -1 & +1 \end{bmatrix}}

- Calculate Initial Energy: For this configuration, each pair of neighboring spins contributes Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -JS_i S_j} to the energy. Calculate by summing these contributions: The pairs are Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (+1, -1), (-1, +1), (-1, +1), (+1, -1)} , all of which contribute Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle +1} . So, initial energy Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E = 4} .

- Neighboring Configurations: Select a random spin to flip. Suppose the spin in the top right corner is chosen, changing Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle +1} to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1} : Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \text{New L} = \begin{bmatrix} +1 & +1 \\ -1 & +1 \end{bmatrix}} Recalculate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E} for the new configuration: Now, three pairs are aligned Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (+1,+1)} and contribute Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1} each, while one pair Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (-1,+1)} contributes Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle +1} , so total energy Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E = -2} .

- Acceptance Decision: Calculate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Delta E = E_{\text{new}} - E_{\text{old}} = -2 - 4 = -6} . Since Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Delta E < 0} , accept the new configuration.

- Cooling Schedule: Reduce the temperature Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T} gradually to decrease the likelihood of accepting higher-energy configurations.

- Iterate: Continue flipping spins randomly, recalculating energy, and applying the acceptance criterion until the system reaches a low-energy configuration (e.g., all Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle +1}

or all Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1}

). After several iterations at decreasing temperatures, the system is likely to converge to a minimum-energy configuration, such as: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} +1 & +1 \\ +1 & +1 \end{bmatrix} \quad \text{or} \quad \begin{bmatrix} -1 & -1 \\ -1 & -1 \end{bmatrix}}

as shown.

Figure 2: Two Final configurations

Application

SA is applied across diverse fields due to its effectiveness in solving complex optimization tasks, including areas like physics, engineering, data science, and finance. Some detailed examples are listed below.

Job Scheduling in Manufacturing

In job scheduling, the goal is to minimize the total production time (makespan) by optimally assigning tasks to machines. Traditional scheduling algorithms often get trapped in local minima due to the problem’s complexity.[7] SA addresses this by allowing suboptimal solutions during the search process to escape local optima, eventually converging on a near-optimal schedule as the temperature decreases.[8]

Given an initial solution Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_\text{current} } representing a job sequence, the objective is to minimize the makespan:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle min f(x) = \text{makespan} }

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x)} is the total completion time. SA perturbs the current sequence Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_\text{current} } to explore new solutions Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_\text{new} } and controls acceptance of suboptimal solutions by:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle P = \exp\left(-\frac{\Delta E}{T}\right) }

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Delta E = f(x_\text{new}) - f(x_\text{current}) } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T} is the temperature. A cooling schedule reduces Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle T} over iterations, focusing the search on refining the best solutions.

Hyperparameter Tuning in Machine Learning

Hyperparameter tuning is crucial for optimizing machine learning models. With SA, different combinations of hyperparameters are treated as solutions, and the algorithm explores this space to find the best configuration. By allowing suboptimal combinations early on, SA avoids getting stuck in poor configurations, converging on an optimal setting as it cools.

Path Optimization in Robotics

SA is widely used in robotics for path optimization, where the goal is to find the shortest or most efficient path for a robot to follow. The algorithm treats each path configuration as a solution and iteratively refines it by accepting suboptimal paths temporarily. As temperature decreases, SA converges to an optimal or near-optimal path, balancing exploration and refinement.

Inventory Optimization in Supply Chain

SA is also applied in inventory management to find an optimal stock level that balances storage costs and service levels. Starting with an initial inventory policy, SA perturbs the policy to explore new configurations and accepts costlier policies initially to escape local optima. Over time, the solution converges to a cost-effective policy.[9]

Other Applications

SA has applications in diverse areas like finance (portfolio optimization), logistics (route optimization), and artificial intelligence (feature selection). For example, in portfolio optimization, SA can optimize asset weights to balance risk and return, allowing for high-risk portfolios initially before settling on a balanced configuration.[10]

Conclusion

SA since its introduction in the 1980s, has been widely adopted as an effective optimization technique due to its ability to escape local minima and handle complex search spaces. Its probabilistic approach based on metallurgical annealing processes makes it particularly suitable for combinatorial optimization problems where deterministic methods struggle. SA has found successful applications across diverse fields including manufacturing job scheduling, machine learning hyperparameter tuning, robotics path planning, supply chain optimization, and financial portfolio management, demonstrating its versatility and practical value in solving real-world optimization challenges.

- ↑ Metropolis, N., Rosenbluth, A. W., Rosenbluth, M. N., Teller, A. H., & Teller, E.(1953). "Equation of state calculations by fast computing machines." Journal of Chemical Physics, 21(6), 1087-1092.

- ↑ Kirkpatrick, S., Gelatt, C. D., & Vecchi, M. P. (1983). "Optimization by simulated annealing." Science, 220(4598), 671-680.

- ↑ Aarts, E. H., & Korst, J. H. (1988). Simulated Annealing and Boltzmann Machines: A Stochastic Approach to Combinatorial Optimization and Neural Computing. Wiley.

- ↑ Cerny, V. (1985). "Thermodynamical approach to the traveling salesman problem: An efficient simulation algorithm." Journal of Optimization Theory and Applications, 45(1), 41-51.

- ↑ Kirkpatrick, S. (1984). "Optimization by simulated annealing: Quantitative studies." Journal of Statistical Physics, 34(5-6), 975-986.

- ↑ Peierls, R. (1936). On Ising’s model of ferromagnetism. Mathematical Proceedings of the Cambridge Philosophical Society, 32(3), 477–481.

- ↑ Barnes, J. W., & Laguna, M. (1993). "Solving the multiple-machine weighted flow time problem using Tabu search." IIE Transactions, 25(1), 121-128.

- ↑ Barnes, J. W., & Laguna, M. (1993). "Solving the multiple-machine weighted flow time problem using Tabu search." IIE Transactions, 25(1), 121-128

- ↑ Alfa, A., Heragu, S., & Chen, M. (1991). "A 3-opt based simulated annealing algorithm for vehicle routing problems." Computers & Industrial Engineering, 21(1-4), 635-639.

- ↑ Koulamas, C., Antony, S. R., & Jaen, R. (1994). "A survey of simulated annealing applications to operations research problems." Omega, 22(1), 41-56.