Quadratic programming

mNo edit summary |

m (→Introduction) |

||

| Line 7: | Line 7: | ||

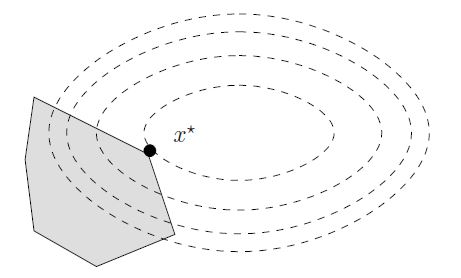

[[File:QPPic.jpg|frame|Optimizating of a quadratic function.<span style="font-size: 8pt; position:relative; bottom: 0.3em;">12</span>]] | [[File:QPPic.jpg|frame|Optimizating of a quadratic function.<span style="font-size: 8pt; position:relative; bottom: 0.3em;">12</span>]] | ||

Quadratic programming (QP) is the problem of optimizing a quadratic objective function and is one of the simplests form of non-linear programming.<span style="font-size: 8pt; position:relative; bottom: 0.3em;">1</span> The objective function can contain bilinear or up to second order polynomial terms,<span style="font-size: 8pt; position:relative; bottom: 0.3em;">2</span> and the constraints are linear and can be both equalities and inequalities. QP is widely used in image and signal processing, to optimize financial portfolios, to perform the least-squares method of regression, to control scheduling in chemical plants, and in [https://optimization.mccormick.northwestern.edu/index.php?title=Sequential_quadratic_programming&action=edit&redlink=1 sequential quadratic programming], a technique for solving more complex non-linear programming problems.<span style="font-size: 8pt; position:relative; bottom: 0.3em;">3,4</span> The problem was first explored in the early 1950s, most notably by Princeton University's [http://en.wikipedia.org/wiki/Philip_Wolfe_(mathematician) Wolfe] and [http://en.wikipedia.org/wiki/Marguerite_Frank Frank], who developed its theoretical background,<span style="font-size: 8pt; position:relative; bottom: 0.3em;">1</span> and by [http://en.wikipedia.org/wiki/Harry_Markowitz Markowitz], who applied it to portfolio optimization, a subfield of finance. | Quadratic programming (QP) is the problem of optimizing a quadratic objective function and is one of the simplests form of non-linear programming.<span style="font-size: 8pt; position:relative; bottom: 0.3em;">1</span> The objective function can contain bilinear or up to second order polynomial terms,<span style="font-size: 8pt; position:relative; bottom: 0.3em;">2</span> and the constraints are linear and can be both equalities and inequalities. 
QP is widely used in image and signal processing, to optimize financial portfolios, to perform the least-squares method of regression, to control scheduling in chemical plants, and in [https://optimization.mccormick.northwestern.edu/index.php?title=Sequential_quadratic_programming&action=edit&redlink=1 sequential quadratic programming], a technique for solving more complex non-linear programming problems.<span style="font-size: 8pt; position:relative; bottom: 0.3em;">3,4</span> The problem was first explored in the early 1950s, most notably by Princeton University's [http://en.wikipedia.org/wiki/Philip_Wolfe_(mathematician) Wolfe] and [http://en.wikipedia.org/wiki/Marguerite_Frank Frank], who developed its theoretical background,<span style="font-size: 8pt; position:relative; bottom: 0.3em;">1</span> and by [http://en.wikipedia.org/wiki/Harry_Markowitz Harry Markowitz], who applied it to portfolio optimization, a subfield of finance. | ||

=Problem formulation= | =Problem formulation= | ||

Revision as of 20:36, 25 September 2020

This web page is a duplicate of https://optimization.mccormick.northwestern.edu/index.php/Quadratic_programming

Author: Jack Heider (ChE 345 Spring 2015)

Steward: Dajun Yue, Fengqi You

Introduction

Quadratic programming (QP) is the problem of optimizing a quadratic objective function and is one of the simplest forms of non-linear programming.[1] The objective function can contain bilinear or up to second-order polynomial terms,[2] and the constraints are linear and can be both equalities and inequalities. QP is widely used in image and signal processing, to optimize financial portfolios, to perform the least-squares method of regression, to control scheduling in chemical plants, and in sequential quadratic programming, a technique for solving more complex non-linear programming problems.[3,4] The problem was first explored in the early 1950s, most notably by Princeton University's Wolfe and Frank, who developed its theoretical background,[1] and by Harry Markowitz, who applied it to portfolio optimization, a subfield of finance.

Problem formulation

A general quadratic programming formulation contains a quadratic objective function and linear equality and inequality constraints:[2,5,6]

$$\min_{x} \; f(x) = q^{T}x + \frac{1}{2}x^{T}Qx$$

$$\text{s.t.} \quad Ax = a, \quad Bx \le b, \quad x \ge 0$$

The objective function is arranged such that the vector $q$ contains the coefficients of all of the linear terms and the matrix $Q$ contains the second derivatives of all of the quadratic terms. Put more simply, $Q$ is the Hessian matrix of the objective function and $q$ is its gradient evaluated at $x = 0$. By convention, any constants contained in the objective function are left out of the general formulation.[6] The one-half in front of the quadratic term is included to cancel the factor of 2 that results from taking the derivative of a second-order polynomial.
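As a small worked illustration (the objective below is chosen here for concreteness), take $f(x) = 3x_1^2 + x_2^2 + 2x_1x_2 + x_1 + 6x_2 + 2$. Matching terms against $q^{T}x + \frac{1}{2}x^{T}Qx$ gives:

$$Q = \begin{bmatrix} 6 & 2 \\ 2 & 2 \end{bmatrix}, \qquad q = \begin{bmatrix} 1 \\ 6 \end{bmatrix}$$

$$\frac{1}{2}x^{T}Qx = \frac{1}{2}\left(6x_1^2 + 4x_1x_2 + 2x_2^2\right) = 3x_1^2 + 2x_1x_2 + x_2^2, \qquad q^{T}x = x_1 + 6x_2$$

The constant 2 is dropped, since it does not change where the optimum lies.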

As for the constraints, the matrix equation $Ax = a$ contains all of the linear equality constraints, and $Bx \le b$ contains all of the linear inequality constraints.

With multipliers $\lambda$ for the equality constraints and $\mu$ for the inequality constraints, the Lagrangian is:[6]

$$L(x,\lambda,\mu) = q^{T}x + \frac{1}{2}x^{T}Qx + \lambda^{T}(Ax-a) + \mu^{T}(Bx-b)$$

When there are only inequality constraints ($Bx \le b$), the $\lambda$ term drops out.

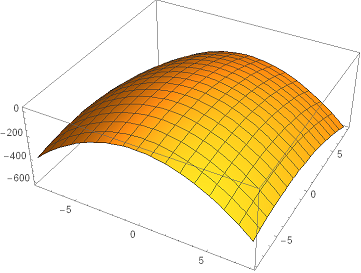

Global solutions

If the objective function is convex, then any local minimum found is also a global minimum, and if it is strictly convex, that minimum is unique. To analyze the function's convexity, one can compute its Hessian matrix and verify that all eigenvalues are positive, or, equivalently, one can verify that the matrix $Q$ is positive definite.[6] This is a sufficient condition, meaning that it is not required to be true in order for a local minimum to be the unique global minimum, but it guarantees this property when it holds.
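The positive-definiteness test can be sketched in a few lines. The version below is a minimal, dependency-free illustration that attempts a Cholesky factorization instead of computing eigenvalues (the two tests agree for symmetric matrices). The function name `is_positive_definite`, the tolerance, and the sample matrices are assumptions made for this example.

```python
import math

def is_positive_definite(Q, tol=1e-12):
    """Return True if the symmetric matrix Q (a list of rows) is positive definite.

    Attempts a Cholesky factorization Q = L L^T, which succeeds exactly when
    every pivot is strictly positive.
    """
    n = len(Q)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                pivot = Q[i][i] - s
                if pivot <= tol:
                    return False  # non-positive pivot: not positive definite
                L[i][i] = math.sqrt(pivot)
            else:
                L[i][j] = (Q[i][j] - s) / L[j][j]
    return True

# Illustrative matrices (assumed for this sketch).
Q_convex = [[6.0, 2.0], [2.0, 2.0]]      # both eigenvalues positive
Q_indefinite = [[1.0, 3.0], [3.0, 1.0]]  # eigenvalues 4 and -2

print(is_positive_definite(Q_convex))      # True
print(is_positive_definite(Q_indefinite))  # False
```

A factorization-based test avoids an eigenvalue solver, which keeps the sketch short. For large or ill-conditioned matrices a numerical library routine would be the more robust choice.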

KKT conditions

Solutions can be tested for optimality using the Karush-Kuhn-Tucker (KKT) conditions just as is done for other nonlinear problems.[5] The conditions below take $Q$ to be symmetric and assume the nonnegativity bounds $x \ge 0$ are written as rows of $Bx \le b$:

Condition 1: sum of gradients is zero:

$$\nabla_x L(x^*,\lambda^*,\mu^*) = q + Qx^* + A^{T}\lambda^* + B^{T}\mu^* = 0$$

Condition 2: all constraints satisfied:

$$Ax^* - a = 0$$

$$Bx^* - b \le 0$$

Condition 3: complementary conditions:

$$\mu^{*T}(Bx^* - b) = 0$$

$$\mu^* \ge 0$$

The multipliers $\lambda^*$ of the equality constraints are unrestricted in sign.
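A candidate point can be checked against these conditions numerically. The sketch below is an illustration rather than a reference implementation. It handles the inequality-only case $Bx \le b$, assumes $Q$ is symmetric (so the gradient of the quadratic term is $Qx$), and tests complementarity element by element, which is equivalent to $\mu^{T}(Bx-b)=0$ once $\mu \ge 0$ and $Bx - b \le 0$ hold. The names `kkt_check`, `matvec` and `transpose` and the sample data are assumptions.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

def kkt_check(Q, q, B, b, x, mu, tol=1e-9):
    """Check the KKT conditions of  min q^T x + 1/2 x^T Q x  s.t.  B x <= b."""
    Qx = matvec(Q, x)
    Bt_mu = matvec(transpose(B), mu)
    # Condition 1: q + Q x + B^T mu = 0
    stationarity = [qi + qxi + bmi for qi, qxi, bmi in zip(q, Qx, Bt_mu)]
    # Condition 2: B x - b <= 0
    slack = [bxi - bi for bxi, bi in zip(matvec(B, x), b)]
    return {
        "stationary": all(abs(r) <= tol for r in stationarity),
        "primal_feasible": all(s <= tol for s in slack),
        "dual_feasible": all(m >= -tol for m in mu),
        # Condition 3, checked per constraint: mu_i * (B x - b)_i = 0
        "complementary": all(abs(m * s) <= tol for m, s in zip(mu, slack)),
    }

# Illustrative data (assumed): f = 3x1^2 + x2^2 + 2x1x2 + x1 + 6x2,
# subject to 2x1 + 3x2 >= 4, x1 >= 0, x2 >= 0, written as B x <= b.
Q = [[6.0, 2.0], [2.0, 2.0]]
q = [1.0, 6.0]
B = [[-2.0, -3.0], [-1.0, 0.0], [0.0, -1.0]]
b = [-4.0, 0.0, 0.0]

print(kkt_check(Q, q, B, b, x=[0.5, 1.0], mu=[3.0, 0.0, 0.0]))
print(kkt_check(Q, q, B, b, x=[2.0, 0.0], mu=[6.5, 0.0, -9.5]))
```

The first candidate passes all four checks. The second is stationary and feasible but has a negative multiplier, so it fails dual feasibility and is not a KKT point.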

Solution strategies

Because quadratic programming problems are a simple form of nonlinear problem, they can be solved in the same manner as other non-linear programming problems. An unconstrained quadratic programming problem is the most straightforward to solve: simply set the gradient of the objective function equal to zero and solve the resulting linear system.[7] More practical (constrained) formulations are more difficult to solve. The typical solution technique when the objective function is strictly convex and there are only equality constraints is the conjugate gradient method. If there are inequality constraints ($Bx \le b$), then the interior point and active set methods are the preferred solution methods. When there is a range on the allowable values of $x$ (in the form $x^L \le x \le x^U$), which is the case for image and signal processing applications, trust-region methods are most frequently used.[4] For all convex cases, an NLP solver in the optimization utility GAMS, such as KNITRO, MINOS, or CONOPT, can find solutions for quadratic programming problems.
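To make the simplest case concrete, the following sketch applies the conjugate gradient method to an unconstrained, strictly convex QP, where setting the gradient to zero means solving $Qx = -q$. It covers only the unconstrained core. Equality constraints would first have to be eliminated (for example by a null-space reduction), which is not shown. The function name, tolerance, and sample data are assumptions.

```python
def conjugate_gradient(Q, q, tol=1e-12, max_iter=None):
    """Minimize q^T x + 1/2 x^T Q x for a symmetric positive definite Q.

    This is equivalent to solving Q x = -q. Returns the minimizer as a list.
    """
    n = len(q)
    max_iter = max_iter or 10 * n
    x = [0.0] * n
    r = [-qi for qi in q]            # residual -q - Q x at the start x = 0
    p = r[:]                         # first search direction
    rs_old = sum(ri * ri for ri in r)
    for _ in range(max_iter):
        if rs_old <= tol:            # squared residual norm small enough
            break
        Qp = [sum(Q[i][j] * p[j] for j in range(n)) for i in range(n)]
        alpha = rs_old / sum(pi * qpi for pi, qpi in zip(p, Qp))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qpi for ri, qpi in zip(r, Qp)]
        rs_new = sum(ri * ri for ri in r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x

# Illustrative data (assumed): Q x = -q has the solution x = (1.25, -4.25).
x_min = conjugate_gradient([[6.0, 2.0], [2.0, 2.0]], [1.0, 6.0])
print(x_min)
```

In exact arithmetic the method terminates in at most $n$ iterations for an $n$-variable problem, so the two-variable example needs two steps.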

Numerical example

This example demonstrates how to determine the KKT point of a specific QP problem:

$$\min \quad f(x) = 3x_1^2 + x_2^2 + 2x_1x_2 + x_1 + 6x_2 + 2$$

$$\text{s.t.} \quad 2x_1 + 3x_2 \ge 4$$

$$x_1, x_2 \ge 0$$

Rewrite the constraints.

$$g_1 = -2x_1 - 3x_2 + 4 \le 0$$

$$g_2 = -x_1 \le 0$$

$$g_3 = -x_2 \le 0$$

Construct the gradients.

$$\nabla f = \begin{bmatrix} 6x_1 + 2x_2 + 1 \\ 2x_1 + 2x_2 + 6 \end{bmatrix}, \quad \nabla g_1 = \begin{bmatrix} -2 \\ -3 \end{bmatrix}, \quad \nabla g_2 = \begin{bmatrix} -1 \\ 0 \end{bmatrix}, \quad \nabla g_3 = \begin{bmatrix} 0 \\ -1 \end{bmatrix}$$

Assuming no constraint is active, set the gradient of the objective function equal to zero to attempt to find an optimum.

$$6x_1 + 2x_2 + 1 = 0$$

$$2x_1 + 2x_2 + 6 = 0$$

$$x_1 = \frac{5}{4}, \quad x_2 = -\frac{17}{4}$$

Make constraints $g_1$ and $g_3$, which are violated at this point, active. Set both equal to zero.

$$g_1 = -2x_1 - 3x_2 + 4 = 0$$

$$g_3 = -x_2 = 0$$

Solve the resulting system of equations.

$$\nabla f + \mu_1 \nabla g_1 + \mu_3 \nabla g_3 = 0$$

$$g_1 = 0$$

$$g_3 = 0$$

$$\begin{bmatrix} 6x_1 + 2x_2 + 1 \\ 2x_1 + 2x_2 + 6 \end{bmatrix} + \mu_1 \begin{bmatrix} -2 \\ -3 \end{bmatrix} + \mu_3 \begin{bmatrix} 0 \\ -1 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$$

$$-2x_1 - 3x_2 + 4 = 0$$

$$-x_2 = 0$$

Formulating the system as one matrix and row reducing is one of the simplest ways to solve it. Doing so yields:

$$x_1 = 2, \quad x_2 = 0, \quad \mu_1 = 6.5, \quad \mu_3 = -9.5$$

Drop constraint $g_3$ because $\mu_3$ is negative and re-solve the system. Doing so yields:

$$x_1 = 0.5, \quad x_2 = 1, \quad \mu_1 = 3$$

This gives an objective function value of $f = 11.25$.

Verify linear dependence of the gradients at this point:

$$\nabla f + \mu_1 \nabla g_1 = \begin{bmatrix} 6 \\ 9 \end{bmatrix} + 3 \begin{bmatrix} -2 \\ -3 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$$

Verify the uniqueness of the solution:

$$\nabla^2 f(x) = \begin{bmatrix} 6 & 2 \\ 2 & 2 \end{bmatrix} \rightarrow \det \begin{bmatrix} 6-\lambda & 2 \\ 2 & 2-\lambda \end{bmatrix} = 0 \rightarrow \lambda = 4 \pm 2\sqrt{2} \approx 1.172, \; 6.828$$

Because both eigenvalues are positive, the Hessian matrix is positive definite, and this local minimum is the global minimum of the objective function given these constraints.
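The hand calculation can be reproduced with a short script. The sketch below is one possible way to organize the steps, not a general active-set solver. It builds the linear system "stationarity plus active constraints equal to zero" for a chosen active set and solves it exactly with rational arithmetic. The helper names `solve` and `solve_active` and the dictionary layout are assumptions made for illustration.

```python
from fractions import Fraction

def solve(M, rhs):
    """Solve M z = rhs exactly by Gauss-Jordan elimination with Fractions."""
    n = len(rhs)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]

Q = [[6, 2], [2, 2]]                              # Hessian of f
q = [1, 6]                                        # linear coefficients of f
grad_g = {1: [-2, -3], 2: [-1, 0], 3: [0, -1]}    # constraint gradients
g_const = {1: 4, 2: 0, 3: 0}                      # g_k(x) = grad_g[k] . x + g_const[k]

def solve_active(active):
    """Solve grad f + sum_k mu_k grad g_k = 0 together with g_k = 0, k in active."""
    n, m = len(q), len(active)
    M, rhs = [], []
    for i in range(n):                            # stationarity rows
        M.append(Q[i] + [grad_g[k][i] for k in active])
        rhs.append(-q[i])
    for k in active:                              # active constraints g_k(x) = 0
        M.append(grad_g[k] + [0] * m)
        rhs.append(-g_const[k])
    z = solve(M, rhs)
    return z[:n], dict(zip(active, z[n:]))

print(solve_active([]))       # unconstrained point: x = (5/4, -17/4)
print(solve_active([1, 3]))   # x = (2, 0), mu_1 = 13/2, mu_3 = -19/2
print(solve_active([1]))      # x = (1/2, 1), mu_1 = 3
```

Exact fractions are used so the multipliers come out as $13/2$ and $-19/2$ instead of rounded decimals, which makes the sign of $\mu_3$ unambiguous.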

Applications

A few of the many quadratic programming applications are discussed in more detail below, each accompanied by a general model, followed by a list of other areas in which QP is important.

- Quadratic knapsack problem (QKP)

In the standard knapsack problem, there are a number of items with different weights and values, and the items are selected based on which combination yields the highest overall value without exceeding the overall weight limit of the knapsack.

In the quadratic knapsack problem, the objective function is quadratic or, more specifically, bilinear, and the constraints are the same as in the typical knapsack problem.[8] QKPs are used in designing email servers and to optimize the locations of "nodes" in applications such as positioning transportation hubs like airports and train stations.[8] Additionally, the problem can model situations in which a limited number of people are assigned to complete specific objectives that require them to interact.[9] One formulation is presented below, where $\mathbb{B}^{n}$ denotes the set of binary vectors of length $n$:[8]

$$\text{max or min} \quad x^{T}Qx$$

$$\text{s.t.} \quad Ax \le a$$

$$x \in \mathbb{B}^{n}$$

The quadratic knapsack problem, although it looks very simple, is NP-hard and is thus difficult to solve. To make obtaining solutions easier, these problems are often linearized.[8]
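For very small instances the formulation can be explored by plain enumeration, which also makes the exponential growth behind the NP-hardness tangible. The sketch below is illustrative only. It handles a single weight constraint (one row of $Ax \le a$), maximizes $x^{T}Px$, and uses a made-up profit matrix in which diagonal entries are item profits and each off-diagonal pair contributes twice its entry. The function name and all data are assumptions.

```python
from itertools import product

def solve_qkp_brute_force(P, weights, capacity):
    """Maximize x^T P x over binary x subject to weights . x <= capacity.

    Enumerates all 2^n selections, so it is only practical for small n.
    """
    n = len(weights)
    best_value, best_x = float("-inf"), None
    for x in product((0, 1), repeat=n):
        if sum(w * xi for w, xi in zip(weights, x)) > capacity:
            continue  # violates the knapsack constraint
        value = sum(P[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
        if value > best_value:
            best_value, best_x = value, x
    return best_value, best_x

# Hypothetical instance: three items, symmetric profit matrix.
P = [[10, 4, 0],
     [4, 6, 1],
     [0, 1, 8]]
weights = [4, 3, 5]
capacity = 8

print(solve_qkp_brute_force(P, weights, capacity))  # (24, (1, 1, 0))
```

Selecting items 1 and 2 uses weight 7 and earns $10 + 6 + 2 \cdot 4 = 24$. Each additional item doubles the number of selections to check, which is why linearization or specialized algorithms are used in practice.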

- Model predictive control

Quadratic programming also has important applications in chemical engineering. Model predictive control (MPC) is a group of algorithms that help manage production in chemical plants by dictating production in each batch. While often formulated as linear programs because the resulting models are more stable, more robust, and easier to solve, MPC models are sometimes made with quadratic programming.[11] As an example of its utility, quadratic programming was used by Di Ruscio in an MPC algorithm for a thermomechanical pulping process, which is a method used in making paper.[11]

- Least squares

Least squares regression is one of the most common types of regression, and works by minimizing the sum of the squares of the differences between data points and a proposed fit. Quadratic optimization is one method that can be used to perform a least squares regression and is more flexible than most linear methods. One formulation for a quadratic programming regression model is as follows:[3]

$$\min \quad e'Ie$$

$$\text{s.t.} \quad e = Y - X\beta$$

In this model, $\beta$ (the regression parameters) and $e$ (the error terms) are the unknowns, $I$ is an identity matrix, and $X$ and $Y$ contain data about the independent and dependent variables, respectively.[3]
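To see how this fits the general QP form, the constraint can be substituted into the objective. The short derivation below is a sketch that assumes there are no further constraints on $\beta$ and that $X'X$ is invertible:

$$e'Ie = (Y - X\beta)'(Y - X\beta) = \beta'X'X\beta - 2Y'X\beta + Y'Y$$

$$Q = 2X'X, \qquad q = -2X'Y \qquad (\text{the constant } Y'Y \text{ is dropped})$$

$$\nabla_{\beta} = 2X'X\beta - 2X'Y = 0 \quad \Rightarrow \quad X'X\beta = X'Y \quad \Rightarrow \quad \beta = (X'X)^{-1}X'Y$$

Without extra constraints the QP therefore reduces to the familiar normal equations. The added flexibility of the QP form comes from being able to append linear constraints on $\beta$.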

- Other applications

Quadratic programming is used in a wide range of applications not touched upon in the sample presented above. Other major areas in which QPs are relied upon include signal and image processing[12] and a subfield of optimization called partial differential equation (PDE) constrained optimization.[3] QPs are also extensively used in finance, as variance, which is used to measure risk, is a function containing squares.[13,14,15] More specifically, Markowitz won the 1990 Nobel Prize in Economics for his widely used model that employs quadratic programming to optimize the amount of risk taken on based on variances.[14]

Additionally, sequential quadratic programming, an algorithm for solving more complicated NLPs that uses QP subproblems, is one of the most important applications.

Conclusion

Quadratic programming, the problem of optimizing a quadratic function, has been widely used since its development in the 1950s because it is a simple type of non-linear programming that can accurately model many real-world systems, notably ones dependent on two variables. Problems formulated this way are straightforward to optimize when the objective function is convex. QP has applications in finance, various types of computer systems, statistics, chemical production, and in algorithms to solve more complex NLPs.

References

1. Frank, Marguerite, and Philip Wolfe. "An Algorithm for Quadratic Programming." Naval Research Logistics Quarterly 3 (1956): 95-110. Web. 4 June 2015.

2. Floudas, Christodoulos A., and V. Visweswaran. "Quadratic Optimization." Nonconvex Optimization and Its Applications, 2 (1995): 217-69. Web. 23 May 2015.

3. McCarl, Bruce A., Moskowitz, Herbert, and Harley Furtan. "Quadratic Programming Applications." The International Journal of Management Science, 5 (1977): 43-55.

4. Geletu, Abele. "Quadratic programming problems." Ilmenau University of Technology. Web. 23 May 2015.

5. Bradley, Hax, and Magnanti. Applied Mathematical Programming. Boston: Addison-Wesley, 1997. 421-40. Web. 23 May 2015.

6. Jensen, Paul A., and Jonathan F. Bard. "Quadratic Programming." Operations Research Models and Methods. The University of Texas at Austin. Web. 23 May 2015.

7. Binner, David. "Quadratic programming example - no constraints." Miscellaneous mathematical utilities. AKiTi. Web. 23 May 2015.

8. Pisinger, David. "The Quadratic Knapsack Problem – A Survey." Discrete Applied Mathematics, 155 (2007): 623-648. Web. 23 May 2015.

9. "Quadratic Multiple Knapsack Problem." Optimization of Complex System. Optiscom Project. 2012. Web. 6 June 2015.

10. Gallo, G., P. L. Hammer, and B. Simeone. "Quadratic Knapsack Problems." Mathematical Programming 12 (1980): 132-149. Web. 24 May 2015.

11. Di Ruscio, David. "Model Predictive Control and Optimization." Telemark University College. Mar. 2001. Web. 24 May 2015.

12. Ma, W. K. "Signal Processing Optimization Techniques." The Chinese University of Hong Kong. Web. 24 May 2015.

13. Tütüncü, Reha H. "Optimization in Finance." Tokyo Institute of Technology. Spring 2003. Web. 23 May 2015.

14. Van Slyke, R. "Portfolio Optimization." NYU Polytechnic School of Engineering. 16 Nov. 2007. Web. 24 May 2015.

15. "Portfolio Optimization." SAS/OR(R) 9.2 User's Guide: Mathematical Programming. SAS Institute. Web. 23 May 2015.