Optimization with absolute values: Difference between revisions

Yilian Yin (talk | contribs) |

Yilian Yin (talk | contribs) |

||

| Line 119: | Line 119: | ||

subject to <math>\sum_j\!x_j = 1</math> | subject to <math>\sum_j\!x_j = 1</math> | ||

<math> | <math>x_j \geq 0</math> <math> j = 1,2,..n.</math> | ||

where <math>\tilde{R}_j = \!R_j - \mathbb{E}\!R_j </math> | where <math>\tilde{R}_j = \!R_j - \mathbb{E}\!R_j </math> | ||

| Line 125: | Line 125: | ||

Very obviously, this problem is not a linear programming problem yet. So we have to use the same trick we used in the numeric example, to replace each absolute value with a new variable and impose inequality constraints to ensure that the new variable is the appropriate absolute value once an optimal value is obtained. To simplify the program, we decide to take the average of the historical returns in order to get the mean expected return: <math> | Very obviously, this problem is not a linear programming problem yet. So we have to use the same trick we used in the numeric example, to replace each absolute value with a new variable and impose inequality constraints to ensure that the new variable is the appropriate absolute value once an optimal value is obtained. To simplify the program, we decide to take the average of the historical returns in order to get the mean expected return: <math>r_j = \mathbb{E}\!R_j = \left ( \frac{1}{T} \right ) \sum_{t=1}^T \!R_j(t) | ||

</math>. Thus the objective function turns into: <math>\mu\sum_{j}\!x_j\!r_j - \left ( \frac{1}{T} \right ) \sum_{t=1}^T\left\vert \sum_{j} \!x_j \bigl(R_j (t) - \!r_j\bigr) \right\vert | |||

</math> | </math> | ||

Now, replace <math>\left\vert \sum_{j} \!x_j \bigl(R_j (t) - \!r_j\bigr) \right\vert | |||

</math> with a new variable <math>y_t | |||

</math>and thus the problem can be rewrote as: | |||

maximize <math>\mu \sum_j \!x_j\!r_j - \left ( \frac{1}{T} \right ) \sum_{t=1}^T \!y_t | |||

</math> | |||

subject to <math>-\!y_t \leq \sum_{j} \!x_j \bigl(R_j (t) - \!r_j\bigr) \leq y_t | |||

</math>. t = 1, 2,...,T | |||

where <math>\sum_j \!x_j = 1 | |||

</math> | |||

<math>x_j\geq 0 | |||

</math>. j = 1, 2,...,n | |||

<math>y_t \geq 0 | |||

</math>. t = 1, 2,...,T | |||

So finally, after some simplifications methods and some tricks applied, the original problem is converted into a linear programming which is easier to be solved further. | |||

Revision as of 14:59, 22 November 2020

Authors: Matthew Chan (mdc297), Yilian Yin (), Brian Amado (ba392), Peter (pmw99), Dewei Xiao (dx58) - SYSEN 5800 Fall 2020

Steward: Fengqi You

Introduction

Absolute values can make it relatively difficult to to determine the optimal solution when handled without first converting to standard form. This conversion of the objective function is a good first step in solving optimization problems with absolute values. As a result, you can go on to solve the problem using linear programing techniques.

Method

Defining Absolute Values

An absolute value of a real number can be described as its distance away from zero, or the non-negative magnitude of the number. Thus,

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |x| = \begin{cases} -x, & \text{if }x < 0 \\ x, & \text{if }x \ge 0 \end{cases} }

Absolute values can exist in optimization problems in two primary instances: in constraints and in the objective function.

Absolute Values in Constraints

Within linear equations, linear constraints can exist in several forms.

The first form exists as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |X| = 0 } , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle X } is a linear combination of variables.

In this case, the only solution is if Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |X| = 0 } , simplifying the constraint to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X = 0 } . Note that this solution also occurs if the constraint is in the form Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |X| <= 0 } due to the same conclusion (only solution Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X = 0 } ).

Second form a linear constraint can exist in is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |X| \le C }

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle X }

remains a linear combination of variables and constant Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle C > 0 }

.

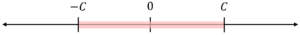

In this case, we can describe an equivalent feasible solution by splitting the inequality into

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X \le C }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -X \le C }

We can understand this visually as the solution Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle X } must lie between Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle -C } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle C } , as shown below:

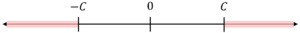

The last case for linear constraints is when Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |X| \ge C }

.

Visually, the solution space is the complement of the second solution above, resulting in the following representation:

In expression form, the solutions can be written as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X \ge C }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -X \ge C }

As seen visually, the feasible region has a gap and thus non-convex. The expressions also make it impossible for both to simultaneously hold true. This means that it is not possible to transform constraints in this form to linear equations. An approach to reach a solution for this particular case exists in the form of Mixed-Integer Linear Programming, where only one of the equations above is “active”.

Absolute Values in Objective Functions

WIP

Numerical Example

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min{|x_1| + 2|x_2| + |x_3|} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{align} \ s.t. x_1 + x_2 - x_3 \le 10 \\ x_1 - 3x_2 + 2x_3= 12 \end{align}}

We replace the absolute value quantities with a single variable:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |x_1| = U_1 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |x_2| = U_2}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |x_3| = U_3}

We must introduce additional constraints to ensure we do not lose any information by doing this substitution:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -U_1 \le x_1 \le U_1 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -U_2 \le x_2 \le U_2 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -U_3 \le x_3 \le U_3 }

The problem has now been reformulated as a linear programming problem that can be solved normally:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min{ U_1 + 2U_2 + U_3} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{align} \ s.t. x_1 + x_2 - x_3 \le 10 \\ x_1 - 3x_2 + 2x_3= 12 \end{align}}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -U_1 \le x_1 \le U_1 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -U_2 \le x_2 \le U_2 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -U_3 \le x_3 \le U_3 }

The optimum value for the objective function is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 6} , which occurs when Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_1 = 0 } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_2 = 0 } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_3 = 6 } .

Applications

There are no specific applications to Optimization with Absolute Values however it is necessary to account for at times when utilizing the simplex method.

Consider the problem Ax=b; max z= x c,jx,i. This problem cannot, in general, be solved with the simplex method. The problem has a simplex method solution (with unrestricted basis entry) only if c, are nonpositive (non-negative for minimizing problems).

The primary application of absolute-value functionals in linear programming has been for absolute-value or L(i)-metric regression analysis. Such application is always a minimization problem with all C(j) equal to 1 so that the required conditions for valid use of the simplex method are met.

By reformulating the original problem into a Mixed-Integer Linear Program (MILP), in most case we should be able to use GAMS/Pyomo/JuliaOPT to solve the problem.

WIP

3

- Application in Financial: Portfolio Selection

Under this topic, we are going to use the same tricks we played in the Numerical Example section to perform Reduction to a Linear Programming Problem, to reform the problem into a MILP, in order to solve the problem. Let's have a look of the example.

A portfolio is determined by what fraction of one's assets to put into each investment. It can be denoted as a collection of nonnegative numbers xj, where j = 1, 2,...,n. Because each xj stands for a portion of the assets, it sums to one. In order to get a highest reward through finding a right mix of assets, let Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu} , the positive parameter, denote the importance of risk relative to the return, and Rj denote the return in the next time period on investment j, j = 1, 2,..., n. The total return one would obtain from the investment is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle R = \sum_{j}\!x_j\!R_j } . The expected return is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathbb{E}\!R = \sum_{j}\!x_j\mathbb{E}\!R_j } . And the Mean Absolute Deviation from the Mean (MAD) is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathbb{E}\left\vert \!R - \mathbb{E}\!R \right\vert = \mathbb{E}\left\vert \sum_{j}\!x_j\tilde{R}_j \right\vert } .

maximize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\textstyle \mu\sum_j\!x_j\mathbb{E}\!R_j - \mathbb{E}\left\vert \sum_j \!x_j\tilde{R}_j \right\vert }

subject to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \sum_j\!x_j = 1}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_j \geq 0} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle j = 1,2,..n.}

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \tilde{R}_j = \!R_j - \mathbb{E}\!R_j }

Very obviously, this problem is not a linear programming problem yet. So we have to use the same trick we used in the numeric example, to replace each absolute value with a new variable and impose inequality constraints to ensure that the new variable is the appropriate absolute value once an optimal value is obtained. To simplify the program, we decide to take the average of the historical returns in order to get the mean expected return: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle r_j = \mathbb{E}\!R_j = \left ( \frac{1}{T} \right ) \sum_{t=1}^T \!R_j(t) } . Thus the objective function turns into: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu\sum_{j}\!x_j\!r_j - \left ( \frac{1}{T} \right ) \sum_{t=1}^T\left\vert \sum_{j} \!x_j \bigl(R_j (t) - \!r_j\bigr) \right\vert }

Now, replace Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \left\vert \sum_{j} \!x_j \bigl(R_j (t) - \!r_j\bigr) \right\vert } with a new variable Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y_t } and thus the problem can be rewrote as:

maximize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mu \sum_j \!x_j\!r_j - \left ( \frac{1}{T} \right ) \sum_{t=1}^T \!y_t }

subject to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\!y_t \leq \sum_{j} \!x_j \bigl(R_j (t) - \!r_j\bigr) \leq y_t } . t = 1, 2,...,T

where

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_j\geq 0 } . j = 1, 2,...,n

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y_t \geq 0 } . t = 1, 2,...,T

So finally, after some simplifications methods and some tricks applied, the original problem is converted into a linear programming which is easier to be solved further.

Let's see some other applications:

- Minimizing the sum of absolute deviations

- Minimizing the maximum of absolute values

Conclusion

The presence of an absolute value within the objective function prevents the use of certain optimization methods. Solving these problems requires that the function be manipulated in order to continue with linear programming techniques like the simplex method.

References

To be formatted:

- http://lpsolve.sourceforge.net/5.1/absolute.htm

- https://ocw.mit.edu/courses/sloan-school-of-management/15-053-optimization-methods-in-management-science-spring-2013/tutorials/MIT15_053S13_tut04.pdf

- https://www.ise.ncsu.edu/fuzzy-neural/wp-content/uploads/sites/9/2019/08/LP-Abs-Value.pdf

- Vanderbei R.J. (2008) Financial Applications. In: Linear Programming. International Series in Operations Research & Management Science, vol 114. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-74388-2_13