Markov decision process: Difference between revisions

| Line 1: | Line 1: | ||

Author: Eric Berg (eb645) | Author: Eric Berg (eb645), Fall 2020 | ||

= Introduction = | = Introduction = | ||

| Line 23: | Line 7: | ||

A Markov Decision process makes decisions using information about the system's current state, the actions being performed by the agent and the rewards earned based on states and actions. | A Markov Decision process makes decisions using information about the system's current state, the actions being performed by the agent and the rewards earned based on states and actions. | ||

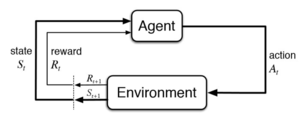

A Markov decision process is made up of multiple fundamental elements: the agent, states, a model, actions, rewards, and a policy. The agent is the object or system being controlled that has to make decisions and perform actions. The agent lives in an environment that can be described using states, which contain information about the agent and the environment. The model determines the rules of the world in which the agent lives, in other words, how certain states and actions lead to other states. The agent can perform a fixed set of actions in any given state. The agent receives rewards based on its current state. A policy is a function that determines the agent's next action based on its current state. | A Markov decision process is made up of multiple fundamental elements: the agent, states, a model, actions, rewards, and a policy. The agent is the object or system being controlled that has to make decisions and perform actions. The agent lives in an environment that can be described using states, which contain information about the agent and the environment. The model determines the rules of the world in which the agent lives, in other words, how certain states and actions lead to other states. The agent can perform a fixed set of actions in any given state. The agent receives rewards based on its current state. A policy is a function that determines the agent's next action based on its current state. [[File:Reinforcement Learning.png|thumb|Reinforcement Learning framework used in Markov Decision Processes]]'''MDP Framework:''' | ||

*<math>S</math> : States (<math>s \epsilon S</math>) | |||

*<math>A</math> : Actions (<math>a \epsilon A</math>) | |||

* <math>S</math> : States (<math>s \epsilon S</math>) | |||

* <math>A</math> : Actions (<math>a \epsilon A</math>) | |||

*<math>P(S_{t+1} | s_t, a_t)</math> : Model determining transition probabilities | *<math>P(S_{t+1} | s_t, a_t)</math> : Model determining transition probabilities | ||

* <math>R(s)</math>: Reward<br /> | *<math>R(s)</math>: Reward<br /> | ||

In order to understand how the Markov Decision Process works, first the Markov Property must be defined. The Markov Property states that the future is independent of the past given the present. In other words, only the present in needed to determine the future, since the present contains all necessary information from the past. The Markov Property can be described in mathematical terms below: | In order to understand how the Markov Decision Process works, first the Markov Property must be defined. The Markov Property states that the future is independent of the past given the present. In other words, only the present in needed to determine the future, since the present contains all necessary information from the past. The Markov Property can be described in mathematical terms below: | ||

<math display="inline">P[S_{t+1} | S_t] = P[S_{t+1} | S_1, S_2, S_3... S_t]</math> | <math display="inline">P[S_{t+1} | S_t] = P[S_{t+1} | S_1, S_2, S_3... S_t]</math> | ||

The above notation conveys that the probability of the next state given the current state is equal to the probability of the next state given all previous states. The Markov Property is relevant to the Markov Decision Process because only the current state is inputted into the policy function to determine the next action, the previous states and actions are not needed | The above notation conveys that the probability of the next state given the current state is equal to the probability of the next state given all previous states. The Markov Property is relevant to the Markov Decision Process because only the current state is inputted into the policy function to determine the next action, the previous states and actions are not needed. | ||

'''The Policy and Value Function''' | '''The Policy and Value Function''' | ||

| Line 72: | Line 51: | ||

</math> | </math> | ||

The value function can solved iteratively using iterative methods such as dynamic programming, Monte-Carlo evaluations, or temporal-difference learning. | The value function can solved iteratively using iterative methods such as dynamic programming, Monte-Carlo evaluations, or temporal-difference learning. | ||

The optimal policy is one that chooses the action with the largest value given the current state: | The optimal policy is one that chooses the action with the largest value given the current state: | ||

| Line 79: | Line 58: | ||

</math> | </math> | ||

The policy is a function of the current state, meaning at each time step a new policy is calculated considering the present information. | <math>\Pi(s) = max_{\Pi} V_{\Pi}(s) | ||

</math> | |||

The policy is a function of the current state, meaning at each time step a new policy is calculated considering the present information. The optimal value function can be solved using methods such as value iteration, policy iteration, or linear programming. | |||

'''The Algorithm''' | '''The Algorithm''' | ||

| Line 93: | Line 75: | ||

</math> | </math> | ||

= Numerical Example = | = Numerical Example = | ||

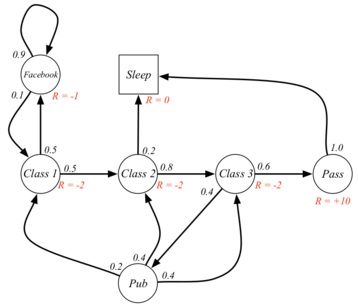

[[File:Image2.png|thumb|A Markov Decision Process describing a college student's hypothetical situation.|358x358px]] | |||

As an example, the Markov decision process can be applied to a college student, depicted to the right. In this case, the agent would be the student. The states would be the circles and squares in the diagram, and the arrows would be the actions. The actions between class states would be "study". In the state that the student is in Class 2, the allowable actions are to study or sleep. The model in this case would assign probabilities to each state given the previous state and action. These probabilities are written next to the arrows. Finally the rewards associated with each state are written in red. | |||

Assume the first state is Class 1, <math>\gamma | |||

</math> =1. | |||

First, the value functions must be calculated for each state. | |||

<math>V(s) = R(s) + \gamma \sum_{s' \epsilon S}P_{ss'}V(s') | |||

</math> | |||

<math>V(Pass) = 10 + (1)(1.0*0)=10 | |||

</math> | |||

<math>V(Class 3) = -2 + 1() \sum_{s' \epsilon S}P_{ss'}V(s') | |||

</math> | |||

Then, in state 1 (Class 1), the optimal policy is that the action chosen should result in the state that generates the highest value function. | |||

<math>\Pi(s) = max_a [R(s,a) + \gamma \sum_{s' \epsilon S}P_{ss'}^aV(s')] | |||

</math> | |||

<math>\Pi(Class 1) = max_a [-2+2.7,-1-2.3] = max_a [0.7,-3.3] =0.7 | |||

</math> | |||

a = study and go to Class 2 | |||

Therefore, the optimal policy in state Class 1 is to go to Class 2 | |||

= Applications = | = Applications = | ||

| Line 103: | Line 112: | ||

= Conclusion = | = Conclusion = | ||

A Markov Decision Process is a stochastic, sequential decision-making method based on the Markov Property. | A Markov Decision Process is a stochastic, sequential decision-making method based on the Markov Property. This process is fundamental in reinforcement learning applications and a core method for developing artificially intelligent systems. MDPs have been applied to various industries such as controlling robots and other automated systems, and even fields like economics. | ||

= References = | = References = | ||

<references /> | <references /> | ||

# Abbeel, P. (n.d.). ''Markov Decision Processes and Exact Solution Methods:'' 34. | |||

# Ashraf, M. (2018, April 11). ''Reinforcement Learning Demystified: Markov Decision Processes (Part 1)''. Medium. <nowiki>https://towardsdatascience.com/reinforcement-learning-demystified-markov-decision-processes-part-1-bf00dda41690</nowiki> | |||

# Bertsekas, D. P. (n.d.). ''Dynamic Programming and Optimal Control 3rd Edition, Volume II''. 233. | |||

# Littman, M. L. (2001). Markov Decision Processes. In N. J. Smelser & P. B. Baltes (Eds.), ''International Encyclopedia of the Social & Behavioral Sciences'' (pp. 9240–9242). Pergamon. <nowiki>https://doi.org/10.1016/B0-08-043076-7/00614-8</nowiki> | |||

# Platt, R. (n.d.). ''Markov Decision Processes''. 66. | |||

# Roberts, J. (n.d.). ''Markov Decision Processes''. 24. | |||

# Silver, D. (n.d.). Lecture 2: Markov Decision Processes. ''Markov Processes'', 57. | |||

<span title="url_ver=Z39.88-2004&ctx_ver=Z39.88-2004&rfr_id=info%3Asid%2Fzotero.org%3A2&rft_val_fmt=info%3Aofi%2Ffmt%3Akev%3Amtx%3Ajournal&rft.genre=article&rft.atitle=Lecture%202%3A%20Markov%20Decision%20Processes&rft.jtitle=Markov%20Processes&rft.aufirst=David&rft.aulast=Silver&rft.au=David%20Silver&rft.pages=57&rft.language=en" class="Z3988"></span> | <span title="url_ver=Z39.88-2004&ctx_ver=Z39.88-2004&rfr_id=info%3Asid%2Fzotero.org%3A2&rft_val_fmt=info%3Aofi%2Ffmt%3Akev%3Amtx%3Ajournal&rft.genre=article&rft.atitle=Lecture%202%3A%20Markov%20Decision%20Processes&rft.jtitle=Markov%20Processes&rft.aufirst=David&rft.aulast=Silver&rft.au=David%20Silver&rft.pages=57&rft.language=en" class="Z3988"></span> | ||

Revision as of 03:01, 29 November 2020

Author: Eric Berg (eb645), Fall 2020

Introduction

A Markov Decision Process (MDP) is a decision making method that takes into account information from the environment, actions performed by the agent, and rewards in order to decide the optimal next action. MDP works in discrete time, meaning at each point in time the decision process is carried out. The name Markov refers to the Russian mathematician Andrey Markov, since the Markov Decision Process is based on the Markov Property. MDPs are often used as control schemes in machine learning applications. Machine learning can be divided into three main categories: unsupervised learning, supervised learning, and reinforcement learning. The Markov decision process is used as a method for decision making in the reinforcement learning category. MDPs are the basis by which the machine makes decisions and "learns" how to behave in order to achieve its goal.

Theory and Methodology

A Markov Decision process makes decisions using information about the system's current state, the actions being performed by the agent and the rewards earned based on states and actions.

A Markov decision process is made up of multiple fundamental elements: the agent, states, a model, actions, rewards, and a policy. The agent is the object or system being controlled that has to make decisions and perform actions. The agent lives in an environment that can be described using states, which contain information about the agent and the environment. The model determines the rules of the world in which the agent lives, in other words, how certain states and actions lead to other states. The agent can perform a fixed set of actions in any given state. The agent receives rewards based on its current state. A policy is a function that determines the agent's next action based on its current state.

MDP Framework:

- : States (Failed to parse (Conversion error. Server ("https://wikimedia.org/api/rest_") reported: "Cannot get mml. Server problem."): {\displaystyle s\epsilon S} )

- : Actions (Failed to parse (Conversion error. Server ("https://wikimedia.org/api/rest_") reported: "Cannot get mml. Server problem."): {\displaystyle a\epsilon A} )

- Failed to parse (Conversion error. Server ("https://wikimedia.org/api/rest_") reported: "Cannot get mml. Server problem."): {\displaystyle P(S_{t+1}|s_{t},a_{t})} : Model determining transition probabilities

- : Reward

In order to understand how the Markov Decision Process works, first the Markov Property must be defined. The Markov Property states that the future is independent of the past given the present. In other words, only the present in needed to determine the future, since the present contains all necessary information from the past. The Markov Property can be described in mathematical terms below:

Failed to parse (Conversion error. Server ("https://wikimedia.org/api/rest_") reported: "Cannot get mml. Server problem."): {\textstyle P[S_{t+1}|S_{t}]=P[S_{t+1}|S_{1},S_{2},S_{3}...S_{t}]}

The above notation conveys that the probability of the next state given the current state is equal to the probability of the next state given all previous states. The Markov Property is relevant to the Markov Decision Process because only the current state is inputted into the policy function to determine the next action, the previous states and actions are not needed.

The Policy and Value Function

The policy, , is a function that maps actions to states. The policy determines which is the optimal action given the current state to achieve maximize reward.

There are various methods that can be used for finding the best policy. Each method tries to maximize rewards in some way, but differs in which accumulation of rewards should be maximized. The first method is to choose the action that maximizes the expected reward given the current state. This is the myopic method, which weighs each time-step decision equally. Next is the finite-horizon method, which tries to maximize the accumulated reward over a fixed number of time steps. But because many applications may have infinite horizons, meaning the agent will always have to make decisions and continuously try to maximize its reward, another method is commonly used, known as the infinite-horizon method. In the infinite-horizon method, the goal is to maximize the expected sum of rewards over all steps in the future. The problem becomes when performing an infinite sum of rewards that are all weighed equally, the results may not converge and the policy algorithm may get stuck in a loop. In order to avoid this, and to be able prioritize short-term or long term-rewards, a discount factor, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \gamma } , is added. If Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \gamma } is closer to 0, the policy will choose actions that prioritize more immediate rewards, and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \gamma } is closer to 1, long-term rewards are prioritized.

- Myopic: Maximize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E[ r_t | \Pi , s_t ] } , maximize expected reward for each state

- Finite-horizon: Maximize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E[ \textstyle \sum_{t=0}^k \displaystyle r_t | \Pi , s_t ] }

- Discounted Infinite-horizon: Maximize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle E[ \textstyle \sum_{t=0}^\infty \displaystyle \gamma^t r_t | \Pi , s_t ] } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \gamma \epsilon [0,1] }

Most commonly, the discounted infinite horizon method is used to determine the best policy. The value function, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(s) } , is the sum of discounted rewards.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(s) = E[ \sum_{t=0}^\infty \gamma^t r_t | s_t ] }

Using the Bellman Equation, the value function can be decomposed into two parts, the immediate reward of the current state, and the discounted reward of the next state.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(s) = E[ r_{t+1} + \gamma v(s_{t+1}) | s_t] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(s) = R(s) + \gamma \sum_{s' \epsilon S}P_{ss'}V(s') }

The value function can solved iteratively using iterative methods such as dynamic programming, Monte-Carlo evaluations, or temporal-difference learning.

The optimal policy is one that chooses the action with the largest value given the current state:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Pi(s) = max_a [R(s,a) + \gamma \sum_{s' \epsilon S}P_{ss'}^aV(s')] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Pi(s) = max_{\Pi} V_{\Pi}(s) }

The policy is a function of the current state, meaning at each time step a new policy is calculated considering the present information. The optimal value function can be solved using methods such as value iteration, policy iteration, or linear programming.

The Algorithm

At each step, given state Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s_t }

- Update Value Function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(s) }

- Update Policy Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Pi(s) } and perform action Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a }

Numerical Example

As an example, the Markov decision process can be applied to a college student, depicted to the right. In this case, the agent would be the student. The states would be the circles and squares in the diagram, and the arrows would be the actions. The actions between class states would be "study". In the state that the student is in Class 2, the allowable actions are to study or sleep. The model in this case would assign probabilities to each state given the previous state and action. These probabilities are written next to the arrows. Finally the rewards associated with each state are written in red.

Assume the first state is Class 1, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \gamma } =1.

First, the value functions must be calculated for each state.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(s) = R(s) + \gamma \sum_{s' \epsilon S}P_{ss'}V(s') }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(Pass) = 10 + (1)(1.0*0)=10 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle V(Class 3) = -2 + 1() \sum_{s' \epsilon S}P_{ss'}V(s') }

Then, in state 1 (Class 1), the optimal policy is that the action chosen should result in the state that generates the highest value function.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Pi(s) = max_a [R(s,a) + \gamma \sum_{s' \epsilon S}P_{ss'}^aV(s')] }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Pi(Class 1) = max_a [-2+2.7,-1-2.3] = max_a [0.7,-3.3] =0.7 }

a = study and go to Class 2

Therefore, the optimal policy in state Class 1 is to go to Class 2

Applications

Markov decision Processes have been used widely within reinforcement learning to teach robots or other computer-based systems how to do something they were previously were unable to do. For example, Markov decision processes have been used to teach a computer how to play computer games like Pong, Pacman, or AlphaGo. MDPs have been used to teach a simulated robot how to walk and run. MDPs are often applied fields such as robotics, automated systems, manufacturing, and economics.

Conclusion

A Markov Decision Process is a stochastic, sequential decision-making method based on the Markov Property. This process is fundamental in reinforcement learning applications and a core method for developing artificially intelligent systems. MDPs have been applied to various industries such as controlling robots and other automated systems, and even fields like economics.

References

- Abbeel, P. (n.d.). Markov Decision Processes and Exact Solution Methods: 34.

- Ashraf, M. (2018, April 11). Reinforcement Learning Demystified: Markov Decision Processes (Part 1). Medium. https://towardsdatascience.com/reinforcement-learning-demystified-markov-decision-processes-part-1-bf00dda41690

- Bertsekas, D. P. (n.d.). Dynamic Programming and Optimal Control 3rd Edition, Volume II. 233.

- Littman, M. L. (2001). Markov Decision Processes. In N. J. Smelser & P. B. Baltes (Eds.), International Encyclopedia of the Social & Behavioral Sciences (pp. 9240–9242). Pergamon. https://doi.org/10.1016/B0-08-043076-7/00614-8

- Platt, R. (n.d.). Markov Decision Processes. 66.

- Roberts, J. (n.d.). Markov Decision Processes. 24.

- Silver, D. (n.d.). Lecture 2: Markov Decision Processes. Markov Processes, 57.