Subgradient optimization

This web page is a duplicate of https://optimization.mccormick.northwestern.edu/index.php/Subgradient_optimization

Author Name: Aaron Anderson (ChE 345 Spring 2015)

Steward: Dajun Yue and Fengqi You

Subgradient Optimization (or Subgradient Method) is an iterative algorithm for minimizing convex functions, used predominantly in Nondifferentiable optimization for functions that are convex but nondifferentiable. It is often slower than Newton's Method when applied to convex differentiable functions, but can be used on convex nondifferentiable functions where Newton's Method will not converge. It was first developed by Naum Z. Shor in the Soviet Union in the 1960's.

Introduction

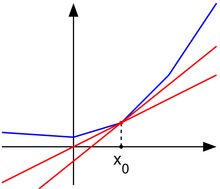

The Subgradient (related to Subderivative and Subdifferential) of a function is a way of generalizing or approximating the derivative of a convex function at nondifferentiable points. The definition of a subgradient is as follows: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g}

is a subgradient of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x}

if, for all Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y}

, the following is true:

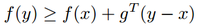

An example of the subgradient of a nondifferentiable convex function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} can be seen below:

Where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g_1} is a subgradient at point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_1} and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g_2} and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g_3} are subgradients at point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_2} . Notice that when the function is differentiable, such as at point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_1} , the subgradient, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g_1} , just becomes the gradient to the function. Other important factors of the subgradient to note are that the subgradient gives a linear global underestimator of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} and if Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} is convex, then there is at least one subgradient at every point in its domain. The set of all subgradients at a certain point is called the subdifferential, and is written as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \partial f(x_0)} at point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_0} .

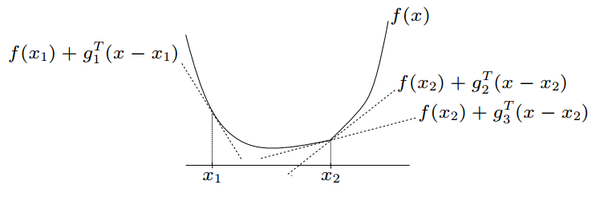

The Subgradient Method

Suppose Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f:\mathbb{R}^n \to \mathbb{R}}

is a convex function with domain Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathbb{R}^n}

. To minimize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

the subgradient method uses the iteration:

Where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

is the number of iterations, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{(k)}}

is the Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

th iterate, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g^{(x)}}

is any subgradient at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{(k)}}

, and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (> 0)}

is the Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

th step size. Thus, at each iteration of the subgradient method, we take a step in the direction of a negative subgradient. As explained above, when Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

is differentiable, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g^{(k)}}

simply reduces to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x^{(k)})}

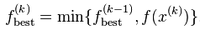

. It is also important to note that the subgradient method is not a descent method in that the new iterate is not always the best iterate. Thus we need some way to keep track of the best solution found so far, i.e. the one with the smallest function value. We can do this by, after each step, setting

and setting Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle i_{\text{best}}^{(k)} = k} if Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^{(k)}} is the best (smallest) point found so far. Thus we have:

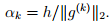

which gives the best objective value found in Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

iterations. Since this value is decreasing, it has a limit (which can be Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\infty}

).

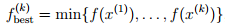

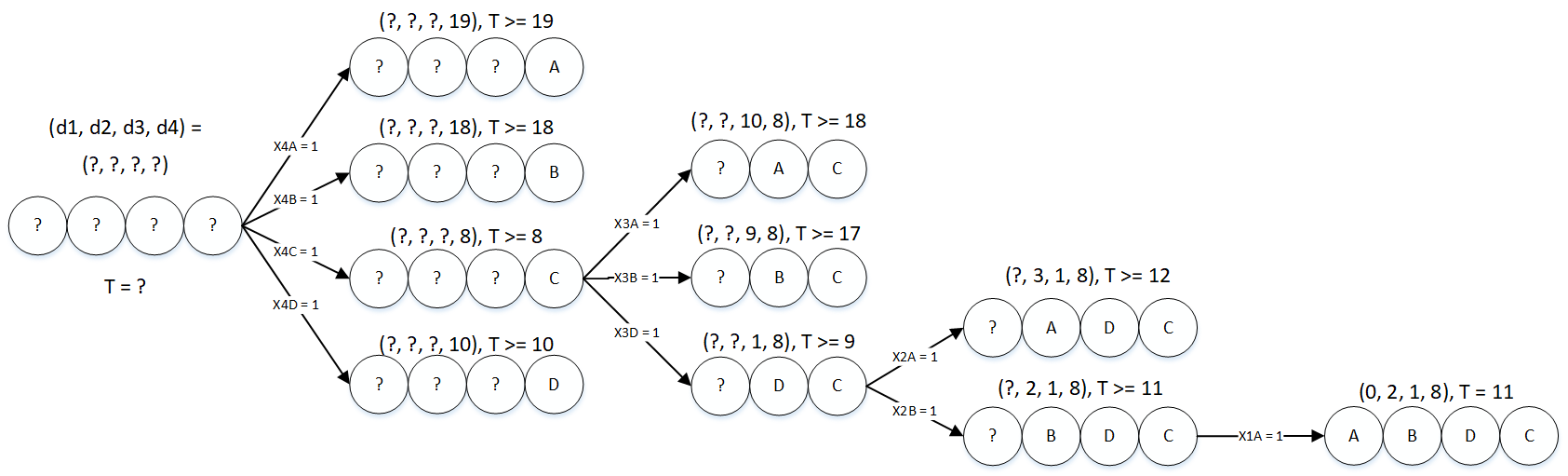

An algorithm flowchart is provided below for the subgradient method:

Step size

Several different step size rules can be used:

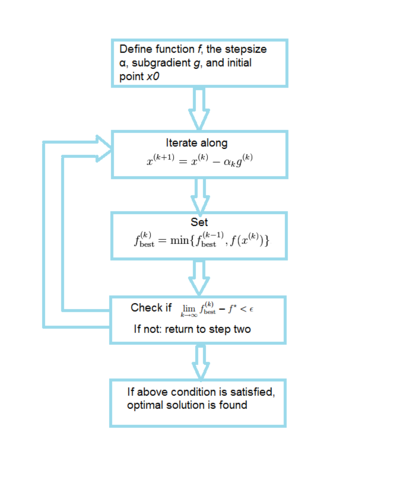

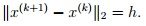

- Constant step size: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k = h} independent of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k} .

- Constant step length:

This means that

This means that

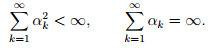

- Square summable but not summable: These step sizes satisfy

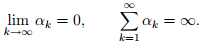

- One typical example is

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a>0}

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle b\ge0}

.

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a>0}

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle b\ge0}

.

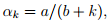

- Nonsummable diminishing: These step sizes satisfy

- One typical example is

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a>0}

.

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a>0}

.

An important thing to note is that for all four of the rules given here, the step sizes are determined "off-line", or before the method is iterated. Thus the step sizes do not depend on preceding iterations. This "off-line" property of subgradient methods differs from the "on-line" step size rules used for descent methods for differentiable functions where the step sizes do depend on preceding iterations.

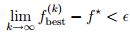

Convergence Results

There are different results on convergence for the subgradient method depending on the different step size rules applied. For constant step size rules and constant step length rules the subgradient method is guaranteed to converge within some range of the optimal value. Thus:

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f^{*}}

is the optimal solution to the problem and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon}

is the aforementioned range of convergence. This means that the subgradient method finds a point within Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon}

of the optimal solution Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f^{*}}

. Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon}

is number that is a function of the step size parameter Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

, and as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

decreases the range of convergence Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon}

also decreases, i.e. the solution of the subgradient method gets closer to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f^{*}}

with a smaller step size parameter Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

.

For the diminishing step size rule and the square summable but not summable rule, the algorithm is guaranteed to converge to the optimal value or ![]() When the function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

is differentiable the subgradient method with constant step size yields convergence to the optimal value, provided the parameter Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

is small enough.

When the function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

is differentiable the subgradient method with constant step size yields convergence to the optimal value, provided the parameter Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

is small enough.

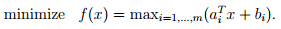

Example: Piecewise linear minimization

Suppose we wanted to minimize the following piecewise linear convex function using the subgradient method:

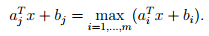

Since this is a linear programming problem finding a subgradient is simple: given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x} we can find an index Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle j} for which:

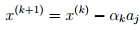

The subgradient in this case is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g=a_j} . Thus the iterative update is then:

Where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle j}

is chosen such to satisfy  In order to apply the subgradient method to this problem all that is needed is some way to calculate

In order to apply the subgradient method to this problem all that is needed is some way to calculate ![]() and the ability to carry out the iterative update. Even if the problem is dense and very large (where standard linear programming might fail), if there is some efficient way to calculate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

then the subgradient method is a reasonable choice for algorithm.

Consider a problem with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle n=10}

variables and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle m=100}

terms and with data Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a_i}

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle b_i}

generated from a normal distribution. We will consider all four of the step size rules mentioned above and will plot Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon}

or the difference between the optimal solution and the subgradient solution as a function of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

, the nuber of iterations.

and the ability to carry out the iterative update. Even if the problem is dense and very large (where standard linear programming might fail), if there is some efficient way to calculate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f}

then the subgradient method is a reasonable choice for algorithm.

Consider a problem with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle n=10}

variables and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle m=100}

terms and with data Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a_i}

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle b_i}

generated from a normal distribution. We will consider all four of the step size rules mentioned above and will plot Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon}

or the difference between the optimal solution and the subgradient solution as a function of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

, the nuber of iterations.

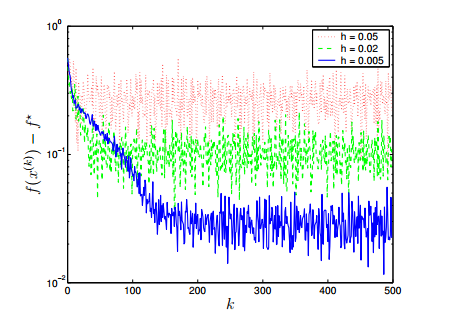

For the constant step size rule File:Example6.png for several values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

the following plot was obtained:

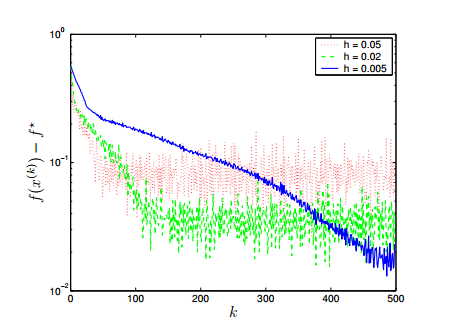

For the constant step length rule ![]() for several values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

the following plot was obtained:

for several values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

the following plot was obtained:

The above figures reveal a trade-off: a larger step size parameter Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

gives a faster convergence but in the end gives a larger range of suboptimality so it is important to determine an Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

that will converge close to the optimal solution without taking a very large number of iterations.

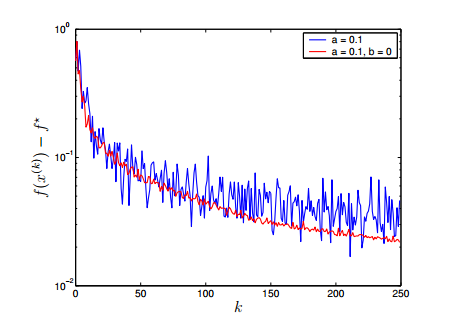

For the subgradient method using diminishing step size rules, both the nonsummable diminishing step size rule ![]() (blue) and the square summable but not summable step size rule

(blue) and the square summable but not summable step size rule ![]() (red) are plotted below for convergence:

(red) are plotted below for convergence:

This figure illustrates that both the nonsummable diminishing step size rule and the square summable but not summable step size rule show relatively fast and good convergence. The square summable but not summable step size rule shows less variation than the nonsummable diminishing step size rule but both show similar speed and convergence.

Overall, all four step size rules can be used to get good convergence, so it is important to try different values for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h}

in the constant step size and length rules and different formulas for the nonsummable diminishing step size rule and the square summable but not summable step size rule in order to get good convergence in the smallest amount of iterations possible.

Conclusion

The subgradient method is a very simple algorithm for minimizing convex nondifferentiable functions where newton's method and simple linear programming will not work. While the subgradient method has a disadvantage in that it can be much slower than interior-point methods such as Newton's method, it as the advantage of the memory requirement being often times much smaller than those of an interior-point or Newton method, which means it can be used for extremely large problems for which interior-point or Newton methods cannot be used. Morever, by combining the subgradient method with primal or dual decomposition techniques, it is sometimes possible to develop a simple distributed algorithm for a problem. The subgradient method is therefor an important method to know about for solving convex minimization problems that are nondifferentiable or very large.

References

1. Akgul, M. "Topics in Relaxation and Ellipsoidal Methods", volume 97 of Research Notes in Mathematics. Pitman, 1984.

2. Bazaraa, M. S., Sherali, H. D. "On the choice of step size in subgradient optimization." European Journal of Operational Research 7.4, 1981

3. Bertsekas, D. P. "Nonlinear Programming", (2nd edition), Athena Scientific, Belmont, MA, 1999.

4. Goffin, J. L. "On convergence rates of subgradient optimization methods." Mathematical Programming 13.1, 1977.

5. Shor, N. Z. "Minimization Methods for Non-differentiable Functions". Springer Series in Computational Mathematics. Springer, 1985.

6. Shor, N. Z. "Nondifferentiable Optimization and Polynomial Problems". Nonconvex Optimization and its Applications. Kluwer, 1998.