Interior-point method for NLP: Difference between revisions

Apoorv12345 (talk | contribs) mNo edit summary |

Apoorv12345 (talk | contribs) No edit summary |

||

| (116 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

== Introduction == | == Introduction == | ||

Interior point (IP) methods are used to solve all kind of problems from linear to non-linear, from convex to non convex. The first known IP method is Frisch's (1955) logarithmic barrier method that was later extensively studied by Fiacco and McCormick | Interior point (IP) methods are used to solve all kind of problems from linear to non-linear, from convex to non convex. The first known IP method is Frisch's (1955) logarithmic barrier method that was later extensively studied by Fiacco and McCormick <ref>Mevludin Glavic, Louis Wehenkel. Interior Point Methods: A Survey, Short Survey of Applications to Power Systems, and Research Opportunities. (2004)</ref>. However, these methods mainly emerged in the late 1970's and 1980s. They were developed as algorithms to solve linear programming(LP) problems with a better worst case complexity than the simplex method <ref>Martin Scmidt. An Interior Point Method for Nonlinear Optimization problems with locatable and separable non smoothness.</ref>. In 1984, Karmarkar used IP methods to develop 'A new polynomial-Time method for Linear Programming' <ref>Narendra Karmarkar. “A New Polynomial-Time Algorithm for Linear Programming.” In: Combinatorica 4.4 (1984), pp. 373–395. issn: 0209-9683. doi:10.1007/BF02579150.</ref>. The main advantage of this method was better practical performance than the earlier algorithms like the ellipsoid method by Khachiyan <ref>Leonid Genrikhovich Khachiyan. “A polynomial algorithm in linear program- ming.” In: Soviet Mathematics Doklady 20 (1979), pp. 191–194.</ref>. After Karmarkar's publication more intensive research in this field led to development of a new category of IP methods known as 'primal-dual' methods. Currently, most powerful type of primal-dual codes are Ipopt, KNITRO and LOQO <ref>Martin Scmidt. An Interior Point Method for Nonlinear Optimization problems with locatable and separable non smoothness.</ref>. In recent years the majority of research in the field of IPs is for nonlinear optimisation problems, especially for second order (referred as SOCO) and semidefinite optimisation ( referred as SDO). SDO has wide range of applications in combinatorial optimization, control, structural engineering and electrical engineering <ref>Bomze, Immanuel M., et al.''Nonlinear Optimization'', Springer-Verlag Berlin Heidelberg, 2010.</ref>. | ||

== Theory & Methodology == | == Theory & Methodology == | ||

=== Nonlinear Constrained Optimisation === | === Nonlinear Constrained Optimisation === | ||

General form for any nonlinear constrained optimization problem can be depicted as shown in Equation set 1: | |||

<math>min f(x) | |||

</math> | |||

<math> s.t. c(x) = 0</math> | |||

<math> x \geqslant 0 </math> | |||

* The objective function and constraints can be any linear, quadratic or any general nonlinear functions. | * The objective function and constraints can be any linear, quadratic or any general nonlinear functions. | ||

* Typically, applied for convex | * Typically, applied for convex optimization problems | ||

* Convert maximisation problems to minimisation problems by taking negative of the objective function | * Convert maximisation problems to minimisation problems by taking negative of the objective function | ||

* Convert any general inequalities to simple inequalities using slack variables. | * Convert any general inequalities to simple inequalities using slack variables. | ||

=== Slack variables === | === Slack variables === | ||

Consider a general equation of the form as shown in Equation set 2: | |||

Consider a general equation of the form as shown in Equation set 2 | |||

<math>min f(x) | |||

</math> | |||

<math>g(x) | <math> s.t. g(x) \geqslant b </math> | ||

<math> h(x) = 0</math> | |||

<math> x \geqslant 0 </math> | |||

Now we need to convert this form to the form as shown in Equation set 1. For this the inequality '<math>g(x) \geqslant b</math> ' can be written <math>g(x)-b\geq0</math> which can be further written as: | |||

<math>g(x)-b-s=0</math> , along with <math>s\geq0</math> | |||

Thus the set of equation '<math>g(x)-b-s=0</math>' and '<math>h(x)=0</math>' become '<math>c(x)=0</math>' as shown in Equation set 1. | |||

=== Barrier function === | === Barrier function === | ||

Once the given problem is converted to the standard form (as shown in Equation set 1), we will now try to remove the inequality constraint <math> x \geqslant 0 </math> using the barrier term. We will introduce natural log barrier term for inequality constraints as shown in Equation set 3: | |||

Once the given problem is converted to the standard form (as shown in Equation set 1), we will now try to remove the inequality constraint | |||

<math>\min f(x) - \mu\textstyle \sum_{i} \displaystyle ln(x_i) | |||

</math> | |||

<math> s.t. c(x) = 0 </math> | |||

The point <math> \displaystyle ln(x_i) </math> is not defined for <math> x _i \leqslant 0 </math> . Our goal is to solve this barrier problem for a given <math> '\mu\textstyle' </math>, then decrease this <math> '\mu\textstyle' </math> and resolve the problem. If <math> '\mu\textstyle' </math> is decreased at the right rate and the approximate solutions are good enough then this method will converge to an optimal solution of the non linear optimization problem. We want to only search in the interior feasible space. | |||

==== Example for barrier function: ==== | ==== Example for barrier function: ==== | ||

Following example shows how barrier term can be incorporated into the objective function: | |||

<math>min (x-4)^2 - \mu\textstyle \displaystyle ln(x) </math> | |||

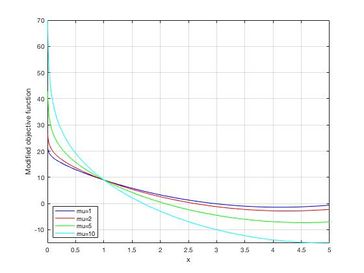

For different values of <math> '\mu\textstyle' </math> , the value of objective function changes and thus as the value of <math> '\mu\textstyle' </math> is decreased from '10' to '1' (as shown in Figure 1) , we approach towards the optimal solution i.e. <math> x = 4 </math>. | |||

[[File:Barrierfunctionexample.jpg|left|thumb|364x364px|'''Figure 1''': Variation of given objective function for different values of 'μ']] | [[File:Barrierfunctionexample.jpg|left|thumb|364x364px|'''Figure 1''': Variation of given objective function for different values of 'μ']] | ||

| Line 39: | Line 62: | ||

After we know the basics described above, the following methodology adopted from <ref>A. Wächter and L. T. Biegler, On the Implementation of an Interior-Point Filter Line-Search Algorithm for Large-Scale Nonlinear Programming, Mathematical Programming 106(1), pp. 25-57, 2006.</ref> can be used to solve the non-linear optimization problem. | |||

=== KKT Condition for Barrier Problem === | === KKT Condition for Barrier Problem === | ||

KKT conditions for the barrier problem of the form | KKT conditions for the barrier problem of the form: | ||

<math>\min f(x) - \mu\textstyle \sum_{i=1}^n \displaystyle ln(x_i)</math> <math>\Rightarrow </math> <math>\nabla f(x) + \nabla c(x)\lambda- \mu\textstyle \sum_{i=1}^n \displaystyle \tfrac{1}{x_i}</math> | |||

<math>s.t. c(x) = 0</math> <math> </math> <math>c(x) = 0</math> | |||

We can define now define <math>z_i = \tfrac{\mu}{x_i} </math> and solve the modified version of the KKT conditions: | |||

<math>\nabla f(x) + \nabla c(x)\lambda - z = 0 | |||

</math> | |||

<math>c(x) = 0</math> | |||

<math>XZe - \mu e = 0</math> . | |||

The matrix <math>'e' </math> is simply a column vector of ones i.e. <math>e = \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} </math> to satisfy the rules of matrix operations. | |||

We can | === KKT solution with Newton-Raphson method === | ||

<math>\nabla f(x) + \nabla c(x)\lambda - z = 0 | |||

</math> | |||

<math>c(x) = 0</math> | |||

<math>XZe - \mu e = 0</math> | |||

<math>\Rightarrow</math> <math>\begin{bmatrix} W_k & \nabla c(x_k) & -I \\ \nabla c (x_k)^T & 0 & 0 \\ Z_k & 0 & X_k \end{bmatrix}</math><math>\begin{pmatrix} d_k^x \\ d_k^\lambda \\ d_k^z \end{pmatrix} = </math><math>- \begin{pmatrix} \nabla f (x_k) + \nabla c (x_k) \lambda_k - z_k \\ c (x_k) \\ X_k Z_k e - \mu_j e \end{pmatrix}</math> | |||

Here, <math>d_k^z </math> , <math>d_k^x </math> and <math>d_k^\lambda </math> are the search directions for the <math>x, z, \lambda </math> and <math>W_k</math> is the second gradient of the Lagrangian. | |||

Thus, <math>W_k = {\nabla_{xx}}^2 L(x_k, \lambda_k, z_k ) = {\nabla_{xx}}^2 ( f(x_k) + c (x_k)^T \lambda_k - z_k )</math> and <math>Z_k = \begin{bmatrix} z_1 & 0 & 0 \\ 0 & \centerdot\centerdot\centerdot & 0 \\ 0 & 0 & z_n \end{bmatrix}</math> , <math>X_k = \begin{bmatrix} x_1 & 0 & 0 \\ 0 & \centerdot\centerdot\centerdot & 0 \\ 0 & 0 & x_n \end{bmatrix}</math> | |||

The above system can be arranged into symmetric linear system as follows: | |||

<math>\begin{bmatrix} W_k + \Sigma_k & \nabla c(x_k) \\ \nabla c (x_k)^T & 0 \end{bmatrix} \begin{pmatrix} d_k^x \\ d_k^\lambda \end{pmatrix}</math> <math>= - \begin{pmatrix} \nabla f (x_k) + \nabla c (x_k) \lambda_k \\ c (x_k) \end{pmatrix}</math> where <math>\Sigma_k = X_k^{-1}Z_k</math> <math> ........Equation (*) </math> | |||

We can then solve for <math>d_k^z </math> after finding the linear solution to <math>d_k^x </math> and <math>d_k^\lambda </math> with the following form of the explicit solution: <math>d_k^z = \mu_kX_k^{-1} e - z_k - \Sigma_k d_k^x </math> <math> ........Equation (**) </math> | |||

=== Step size '''<math>(\alpha)</math>''' === | |||

After we have found out the search directions we need to find an appropriate value of the step size. The aim here is to find a value of step size to minimise the value of the objective functions and minimise the constraint violations. Following two methods are generally used to determine the value of '''<math>(\alpha)</math>''': | |||

* Decreasing the merit function: Merit function is defined as the sum of the objective function and the absolute values of the constraint violations multiplied by a scalar quantity i.e. <math>merit = f(x) + v \sum\left\vert c(x) \right\vert</math> so that it determines if it's a better step or not. | |||

* Filter methods use a combination of objective function <math>f(x)</math> and constraint violations <math>\left\vert c(x) \right\vert</math> to determine whether to accept the step size '''<math>(\alpha)</math>''' or not. | |||

Once the step size has been determined we can find out the values of <math>x_{k+1} , z_{k+1} , \lambda_{k+1}</math> | |||

<math>x_{k+1} = x_k + \alpha d_k^x</math> | |||

<math>z_{k+1} = z_k + \alpha d_k^z</math> | |||

<math>\lambda_{k+1} = \lambda_k + \alpha d_k^\lambda</math> | |||

=== Convergence Criteria === | |||

Convergence criteria can be defined when KKT conditions are satisfied within a tolerance: | |||

<math>\max\left\vert \nabla f(x) + \nabla c(x) \lambda - z \right\vert \leqslant \epsilon_{tol} </math> | |||

<math>\max\left\vert c(x) \right\vert \leq \epsilon_{tol} </math> | |||

<math>\max\left\vert XZe - \mu e \right\vert \leq \epsilon_{tol} </math> | |||

=== Pseudocode === | |||

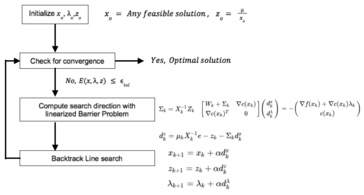

The above described algorithm can be depicted in the form of the following pseudocode as illustrated by <ref> Interior point method for Optimization <https://www.youtube.com/watch?v=zm4mfr-QT1E> </ref> | |||

* Choose a '''feasible''' guess value of <math> x_o </math> and initialise <math> \lambda </math> and <math> z_o </math> by solving the above described equation. | |||

* Once we have initial values then check for convergence, if it is within the 'tolerance limit' then we have reached the optimal solution. If not then, move to next step. | |||

* Compute the search direction using the Equation(*) and Equation(**) as mentioned above. | |||

* Perform back tracking line search by progressively decreasing the value of <math> \alpha </math> using merit function of Filter methods. | |||

* Check for convergence again and repeat steps if the solution is not within tolerance limit. | |||

[[File:Pseudocode.png|left|thumb|364x364px|'''Figure 2''': Pseudocode for Interior point method for NLP]] | |||

=== Barrier method vs Primal-dual method === | |||

In order to understand the concept of '''central path''' and how the barrier method is different from primal-dual method, the following methodology adopted from <ref> L.Vandenberghe."Lecture 13 The central path". <http://www.seas.ucla.edu/~vandenbe/ee236a/lectures/cpath.pdf></ref> can be used: | |||

Consider the simple case of an '''LP''' with the following form: | |||

'''Primal problem:''' | |||

<math> min c^T x | |||

</math> | |||

<math> s.t. Ax \leqslant b</math> | |||

'''Dual problem:''' | |||

<math> max -b^T z | |||

</math> | |||

<math> s.t. A^Tz + c = 0 </math> | |||

<math> z \geqslant 0 </math> | |||

Assuming primal and dual problems are strictly feasible and <math> rank(A) = n</math> | |||

'''Central path''' : Set of points with <math> {x^*(t) | t> 0} </math> with | |||

<math> x^*(t) = argmin(tc^Tx + \phi(x) ) = argmin(tc^Tx - \sum_{i=1}^m log(b_i - a_i^Tx) ) </math> | |||

<math> x^*(t) </math> is unique for all <math> t > 0 </math> | |||

The point <math> x^*(t) </math> is strictly primal feasible and satisfies: | |||

<math> c + \sum_{i=1}^m z_i^*(t)a_i = 0 </math> with <math> z_i^*(t) = \displaystyle \tfrac{1}{t(b_i - a_i^Tx^*(t))} </math> | |||

<math> z_i^*(t) </math> is also strictly dual feasible: <math> A^Tz^*(t) + c = 0 </math> and <math> z^*(t) > 0 </math> | |||

Duality gap between <math> z^*(t) </math> and <math> x^*(t) </math> can be calculated as: | |||

<math> c^Tx + b^Tz = (b-Ax)^Tz = \tfrac{m}{t} </math> | |||

The bound on the sub-optimality of <math> x^*(t) </math> : | |||

<math> c^Tx^*(t) - p^* \leqslant \tfrac{m}{t} </math> , where <math> p^* </math> is the optimal value of the LP. | |||

The following overview on Barrier vs primal-dual method was given by <ref>Ryan Tibshirani."Primal-Dual Interior-Point methods <https://www.stat.cmu.edu/~ryantibs/convexopt/lectures/primal-dual.pdf> </ref> | |||

* Both methods are motivated in terms of perturbed KKT conditions. | |||

* Primal dual interior point methods take one Newton step and then move to next step i.e. there are no separate inner and outer loops. | |||

* Primal dual interior point iterates are not necessarily feasible. | |||

* Primal-dual interior-point methods are often more efficient, as they can exhibit better than linear convergence. | |||

== Numerical Example == | == Numerical Example == | ||

Consider the following non-linear optimisation problem: | Consider the following non-linear optimisation problem: | ||

<math>\min | <math>\min x_2(5+x_1) </math> | ||

<math>s.t. | <math>s.t. x_1x_2 \geq 5</math> | ||

<math>x_1^2 + {x_2}^2 \leq 20</math> | <math>x_1^2 + {x_2}^2 \leq 20</math> | ||

== | This problem needs to be converted into standard form first: | ||

<math>\min x_2(5+x_1)</math> | |||

<math>s.t. x_1x_2 - 5 - x_3 = 0 \Rightarrow c_1(x)</math> | |||

<math>20-x_1^2 - {x_2}^2 - x4 = 0 \Rightarrow c_2(x)</math> | |||

<math> x_3 \geq 0</math> | |||

<math> x_4\geq 0</math> | |||

The initial guess values can be selected as <math>x^o = </math> <math>\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}</math> | |||

We will also choose the initial guess values of <math> \lambda_1 , \lambda_2 , z_1, z_2 </math> as <math> '0' </math>. | |||

<math>c(x^o) = </math> <math>\begin{bmatrix} -5 \\ 20 \end{bmatrix}</math> | |||

<math>\nabla_x c = \begin{bmatrix} x_2 & -2x_1 \\ x_1 & -2x_2 \\ -1 & 0 \\ 0 & -1 \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ -1 & 0 \\ 0 & -1 \end{bmatrix} </math>, The first column represents <math>\nabla_x c_1</math> and second column represents <math>\nabla_x c_2</math> . | |||

Now we will calculate the values of <math>\nabla_{xx} c_1 </math> and <math>\nabla_{xx} c_2 </math> at the initial guess values. | |||

<math>\nabla_{xx} c_1 = \begin{bmatrix} 0 & 1& 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} </math> , <math>\nabla_{xx} c_2 = \begin{bmatrix} -2 & 0& 0 & 0 \\ 0 & -2 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} </math> | |||

Now, <math>\nabla f(x) = \begin{bmatrix} x_2 \\ 5 + x_1 \\ 0 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 5 \\ 0 \\ 0 \end{bmatrix} </math>. Similarly, <math>\nabla_{xx} f = \begin{bmatrix} 0 & 1& 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} </math> | |||

In the above equation Matrix <math>'Z' </math> and <math>'X'</math> are basically diagonal matrices with the diagonal elements as follows: | |||

<math>Z = \begin{bmatrix} z_1 & 0& 0 & 0 \\ 0 & z_2 & 0 & 0 \\ 0 & 0 & z_3 & 0\\ 0 & 0 & 0 & z_4 \end{bmatrix} </math> and <math>X = \begin{bmatrix} x_1 & 0& 0 & 0 \\ 0 & x_2 & 0 & 0 \\ 0 & 0 & x_3 & 0\\ 0 & 0 & 0 & x_4 \end{bmatrix} </math> | |||

As defined earlier, the matrix <math>'e' </math> is simply a column vector of ones i.e. <math>e = \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} </math> | |||

Now since <math>W_k = {\nabla_{xx}}^2 L(x_k, \lambda_k, z_k ) = {\nabla_{xx}}^2 ( f(x_k) + c (x_k)^T \lambda_k - z_k )</math> and we have chosen initial guess values of <math>\lambda_1, \lambda_2 , z_1 , z_2 as '0'</math> , thus <math>W_k = \nabla_{xx} f </math>. | |||

Now we have everything to substitute in the following equation and calculate the search directions and iterate till we fulfil the convergence criteria: | |||

<math>\begin{bmatrix} W_k & \nabla c(x_k) & -I \\ \nabla c (x_k)^T & 0 & 0 \\ Z_k & 0 & X_k \end{bmatrix}</math><math>\begin{pmatrix} d_k^x \\ d_k^\lambda \\ d_k^z \end{pmatrix} = </math><math>- \begin{pmatrix} \nabla f (x_k) + \nabla c (x_k) \lambda_k - z_k \\ c (x_k) \\ X_k Z_k e - \mu_j e \end{pmatrix}</math> | |||

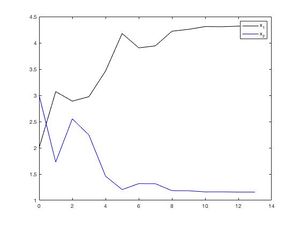

We can now implement BPOPT solver using MATLAB to get the following results as illustrated by <ref>Design Optimisation-Interior point methods, <https://apmonitor.com/me575/index.php/Main/InteriorPointMethod> </ref>: | |||

[[File:X1andx2.jpg|left|thumb|'''Figure 3''': Variation of x1 and x2 as iterations progress [11]]] | |||

From figure 3 we see that the solver has converged to following optimal values of x1 and x2 in '13' iterations. | |||

<math> x1 = 4.3198 </math> and <math> x2 = 1.1575 </math> | |||

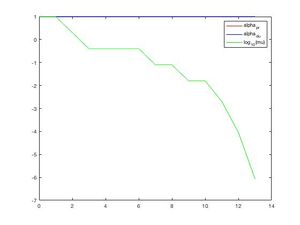

[[File:Muvariation.jpg|left|thumb|'''Figure 4''': Variation of 'μ' as iterations progress [11]]] | |||

In the figure 4 we can clearly see how the value of <math>' \mu ' </math> is decreasing as we progress towards the solution (increasing iterations). | |||

== Applications == | |||

IP methods have proven computationally to be viable alternative for the solution of several power engineering optimisation models. A variety of these are applied to a number of power systems models. 'Optimal power flow (OPF)' seeks the operation point of a power system that maximizes a given objective function and satisfies operational and physical constraints. Given real-time operating conditions and the large scale of the power system, it is demanding to develop algorithms that allow for OPF to be decomposed and efficiently solved on parallel computing systems <ref>R. A. Jabr, A. H. Cooninck, B. J. Cory: ”A Primal-Dual Interior Point Method for Optimal Power Flow Dispatching”, IEEE Transactions on Power Systems, Vol. 17, No. 3, pp. 654-662, 2002.</ref>. As compared to gradient based algorithms primal-dual based interior point methods have faster convergence rates. | |||

A hydro-thermal coordination program deals with the problem of finding the scheduling and the production of every hydro and thermal plant of a system so that the customer demand is supplied at minimum cost with a certain level of security and all constraints related to the thermal and hydro subsystems are satisfied. A medium-term hydro-thermal coordination (MTHTC) problem results in a very large Non-Linear Mixed-Integer Programming problem <ref>J. Medina, V. H. Quintana, A. J. Conejo, F. P. Thoden: “A Comparison of Interior-Point Codes for Medium-Term Hydro-Thermal Coordination”, IEEE PES Summer Meeting, Paper 0-7803-1/97, 1997.</ref> IP optimisation codes are used to find the solution of these MTHTC problems. | |||

IP methods are also used in Voltage collapse and reliability evaluation. Power flow unsolvability occurs when for a given set of active and reactive power loads the power flow equations have no real solution. This kind of problem may happen for instance when a heavily stressed system is subject to a severe contingency situation. The set of control actions in the IP algorithm includes rescheduling of active power of generators, adjustments on terminal voltage of generators, tap changes on LTC transformers, and as a last resort, minimum load shedding <ref>S. Grenville, J. C. O. Mello, A. C. G. Mello: “Application of interior point methods to power flow unsolvability”, IEEE Transactions on Power Systems, Vol. 11, pp. 1096-1103, 1996.</ref>. | |||

Fuel Planning and Multi-reservoir management are some other areas where interior point methods have proven to be useful. A long-term fuel planning problem aims to minimize the sum of fuel purchase and yearly storage costs, subject to meeting the generation requirements, the constraints associatedwith fuel supply contracts, and the constraints associated with storage of fuel.<ref>V. R. Sherkat, Y. Ikura: “Experience with Interior Point Optimisation Software for a Fuel Planning Application” IEEE Transactions on Power Systems,Vol. 9, No. 2, 1994.</ref> | |||

== Conclusion == | |||

Interior Point methods remain an active and fruitful area of research, although the frenetic pace that has characterized the area slowed down in recent years. Linear programming codes have become mainstream and continue to undergo development although they face stiff competition from the simplex method. Applications to quadratic | |||

programming show considerable promise, because of the superior ability of interior point approach to exploit problem structure efficiently. The influence on nonlinear programming theory and practice is yet to be determined even though substantial research has already been done on this topic <ref>Stephen J. Wright. "Recent developments in Interior point methods."</ref>. An extensive evaluation of line search options was conducted by <ref>A. Wächter and L. T. Biegler, On the Implementation of an Interior-Point Filter Line-Search Algorithm for Large-Scale Nonlinear Programming, Mathematical Programming 106(1), pp. 25-57, 2006.</ref> and it was concluded that filter approach was the most robust approach. Many researchers are hopeful for the use of interior point approach in decomposition methods, though no rigorous comparative studies with alternative approaches have been performed.<ref>Stephen J. Wright. "Recent developments in Interior point methods."</ref> Many authors have also worked on a special subclass of non smooth optimization problems and a new interior point method has been proposed for this class. These methods extend a standard interior-point method for nonlinear and nonconvex problems by additional line-search and step length computation features and a problem-tailored stationarity test. A special type of generalized gradients is developed that is used at points of the nonsmooth problems that fail to be differentiable.<ref>Martin Scmidt. An Interior Point Method for Nonlinear Optimization problems with locatable and separable non smoothness.</ref> | |||

== References == | |||

Latest revision as of 08:49, 7 December 2021

Author: Apoorv Lal (CHEME 6800, Fall 2021)

Introduction

Interior point (IP) methods are used to solve all kind of problems from linear to non-linear, from convex to non convex. The first known IP method is Frisch's (1955) logarithmic barrier method that was later extensively studied by Fiacco and McCormick [1]. However, these methods mainly emerged in the late 1970's and 1980s. They were developed as algorithms to solve linear programming(LP) problems with a better worst case complexity than the simplex method [2]. In 1984, Karmarkar used IP methods to develop 'A new polynomial-Time method for Linear Programming' [3]. The main advantage of this method was better practical performance than the earlier algorithms like the ellipsoid method by Khachiyan [4]. After Karmarkar's publication more intensive research in this field led to development of a new category of IP methods known as 'primal-dual' methods. Currently, most powerful type of primal-dual codes are Ipopt, KNITRO and LOQO [5]. In recent years the majority of research in the field of IPs is for nonlinear optimisation problems, especially for second order (referred as SOCO) and semidefinite optimisation ( referred as SDO). SDO has wide range of applications in combinatorial optimization, control, structural engineering and electrical engineering [6].

Theory & Methodology

Nonlinear Constrained Optimisation

General form for any nonlinear constrained optimization problem can be depicted as shown in Equation set 1:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x \geqslant 0 }

- The objective function and constraints can be any linear, quadratic or any general nonlinear functions.

- Typically, applied for convex optimization problems

- Convert maximisation problems to minimisation problems by taking negative of the objective function

- Convert any general inequalities to simple inequalities using slack variables.

Slack variables

Consider a general equation of the form as shown in Equation set 2:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle min f(x) }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. g(x) \geqslant b }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h(x) = 0}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x \geqslant 0 }

Now we need to convert this form to the form as shown in Equation set 1. For this the inequality 'Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g(x) \geqslant b} ' can be written Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g(x)-b\geq0} which can be further written as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g(x)-b-s=0} , along with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s\geq0}

Thus the set of equation 'Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g(x)-b-s=0} ' and 'Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h(x)=0} ' become 'Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c(x)=0} ' as shown in Equation set 1.

Barrier function

Once the given problem is converted to the standard form (as shown in Equation set 1), we will now try to remove the inequality constraint Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x \geqslant 0 } using the barrier term. We will introduce natural log barrier term for inequality constraints as shown in Equation set 3:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min f(x) - \mu\textstyle \sum_{i} \displaystyle ln(x_i) }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. c(x) = 0 }

The point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \displaystyle ln(x_i) } is not defined for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x _i \leqslant 0 } . Our goal is to solve this barrier problem for a given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu\textstyle' } , then decrease this Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu\textstyle' } and resolve the problem. If Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu\textstyle' } is decreased at the right rate and the approximate solutions are good enough then this method will converge to an optimal solution of the non linear optimization problem. We want to only search in the interior feasible space.

Example for barrier function:

Following example shows how barrier term can be incorporated into the objective function:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle min (x-4)^2 - \mu\textstyle \displaystyle ln(x) }

For different values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu\textstyle' } , the value of objective function changes and thus as the value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '\mu\textstyle' } is decreased from '10' to '1' (as shown in Figure 1) , we approach towards the optimal solution i.e. Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x = 4 } .

After we know the basics described above, the following methodology adopted from [7] can be used to solve the non-linear optimization problem.

KKT Condition for Barrier Problem

KKT conditions for the barrier problem of the form:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min f(x) - \mu\textstyle \sum_{i=1}^n \displaystyle ln(x_i)} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Rightarrow } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x) + \nabla c(x)\lambda- \mu\textstyle \sum_{i=1}^n \displaystyle \tfrac{1}{x_i}}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. c(x) = 0} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c(x) = 0}

We can define now define Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z_i = \tfrac{\mu}{x_i} } and solve the modified version of the KKT conditions:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x) + \nabla c(x)\lambda - z = 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c(x) = 0}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle XZe - \mu e = 0} .

The matrix Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'e' } is simply a column vector of ones i.e. Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle e = \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} } to satisfy the rules of matrix operations.

KKT solution with Newton-Raphson method

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x) + \nabla c(x)\lambda - z = 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c(x) = 0}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle XZe - \mu e = 0}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Rightarrow} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} W_k & \nabla c(x_k) & -I \\ \nabla c (x_k)^T & 0 & 0 \\ Z_k & 0 & X_k \end{bmatrix}} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{pmatrix} d_k^x \\ d_k^\lambda \\ d_k^z \end{pmatrix} = } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle - \begin{pmatrix} \nabla f (x_k) + \nabla c (x_k) \lambda_k - z_k \\ c (x_k) \\ X_k Z_k e - \mu_j e \end{pmatrix}}

Here, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^z }

, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^x }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^\lambda }

are the search directions for the Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x, z, \lambda }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle W_k}

is the second gradient of the Lagrangian.

Thus, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle W_k = {\nabla_{xx}}^2 L(x_k, \lambda_k, z_k ) = {\nabla_{xx}}^2 ( f(x_k) + c (x_k)^T \lambda_k - z_k )} and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle Z_k = \begin{bmatrix} z_1 & 0 & 0 \\ 0 & \centerdot\centerdot\centerdot & 0 \\ 0 & 0 & z_n \end{bmatrix}} ,

The above system can be arranged into symmetric linear system as follows:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} W_k + \Sigma_k & \nabla c(x_k) \\ \nabla c (x_k)^T & 0 \end{bmatrix} \begin{pmatrix} d_k^x \\ d_k^\lambda \end{pmatrix}} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle = - \begin{pmatrix} \nabla f (x_k) + \nabla c (x_k) \lambda_k \\ c (x_k) \end{pmatrix}} where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \Sigma_k = X_k^{-1}Z_k} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ........Equation (*) }

We can then solve for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^z } after finding the linear solution to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^x } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^\lambda } with the following form of the explicit solution: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle d_k^z = \mu_kX_k^{-1} e - z_k - \Sigma_k d_k^x } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ........Equation (**) }

Step size Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (\alpha)}

After we have found out the search directions we need to find an appropriate value of the step size. The aim here is to find a value of step size to minimise the value of the objective functions and minimise the constraint violations. Following two methods are generally used to determine the value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (\alpha)} :

- Decreasing the merit function: Merit function is defined as the sum of the objective function and the absolute values of the constraint violations multiplied by a scalar quantity i.e. Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle merit = f(x) + v \sum\left\vert c(x) \right\vert} so that it determines if it's a better step or not.

- Filter methods use a combination of objective function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x)} and constraint violations Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \left\vert c(x) \right\vert} to determine whether to accept the step size Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle (\alpha)} or not.

Once the step size has been determined we can find out the values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{k+1} , z_{k+1} , \lambda_{k+1}}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{k+1} = x_k + \alpha d_k^x}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z_{k+1} = z_k + \alpha d_k^z}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda_{k+1} = \lambda_k + \alpha d_k^\lambda}

Convergence Criteria

Convergence criteria can be defined when KKT conditions are satisfied within a tolerance:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \max\left\vert \nabla f(x) + \nabla c(x) \lambda - z \right\vert \leqslant \epsilon_{tol} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \max\left\vert c(x) \right\vert \leq \epsilon_{tol} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \max\left\vert XZe - \mu e \right\vert \leq \epsilon_{tol} }

Pseudocode

The above described algorithm can be depicted in the form of the following pseudocode as illustrated by [8]

- Choose a feasible guess value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_o } and initialise Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z_o } by solving the above described equation.

- Once we have initial values then check for convergence, if it is within the 'tolerance limit' then we have reached the optimal solution. If not then, move to next step.

- Compute the search direction using the Equation(*) and Equation(**) as mentioned above.

- Perform back tracking line search by progressively decreasing the value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha } using merit function of Filter methods.

- Check for convergence again and repeat steps if the solution is not within tolerance limit.

Barrier method vs Primal-dual method

In order to understand the concept of central path and how the barrier method is different from primal-dual method, the following methodology adopted from [9] can be used:

Consider the simple case of an LP with the following form:

Primal problem:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle min c^T x }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. Ax \leqslant b}

Dual problem:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle max -b^T z }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. A^Tz + c = 0 }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z \geqslant 0 }

Assuming primal and dual problems are strictly feasible and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle rank(A) = n}

Central path : Set of points with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle {x^*(t) | t> 0} }

with

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^*(t) = argmin(tc^Tx + \phi(x) ) = argmin(tc^Tx - \sum_{i=1}^m log(b_i - a_i^Tx) ) }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^*(t) } is unique for all Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t > 0 }

The point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^*(t) }

is strictly primal feasible and satisfies:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c + \sum_{i=1}^m z_i^*(t)a_i = 0 } with Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z_i^*(t) = \displaystyle \tfrac{1}{t(b_i - a_i^Tx^*(t))} }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z_i^*(t) }

is also strictly dual feasible: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle A^Tz^*(t) + c = 0 }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z^*(t) > 0 }

Duality gap between Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle z^*(t) }

and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^*(t) }

can be calculated as:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c^Tx + b^Tz = (b-Ax)^Tz = \tfrac{m}{t} }

The bound on the sub-optimality of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^*(t) }

:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c^Tx^*(t) - p^* \leqslant \tfrac{m}{t} } , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p^* } is the optimal value of the LP.

The following overview on Barrier vs primal-dual method was given by [10]

- Both methods are motivated in terms of perturbed KKT conditions.

- Primal dual interior point methods take one Newton step and then move to next step i.e. there are no separate inner and outer loops.

- Primal dual interior point iterates are not necessarily feasible.

- Primal-dual interior-point methods are often more efficient, as they can exhibit better than linear convergence.

Numerical Example

Consider the following non-linear optimisation problem:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min x_2(5+x_1) }

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. x_1x_2 \geq 5}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_1^2 + {x_2}^2 \leq 20}

This problem needs to be converted into standard form first:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min x_2(5+x_1)}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. x_1x_2 - 5 - x_3 = 0 \Rightarrow c_1(x)}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 20-x_1^2 - {x_2}^2 - x4 = 0 \Rightarrow c_2(x)}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_3 \geq 0}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_4\geq 0}

The initial guess values can be selected as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^o = } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}}

We will also choose the initial guess values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda_1 , \lambda_2 , z_1, z_2 } as Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle '0' } .

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c(x^o) = } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} -5 \\ 20 \end{bmatrix}}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_x c = \begin{bmatrix} x_2 & -2x_1 \\ x_1 & -2x_2 \\ -1 & 0 \\ 0 & -1 \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ -1 & 0 \\ 0 & -1 \end{bmatrix} } , The first column represents Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_x c_1} and second column represents Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_x c_2} .

Now we will calculate the values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_{xx} c_1 } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_{xx} c_2 } at the initial guess values.

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_{xx} c_1 = \begin{bmatrix} 0 & 1& 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} } , Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_{xx} c_2 = \begin{bmatrix} -2 & 0& 0 & 0 \\ 0 & -2 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} }

Now, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x) = \begin{bmatrix} x_2 \\ 5 + x_1 \\ 0 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 5 \\ 0 \\ 0 \end{bmatrix} }

. Similarly, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla_{xx} f = \begin{bmatrix} 0 & 1& 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} }

In the above equation Matrix Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'Z' } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'X'} are basically diagonal matrices with the diagonal elements as follows:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle Z = \begin{bmatrix} z_1 & 0& 0 & 0 \\ 0 & z_2 & 0 & 0 \\ 0 & 0 & z_3 & 0\\ 0 & 0 & 0 & z_4 \end{bmatrix} } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle X = \begin{bmatrix} x_1 & 0& 0 & 0 \\ 0 & x_2 & 0 & 0 \\ 0 & 0 & x_3 & 0\\ 0 & 0 & 0 & x_4 \end{bmatrix} }

As defined earlier, the matrix Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 'e' } is simply a column vector of ones i.e. Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle e = \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} }

Now since Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle W_k = {\nabla_{xx}}^2 L(x_k, \lambda_k, z_k ) = {\nabla_{xx}}^2 ( f(x_k) + c (x_k)^T \lambda_k - z_k )} and we have chosen initial guess values of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lambda_1, \lambda_2 , z_1 , z_2 as '0'} , thus Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle W_k = \nabla_{xx} f } .

Now we have everything to substitute in the following equation and calculate the search directions and iterate till we fulfil the convergence criteria:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{bmatrix} W_k & \nabla c(x_k) & -I \\ \nabla c (x_k)^T & 0 & 0 \\ Z_k & 0 & X_k \end{bmatrix}} Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \begin{pmatrix} d_k^x \\ d_k^\lambda \\ d_k^z \end{pmatrix} = } Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle - \begin{pmatrix} \nabla f (x_k) + \nabla c (x_k) \lambda_k - z_k \\ c (x_k) \\ X_k Z_k e - \mu_j e \end{pmatrix}}

We can now implement BPOPT solver using MATLAB to get the following results as illustrated by [11]:

From figure 3 we see that the solver has converged to following optimal values of x1 and x2 in '13' iterations. Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x1 = 4.3198 } and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x2 = 1.1575 }

In the figure 4 we can clearly see how the value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ' \mu ' }

is decreasing as we progress towards the solution (increasing iterations).

Applications

IP methods have proven computationally to be viable alternative for the solution of several power engineering optimisation models. A variety of these are applied to a number of power systems models. 'Optimal power flow (OPF)' seeks the operation point of a power system that maximizes a given objective function and satisfies operational and physical constraints. Given real-time operating conditions and the large scale of the power system, it is demanding to develop algorithms that allow for OPF to be decomposed and efficiently solved on parallel computing systems [12]. As compared to gradient based algorithms primal-dual based interior point methods have faster convergence rates.

A hydro-thermal coordination program deals with the problem of finding the scheduling and the production of every hydro and thermal plant of a system so that the customer demand is supplied at minimum cost with a certain level of security and all constraints related to the thermal and hydro subsystems are satisfied. A medium-term hydro-thermal coordination (MTHTC) problem results in a very large Non-Linear Mixed-Integer Programming problem [13] IP optimisation codes are used to find the solution of these MTHTC problems.

IP methods are also used in Voltage collapse and reliability evaluation. Power flow unsolvability occurs when for a given set of active and reactive power loads the power flow equations have no real solution. This kind of problem may happen for instance when a heavily stressed system is subject to a severe contingency situation. The set of control actions in the IP algorithm includes rescheduling of active power of generators, adjustments on terminal voltage of generators, tap changes on LTC transformers, and as a last resort, minimum load shedding [14].

Fuel Planning and Multi-reservoir management are some other areas where interior point methods have proven to be useful. A long-term fuel planning problem aims to minimize the sum of fuel purchase and yearly storage costs, subject to meeting the generation requirements, the constraints associatedwith fuel supply contracts, and the constraints associated with storage of fuel.[15]

Conclusion

Interior Point methods remain an active and fruitful area of research, although the frenetic pace that has characterized the area slowed down in recent years. Linear programming codes have become mainstream and continue to undergo development although they face stiff competition from the simplex method. Applications to quadratic programming show considerable promise, because of the superior ability of interior point approach to exploit problem structure efficiently. The influence on nonlinear programming theory and practice is yet to be determined even though substantial research has already been done on this topic [16]. An extensive evaluation of line search options was conducted by [17] and it was concluded that filter approach was the most robust approach. Many researchers are hopeful for the use of interior point approach in decomposition methods, though no rigorous comparative studies with alternative approaches have been performed.[18] Many authors have also worked on a special subclass of non smooth optimization problems and a new interior point method has been proposed for this class. These methods extend a standard interior-point method for nonlinear and nonconvex problems by additional line-search and step length computation features and a problem-tailored stationarity test. A special type of generalized gradients is developed that is used at points of the nonsmooth problems that fail to be differentiable.[19]

References

- ↑ Mevludin Glavic, Louis Wehenkel. Interior Point Methods: A Survey, Short Survey of Applications to Power Systems, and Research Opportunities. (2004)

- ↑ Martin Scmidt. An Interior Point Method for Nonlinear Optimization problems with locatable and separable non smoothness.

- ↑ Narendra Karmarkar. “A New Polynomial-Time Algorithm for Linear Programming.” In: Combinatorica 4.4 (1984), pp. 373–395. issn: 0209-9683. doi:10.1007/BF02579150.

- ↑ Leonid Genrikhovich Khachiyan. “A polynomial algorithm in linear program- ming.” In: Soviet Mathematics Doklady 20 (1979), pp. 191–194.

- ↑ Martin Scmidt. An Interior Point Method for Nonlinear Optimization problems with locatable and separable non smoothness.

- ↑ Bomze, Immanuel M., et al.Nonlinear Optimization, Springer-Verlag Berlin Heidelberg, 2010.

- ↑ A. Wächter and L. T. Biegler, On the Implementation of an Interior-Point Filter Line-Search Algorithm for Large-Scale Nonlinear Programming, Mathematical Programming 106(1), pp. 25-57, 2006.

- ↑ Interior point method for Optimization <https://www.youtube.com/watch?v=zm4mfr-QT1E>

- ↑ L.Vandenberghe."Lecture 13 The central path". <http://www.seas.ucla.edu/~vandenbe/ee236a/lectures/cpath.pdf>

- ↑ Ryan Tibshirani."Primal-Dual Interior-Point methods <https://www.stat.cmu.edu/~ryantibs/convexopt/lectures/primal-dual.pdf>

- ↑ Design Optimisation-Interior point methods, <https://apmonitor.com/me575/index.php/Main/InteriorPointMethod>

- ↑ R. A. Jabr, A. H. Cooninck, B. J. Cory: ”A Primal-Dual Interior Point Method for Optimal Power Flow Dispatching”, IEEE Transactions on Power Systems, Vol. 17, No. 3, pp. 654-662, 2002.

- ↑ J. Medina, V. H. Quintana, A. J. Conejo, F. P. Thoden: “A Comparison of Interior-Point Codes for Medium-Term Hydro-Thermal Coordination”, IEEE PES Summer Meeting, Paper 0-7803-1/97, 1997.

- ↑ S. Grenville, J. C. O. Mello, A. C. G. Mello: “Application of interior point methods to power flow unsolvability”, IEEE Transactions on Power Systems, Vol. 11, pp. 1096-1103, 1996.

- ↑ V. R. Sherkat, Y. Ikura: “Experience with Interior Point Optimisation Software for a Fuel Planning Application” IEEE Transactions on Power Systems,Vol. 9, No. 2, 1994.

- ↑ Stephen J. Wright. "Recent developments in Interior point methods."

- ↑ A. Wächter and L. T. Biegler, On the Implementation of an Interior-Point Filter Line-Search Algorithm for Large-Scale Nonlinear Programming, Mathematical Programming 106(1), pp. 25-57, 2006.

- ↑ Stephen J. Wright. "Recent developments in Interior point methods."

- ↑ Martin Scmidt. An Interior Point Method for Nonlinear Optimization problems with locatable and separable non smoothness.