Adam

Revision as of 16:14, 29 November 2021

Author: Akash Ajagekar (SYSEN 6800 Fall 2021)

Introduction

The Adam optimizer is an extension of stochastic gradient descent (SGD) with broad potential for deep learning applications in computer vision and natural language processing. It is an optimization algorithm that can be used as an alternative to SGD. The name is derived from "adaptive moment estimation". Adam was proposed as an efficient stochastic optimization method that requires only first-order gradients and has a small memory requirement.[1] Before Adam, several adaptive optimization techniques, such as AdaGrad and RMSP, were introduced. They often perform better than SGD, but in some cases they have disadvantages, for example generalization performance that is worse than that of SGD. Adam was introduced to address these shortcomings, including generalization performance.

Theory

Adam combines two gradient descent methods, which are explained below.

Momentum:

Momentum is an extension of the gradient descent optimization algorithm. It accelerates gradient descent by taking into account an "exponentially weighted average" of the gradients.

The momentum algorithm works in two parts: first it calculates the change in position, and then it updates the old position with that change. The change in position is given by

$$\text{update} = \alpha \, m_t$$

The new position (weights) at time $t+1$ is given by

$$w_{t+1} = w_t - \text{update}$$

Here $\alpha$ (the step size, also called the learning rate) is a hyperparameter that controls how far each step moves in the search space, and $m_t$ is the aggregate of gradients at time $t$.

where,

$$m_t = \beta\, m_{t-1} + (1-\beta)\,\frac{\partial L}{\partial w_t}$$

In the above equations, $m_t$ and $m_{t-1}$ are the aggregates of gradients at times $t$ and $t-1$, and $\beta$ is the averaging parameter.

According to [2], momentum has the effect of dampening the change in the gradient and, in turn, the step size with each new point in the search space.
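The two-part momentum update can be sketched for a single scalar weight in Python. The helper name `momentum_step`, the default $\beta = 0.9$, and the toy loss $L(w) = (w-3)^2$ are assumptions chosen for illustration, not values from the text.

```python
def momentum_step(w, m_prev, grad, alpha=0.01, beta=0.9):
    """One momentum step for a single scalar weight (hypothetical helper).

    m_t     = beta * m_{t-1} + (1 - beta) * grad
    update  = alpha * m_t
    w_{t+1} = w_t - update
    """
    m = beta * m_prev + (1 - beta) * grad
    update = alpha * m
    return w - update, m


# Toy loss L(w) = (w - 3)^2 (an assumption for illustration); its gradient is 2 * (w - 3).
w, m = 0.0, 0.0
for _ in range(2000):
    w, m = momentum_step(w, m, 2 * (w - 3), alpha=0.1, beta=0.9)
print(w)  # converges towards 3.0
```

Because $m_t$ averages recent gradients, a single noisy gradient changes the step only by a factor of $(1-\beta)$.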

Root Mean Square Propagation (RMSP):

RMSP is an adaptive optimization algorithm that improves on AdaGrad. AdaGrad uses the cumulative sum of squared gradients, whereas RMSP uses an "exponential average" of them.

It is given by

$$w_{t+1} = w_t - \frac{\alpha_t}{\sqrt{v_t + e}}\,\frac{\partial L}{\partial w_t}$$

where,

$$v_t = \beta\, v_{t-1} + (1-\beta)\left(\frac{\partial L}{\partial w_t}\right)^2$$

Here,

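Aggregate of gradients at $t$ = $m_t$

Aggregate of gradients at $t-1$ = $m_{t-1}$

Exponential average of squared gradients at $t$ = $v_t$

Weights at time $t$ = $w_t$

Weights at time $t+1$ = $w_{t+1}$

$\alpha_t$ = learning rate (hyperparameter)

$\partial L / \partial w_t$ = derivative of the loss function with respect to the weights at $t$

$\beta$ = averaging parameter

$e$ = small constant that prevents division by zero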
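The RMSP update can be sketched in Python for a single scalar weight. As with the momentum sketch, the helper name `rmsp_step`, the default $\beta = 0.9$, and the toy loss $L(w) = (w-3)^2$ are illustrative assumptions.

```python
import math


def rmsp_step(w, v_prev, grad, alpha=0.01, beta=0.9, e=1e-8):
    """One RMSP step for a single scalar weight (hypothetical helper).

    v_t     = beta * v_{t-1} + (1 - beta) * grad**2
    w_{t+1} = w_t - alpha / sqrt(v_t + e) * grad
    """
    v = beta * v_prev + (1 - beta) * grad ** 2
    return w - alpha / math.sqrt(v + e) * grad, v


# Toy loss L(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, v = 0.0, 0.0
for _ in range(5000):
    w, v = rmsp_step(w, v, 2 * (w - 3), alpha=0.01)
print(w)  # ends up close to 3.0
```

Dividing by $\sqrt{v_t}$ normalizes the gradient's magnitude, so with a constant learning rate the weight settles in a small band around the minimum rather than converging exactly.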

However, these two optimizers have some problems, such as weaker generalization performance in some cases. According to [3], Adam takes over the attributes of the two optimizers above and builds on them to give a better-optimized gradient descent.

Algorithm

Adam takes the moving averages used in the two optimizers above:

$$m_t = \beta_1 m_{t-1} + (1-\beta_1)\,\frac{\partial L}{\partial w_t}$$

$$v_t = \beta_2 v_{t-1} + (1-\beta_2)\left(\frac{\partial L}{\partial w_t}\right)^2$$

Both $m_t$ and $v_t$ are initialized to 0, so they are biased towards 0, especially during the first iterations and when $\beta_1$ and $\beta_2$ are close to 1. Adam corrects this bias by computing bias-corrected estimates:

$$\hat{m}_t = \frac{m_t}{1-\beta_1^t}$$

$$\hat{v}_t = \frac{v_t}{1-\beta_2^t}$$

Because the estimates are corrected at every iteration, the steps remain controlled and unbiased. Substituting the bias-corrected estimates into the update gives

$$w_{t+1} = w_t - \frac{\alpha}{\sqrt{\hat{v}_t} + e}\,\hat{m}_t$$
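The complete Adam update can be sketched for a single scalar weight in Python. The helper name `adam_step` and the toy loss are assumptions for illustration; the default hyperparameters are the values suggested in the Adam paper [1] ($\alpha = 0.001$, $\beta_1 = 0.9$, $\beta_2 = 0.999$, $e = 10^{-8}$).

```python
import math


def adam_step(w, m, v, grad, t, alpha=0.001, beta1=0.9, beta2=0.999, e=1e-8):
    """One Adam step for a single scalar weight at time step t (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # first moment (momentum part)
    v = beta2 * v + (1 - beta2) * grad ** 2     # second moment (RMSP part)
    m_hat = m / (1 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)                # bias-corrected second moment
    w = w - alpha * m_hat / (math.sqrt(v_hat) + e)
    return w, m, v


# Toy loss L(w) = (w - 3)^2; note that the step counter t starts at 1, not 0.
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 5001):
    w, m, v = adam_step(w, m, v, 2 * (w - 3), t, alpha=0.01)
print(w)  # close to 3.0
```

Starting `t` at 0 would divide by $1 - \beta^0 = 0$ in the bias correction, which is why the counter begins at 1.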

Performance

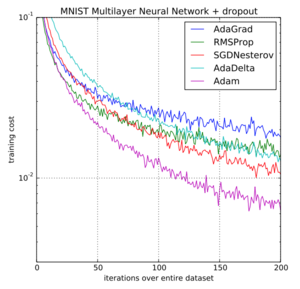

Adam often achieves better results than the other optimizers and, in some comparisons, outperforms them by a large margin. The figure above shows one example of a performance comparison between optimizers.

Numerical Example

Consider an example of the Adam optimizer. The sample dataset below lists the hours spent studying and the exam grade for a group of students. The task is to predict a student's exam grade from the hours spent studying.

| Hours studying | Exam grade |
|---|---|
| 60 | 76 |
| 76 | 72.3 |
| 85 | 88 |
| 76 | 60 |
| 50 | 79 |
| 55 | 47 |
| 100 | 67 |
| 105 | 66 |
| 45 | 65 |
| 78 | 61 |
| 57 | 68 |
| 91 | 56 |
| 69 | 75 |
| 74 | 57 |
| 112 | 76 |

The hypothesis function is,

$$f_\theta(x) = \theta_0 + \theta_1 x$$

The cost function is,

$$J(\theta) = \frac{1}{2}\sum_{i=1}^{n} \big(f_\theta(x_i) - y_i \big)^2$$

The optimization problem is to find the values of $\theta$ that minimize the objective function

$$\mathrm{argmin}_{\theta} \quad \frac{1}{n}\sum_{i=1}^{n} \big(f_\theta(x_i) - y_i \big)^2$$

The partial derivatives of the cost function for a single sample $(x, y)$ with respect to the weights $\theta_0$ and $\theta_1$ are

$$\frac{\partial J(\theta)}{\partial \theta_0} = f_\theta(x) - y$$

$$\frac{\partial J(\theta)}{\partial \theta_1} = \big(f_\theta(x) - y\big)\,x$$

The initial values of $\theta$ are set to $[10, 1]$, the learning rate $\alpha$ to 0.01, and the parameters $\beta_1$, $\beta_2$, and $e$ to 0.94, 0.9878, and $10^{-8}$, respectively. For the first data sample ($x = 60$, $y = 76$), the gradients are

$$\frac{\partial J(\theta)}{\partial \theta_0} = 10 + 1\cdot 60 - 76 = -6$$

$$\frac{\partial J(\theta)}{\partial \theta_1} = (10 + 1\cdot 60 - 76)\cdot 60 = -360$$
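A minimal Python sketch of the hypothesis, the one-sample cost, and its gradient, using the data from the table. The function names `f`, `sample_cost`, and `sample_grad` are hypothetical, and the central finite-difference check is an added sanity test rather than part of the original example.

```python
# Dataset from the table: x = hours studying, y = exam grade.
X = [60, 76, 85, 76, 50, 55, 100, 105, 45, 78, 57, 91, 69, 74, 112]
Y = [76, 72.3, 88, 60, 79, 47, 67, 66, 65, 61, 68, 56, 75, 57, 76]


def f(theta, x):
    """Hypothesis f_theta(x) = theta_0 + theta_1 * x."""
    return theta[0] + theta[1] * x


def sample_cost(theta, x, y):
    """Cost contribution of one sample: 1/2 * (f_theta(x) - y)^2."""
    return 0.5 * (f(theta, x) - y) ** 2


def sample_grad(theta, x, y):
    """Partial derivatives of the one-sample cost w.r.t. theta_0 and theta_1."""
    r = f(theta, x) - y  # residual
    return [r, r * x]


theta = [10.0, 1.0]
print(sample_grad(theta, X[0], Y[0]))  # [-6.0, -360.0]

# Central finite differences should agree with the analytic gradient.
h = 1e-4
numeric = []
for j in range(2):
    up, down = list(theta), list(theta)
    up[j] += h
    down[j] -= h
    numeric.append((sample_cost(up, X[0], Y[0]) - sample_cost(down, X[0], Y[0])) / (2 * h))
print(numeric)  # close to [-6.0, -360.0]
```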

Since $m_0$ and $v_0$ are zero, $m_1$ and $v_1$ are calculated as

$$m_1 = 0.94 \cdot 0 + (1-0.94) \cdot \begin{bmatrix} -6\\ -360 \end{bmatrix} = \begin{bmatrix} -0.36\\ -21.6\end{bmatrix}$$

$$v_1 = 0.9878 \cdot 0 + (1-0.9878) \cdot \begin{bmatrix} (-6)^2\\ (-360)^2 \end{bmatrix} = \begin{bmatrix} 0.4392\\ 1581.12\end{bmatrix}$$

The new bias-corrected values of $m_1$ and $v_1$ are

$$\hat{m}_1 = \frac{1}{1-0.94^1}\begin{bmatrix} -0.36\\ -21.6\end{bmatrix} = \begin{bmatrix} -6\\ -360\end{bmatrix}$$

$$\hat{v}_1 = \frac{1}{1-0.9878^1}\begin{bmatrix} 0.4392\\ 1581.12\end{bmatrix} = \begin{bmatrix} 36\\ 129600\end{bmatrix}$$
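The first-step moment estimates and their bias correction can be checked in a few lines of plain Python. The variable names are illustrative; the inputs are the gradients and hyperparameters given above.

```python
beta1, beta2 = 0.94, 0.9878
g = [-6.0, -360.0]   # gradients for the first sample
m0 = [0.0, 0.0]      # m_0 = 0
v0 = [0.0, 0.0]      # v_0 = 0
t = 1

# Raw moment estimates m_1 and v_1.
m1 = [beta1 * m + (1 - beta1) * gi for m, gi in zip(m0, g)]
v1 = [beta2 * v + (1 - beta2) * gi ** 2 for v, gi in zip(v0, g)]

# Bias-corrected estimates.
m1_hat = [m / (1 - beta1 ** t) for m in m1]
v1_hat = [v / (1 - beta2 ** t) for v in v1]
print(m1, v1)          # approximately [-0.36, -21.6] [0.4392, 1581.12]
print(m1_hat, v1_hat)  # approximately [-6.0, -360.0] [36.0, 129600.0]
```

At $t = 1$ the bias correction exactly cancels the $(1-\beta)$ factor, so $\hat{m}_1$ equals the first gradient and $\hat{v}_1$ its elementwise square, which matches the hand calculation.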

Finally, the weight update is,

$$\theta_0 = 10 - 0.01 \cdot \frac{-6}{\sqrt{36} + 10^{-8}} \approx 10.01$$

$$\theta_1 = 1 - 0.01 \cdot \frac{-360}{\sqrt{129600} + 10^{-8}} \approx 1.01$$
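The weight update itself is a one-line elementwise expression. This sketch starts from the bias-corrected values computed above; the variable names are illustrative.

```python
import math

alpha, e = 0.01, 1e-8
theta = [10.0, 1.0]
m_hat = [-6.0, -360.0]
v_hat = [36.0, 129600.0]

# theta_j <- theta_j - alpha * m_hat_j / (sqrt(v_hat_j) + e)
theta = [th - alpha * m / (math.sqrt(v) + e) for th, m, v in zip(theta, m_hat, v_hat)]
print(theta)  # approximately [10.01, 1.01]
```

Because $\hat{m}_1 / \sqrt{\hat{v}_1}$ reduces to the sign of the gradient at the first step, both weights move by about $\alpha = 0.01$ even though their gradients differ by a factor of 60.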

The procedure is repeated until the weights converge.
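The repeated procedure can be sketched as a loop that applies the same per-sample Adam update to each row of the table in order and repeats for a fixed number of epochs. The fixed epoch count (200) stands in for a convergence test and, like the helper names `mse` and `train_adam` and the in-order sample visits, is an assumption; the text does not specify a stopping rule or sample order.

```python
import math

X = [60, 76, 85, 76, 50, 55, 100, 105, 45, 78, 57, 91, 69, 74, 112]
Y = [76, 72.3, 88, 60, 79, 47, 67, 66, 65, 61, 68, 56, 75, 57, 76]


def mse(theta):
    """Objective (1/n) * sum_i (f_theta(x_i) - y_i)^2."""
    return sum((theta[0] + theta[1] * x - y) ** 2 for x, y in zip(X, Y)) / len(X)


def train_adam(theta, alpha=0.01, beta1=0.94, beta2=0.9878, e=1e-8, epochs=200):
    """Per-sample Adam on the linear model, visiting samples in table order."""
    theta = list(theta)      # do not modify the caller's list
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    t = 0
    for _ in range(epochs):
        for x, y in zip(X, Y):
            t += 1
            r = theta[0] + theta[1] * x - y   # residual f_theta(x) - y
            g = [r, r * x]                    # gradient of 1/2 * r^2
            for j in range(2):
                m[j] = beta1 * m[j] + (1 - beta1) * g[j]
                v[j] = beta2 * v[j] + (1 - beta2) * g[j] ** 2
                m_hat = m[j] / (1 - beta1 ** t)
                v_hat = v[j] / (1 - beta2 ** t)
                theta[j] -= alpha * m_hat / (math.sqrt(v_hat) + e)
    return theta


start = [10.0, 1.0]
end = train_adam(start)
print(mse(start), mse(end))  # the objective is lower after training
```

The first update inside the loop reproduces $\theta \approx [10.01, 1.01]$ from the hand calculation. Since each update moves a weight by roughly $\alpha$ at most and the inputs are unscaled, many epochs may be needed before the weights settle.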

Applications

The Adam optimization algorithm is widely used as a replacement for SGD when training deep neural networks (DNNs). Adam combines the best properties of the AdaGrad and RMSP algorithms to provide an optimization algorithm that can handle sparse gradients on noisy problems. In many settings, Adam has been reported to perform better than other optimizers such as AdaGrad, SGD, and RMSP. Research is ongoing into adaptive optimizers for federated learning, where their performance is being compared. Federated learning is a privacy-preserving approach to machine learning in which training is done on the device itself, without sharing the data with a cloud server.

Variants of Adam

AdaMax

AdaMax[4] is a variant of the Adam algorithm, proposed in the original Adam paper, that uses an exponentially weighted infinity norm instead of the second-order moment estimate. The weighted infinity norm $u_t$ of the gradients $g_t$ is computed as

$$u_t = \max(\beta_2 \cdot u_{t-1}, |g_t|).$$

The parameter update then becomes

$$\theta_t = \theta_{t-1} - \frac{\alpha}{1-\beta_1^t}\cdot\frac{m_t}{u_t}.$$
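The AdaMax update can be sketched for a scalar parameter as below. The helper name `adamax_step` and the toy loss are assumptions; the defaults ($\alpha = 0.002$, $\beta_1 = 0.9$, $\beta_2 = 0.999$) are, to our understanding, the settings suggested for AdaMax in the Adam paper. No bias correction is applied to $u_t$, matching the formula above.

```python
def adamax_step(theta, m, u, grad, t, alpha=0.002, beta1=0.9, beta2=0.999):
    """One AdaMax step for a scalar parameter; t starts at 1.

    Assumes at least one nonzero gradient has been seen, otherwise u is 0.
    """
    m = beta1 * m + (1 - beta1) * grad       # first moment, as in Adam
    u = max(beta2 * u, abs(grad))            # exponentially weighted infinity norm
    theta = theta - (alpha / (1 - beta1 ** t)) * m / u
    return theta, m, u


# Toy loss L(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, m, u = 0.0, 0.0, 0.0
for t in range(1, 5001):
    w, m, u = adamax_step(w, m, u, 2 * (w - 3), t, alpha=0.01)
print(w)  # close to 3.0
```

Using a running maximum instead of a squared average means $u_t$ needs no extra constant in the denominator once a nonzero gradient has been seen.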

Nadam

The Nadam algorithm[5] was proposed in 2016. It incorporates Nesterov Accelerated Gradient (NAG)[6], a popular momentum-like variant of SGD, into the first-order moment term.
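A simplified Nadam step can be sketched as follows. This is an assumption-laden sketch: it uses a constant $\beta_1$ (Dozat's paper also describes a schedule for the momentum parameter) and the common textbook form in which Adam's $\hat{m}_t$ is replaced by the look-ahead $\beta_1 \hat{m}_t + (1-\beta_1) g_t / (1-\beta_1^t)$. The helper name `nadam_step` and the default values, borrowed from Adam, are hypothetical.

```python
import math


def nadam_step(w, m, v, grad, t, alpha=0.001, beta1=0.9, beta2=0.999, e=1e-8):
    """One simplified Nadam step for a scalar weight (constant beta1); t starts at 1."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Nesterov look-ahead: mix the corrected momentum with the current gradient.
    m_bar = beta1 * m_hat + (1 - beta1) * grad / (1 - beta1 ** t)
    w = w - alpha * m_bar / (math.sqrt(v_hat) + e)
    return w, m, v


# Toy loss L(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 5001):
    w, m, v = nadam_step(w, m, v, 2 * (w - 3), t, alpha=0.01)
print(w)  # close to 3.0
```

The only difference from the Adam sketch is the `m_bar` line, which gives the current gradient extra weight in the step.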

Conclusion

Adam is a variant of the gradient descent algorithm that has been widely adopted in the machine learning community. Adam can be seen as a combination of two other variants of gradient descent, SGD with momentum and RMSProp. Adam uses estimates of the first- and second-order moments of the gradient to adapt the parameter update. These moment estimates are computed as moving averages, $m_t$ and $v_t$, of the gradient and the squared gradient, respectively. In a variety of neural network training applications, Adam has shown faster convergence and greater robustness than other gradient descent algorithms, and it is often recommended as the default optimizer for training.[7]

References

1. Kingma, Diederik P., and Jimmy Ba. Adam: A Method for Stochastic Optimization. https://arxiv.org/pdf/1412.6980.pdf

2. Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. Deep Learning (Adaptive Computation and Machine Learning series). MIT Press, 2016.

3. Intuition of Adam Optimizer. GeeksforGeeks. https://www.geeksforgeeks.org/intuition-of-adam-optimizer/

4. Kingma, Diederik P., and Jimmy Ba. Adam: A Method for Stochastic Optimization. https://arxiv.org/pdf/1412.6980.pdf

5. Dozat, Timothy. Incorporating Nesterov Momentum into Adam. ICLR Workshop, no. 1, 2016, pp. 2013–16.

6. Nesterov, Yuri. A method of solving a convex programming problem with convergence rate O(1/k^2). In Soviet Mathematics Doklady, 1983, pp. 372-376.

7. "Neural Networks Part 3: Learning and Evaluation," CS231n: Convolutional Neural Networks for Visual Recognition, Stanford University, 2020.