LossScaleOptimizer

Author: Han Zeng (hz665), Tianyi Zhou (tz427), Bingzheng Wang (bw537), Regan Zhou (zz755), Emma Burford (ebb92) (ChemE 6800 Fall 2024)

Stewards: Nathan Preuss, Wei-Han Chen, Tianqi Xiao, Guoqing Hu

Introduction

Loss Scale Optimizer is mainly used to deal with numerical stability problems in Mixed Precision Training (MPT) of deep learning models. Mixed Precision Training uses both a lower-precision data type (float16, or FP16) and a standard-precision data type (float32, or FP32), which allows for faster training and reduced memory usage without sacrificing model accuracy[1]. However, the smaller dynamic range of FP16 may result in numerical underflow (i.e. the magnitude of a computed result is smaller than the smallest nonzero number that the floating-point format can represent), causing the gradients to become zero and preventing proper learning[2].

Loss Scaling works by multiplying the loss value by a scaling factor (the Loss Scale Factor) before backpropagation, so that every gradient computed from the loss is multiplied by the same factor. The purpose is to shift gradients that would be too small for FP16 into a range that FP16 can represent, thus avoiding numerical underflow[3].
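The underflow described above can be reproduced with a few lines of standard-library Python. This is a minimal sketch: the gradient value `1e-8` and the scaling factor `1024` are hypothetical numbers chosen for illustration, and `to_fp16` is a helper defined here that rounds a Python float to FP16 through the `struct` module's half-precision format.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


grad = 1e-8          # hypothetical gradient, below the FP16 representable range
loss_scale = 1024.0  # hypothetical loss scale factor

# Stored directly in FP16, the gradient underflows to zero.
unscaled_fp16 = to_fp16(grad)

# Scaled first, the gradient lands in a range FP16 can represent.
scaled_fp16 = to_fp16(grad * loss_scale)

# Dividing by the same factor in higher precision recovers the gradient.
recovered = scaled_fp16 / loss_scale

print(unscaled_fp16)  # 0.0: the gradient is lost
print(scaled_fp16)    # nonzero
print(recovered)      # close to 1e-8
```

The recovered value is not bit-exact, because the scaled gradient is still rounded to FP16, but it is no longer zero.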

Algorithm Discussion

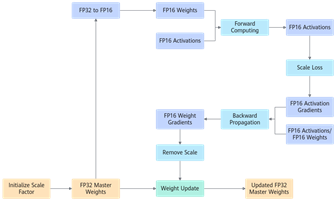

The core idea is to multiply the loss by a scaling factor before computing the gradients, thereby increasing the gradient values to avoid numerical underflow. Once the gradients are computed, they are divided by the same scaling factor before updating the model weights. This ensures that the gradient values are within a stable numerical range while retaining the original scale of updates.
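In symbols, this idea can be written as follows. The notation is introduced here for illustration: $L$ is the loss, $S$ the scaling factor, $w$ a model parameter, and $\eta$ the learning rate, with plain gradient descent assumed as the update rule.

```latex
\begin{aligned}
L_{\text{scaled}} &= S \cdot L, \\
\frac{\partial L_{\text{scaled}}}{\partial w} &= S \cdot \frac{\partial L}{\partial w}, \\
w &\leftarrow w - \eta \cdot \frac{1}{S} \cdot \frac{\partial L_{\text{scaled}}}{\partial w}
   = w - \eta \, \frac{\partial L}{\partial w}.
\end{aligned}
```

Because differentiation is linear, the factor $S$ cancels exactly in the update; it only changes the magnitude of the intermediate values that have to be stored in FP16.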

1. Keep the model parameters in float32 and cast a copy of them to float16.

2. Run forward propagation with the float16 model parameters.

3. Multiply the loss by the scaling factor.

4. Run backpropagation with the float16 model parameters to obtain the float16 parameter gradients.

5. Divide the parameter gradients by the scaling factor.

6. Update the float32 model parameters using the gradients computed in float16[4].
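The six steps above can be sketched for a one-parameter linear model in standard-library Python. Everything specific here is an assumption made for illustration: the squared-error loss `0.5 * (y_hat - y)**2`, the input and label values, the learning rate, and the helper names `to_fp16` and `train_step`. Ordinary Python floats (float64) stand in for the float32 master parameters, and FP16 arithmetic is emulated by rounding each intermediate result.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def train_step(w_master, b_master, x, y, lr, loss_scale):
    """One gradient-descent step for y_hat = w * x + b with loss scaling."""
    # 1. Cast copies of the master parameters (and the data) to FP16.
    w16, b16 = to_fp16(w_master), to_fp16(b_master)
    x16, y16 = to_fp16(x), to_fp16(y)

    # 2. Forward propagation in FP16.
    y_hat = to_fp16(w16 * x16 + b16)
    err = to_fp16(y_hat - y16)  # dL/dy_hat for L = 0.5 * (y_hat - y)^2

    # 3. Scale the loss; its derivative w.r.t. y_hat is scaled by the same factor.
    scaled_err = to_fp16(loss_scale * err)

    # 4. Backpropagation in FP16 (scaled gradients).
    grad_w16 = to_fp16(scaled_err * x16)
    grad_b16 = scaled_err

    # 5. Divide the gradients by the scaling factor, in higher precision.
    grad_w = grad_w16 / loss_scale
    grad_b = grad_b16 / loss_scale

    # 6. Update the master parameters.
    return w_master - lr * grad_w, b_master - lr * grad_b


# Hypothetical data chosen so that the unscaled weight gradient is 2**-26,
# which is too small for FP16.
x, y = 2.0**-13, -(2.0**-13)

w_plain, b_plain = train_step(0.0, 0.0, x, y, lr=0.1, loss_scale=1.0)
w_scaled, b_scaled = train_step(0.0, 0.0, x, y, lr=0.1, loss_scale=1024.0)

print(w_plain)   # 0.0: the weight gradient underflowed, so w never moves
print(w_scaled)  # nonzero: the scaled gradient survived FP16
```

In this sketch the bias gradient is large enough to survive in both runs, so only the weight update differs between the scaled and unscaled cases.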

Numerical Examples

For a traditional linear regression task, set up the model as:

$$\hat{y} = wx + b$$

where $w$ is the weight, $x$ is the input data, $b$ is the bias, and $\hat{y}$ is the predicted value.

The objective is to minimise the loss function, i.e. the MSE:

The gradient can be calculated as

Suppose the input data , the true label , and the initial parameters are and .

The predicted value is:

Without Loss Scale

Then the loss value is:

The gradient can be calculated as:

Use gradient descent to update the parameters and , assuming a learning rate of .

Weight update:

Bias update:

If the model is trained at FP16, there will be gradient underflow problems with the value of . This value will be approximated as 0.01004.

With Loss Scale

To avoid gradient underflow, we introduce Loss Scale, assuming that the Loss Scale factor is used.

The loss value is:

The gradient can be calculated as:

Using scaled gradient values for the weight update:

Using scaled gradient values for the bias update:

If the model is trained at FP16, the value can be stored normally.

Applications

Mixed Precision Training

When training with FP16, calculations are faster and FP16 data takes up less memory. However, this can also lead to a loss of numerical accuracy because of gradient underflow[5]. Loss Scale Optimizer can be used to keep training stable by scaling the loss function, which prevents gradients from becoming too small to represent during computation. For example, when training a large convolutional neural network with FP16 to accelerate the computation, Loss Scale Optimizer can dynamically adjust the loss scale to keep the gradients in a representable range.
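One common way to adjust the loss scale dynamically is to shrink it whenever a gradient overflows to infinity or NaN, and to grow it again after a run of overflow-free steps. The class below is a minimal sketch of that policy, not the implementation used by any particular framework; the name `DynamicLossScaler`, its parameters, and their default values are assumptions chosen for illustration.

```python
import math


class DynamicLossScaler:
    """Illustrative dynamic loss scale: back off on overflow, grow when stable."""

    def __init__(self, init_scale=2.0**15, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0  # consecutive steps without overflow

    def update(self, scaled_grads):
        """Adjust the scale; return True if the optimizer step should be applied."""
        overflow = any(math.isinf(g) or math.isnan(g) for g in scaled_grads)
        if overflow:
            # The scale was too large: skip this step and reduce the scale.
            self.scale *= self.backoff_factor
            self._good_steps = 0
            return False
        self._good_steps += 1
        if self._good_steps >= self.growth_interval:
            # Training has been stable for a while: try a larger scale.
            self.scale *= self.growth_factor
            self._good_steps = 0
        return True


# Hypothetical usage with a short growth interval so the effect is visible.
scaler = DynamicLossScaler(init_scale=1024.0, growth_interval=2)
applied = [
    scaler.update([0.1, float("inf")]),  # overflow: scale drops to 512
    scaler.update([0.1, 0.2]),           # stable step 1
    scaler.update([0.3, 0.1]),           # stable step 2: scale grows to 1024
]
print(applied, scaler.scale)
```

Skipping the update on overflow matters because the affected gradients carry no usable information; applying them would corrupt the parameters.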

Self-Supervised Learning

Self-supervised learning methods use a large amount of unlabelled data during training, which may cause instability during gradient computation[6]. Loss Scale Optimizer helps to adjust the scale of the loss function during training, avoiding instability caused by lack of precision, and ensuring that the network can converge smoothly.

Large-scale neural network training

When training large-scale neural networks (e.g., large models such as GPT and BERT), the number of model parameters and the volume of computation are very large, so training runs into memory and computational resource limitations[7]. Mixed precision training eases these limitations, and by using Loss Scale Optimizer alongside it, we can avoid the instability in gradient computation caused by the limited precision of FP16[8].

Commonly Used Tools With Loss Scale Optimizer

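| Tool | Description |
| --- | --- |
| TensorFlow | TensorFlow uses Loss Scale Optimizer to keep gradient updates stable. The tf.keras.mixed_precision API can handle mixed precision training automatically. |
| PyTorch | PyTorch supports mixed precision training and provides the torch.cuda.amp API for it. Within this API, the GradScaler component implements the functionality of Loss Scale Optimizer. |
| NVIDIA Apex | Apex is NVIDIA's PyTorch extension library, designed to accelerate deep learning training. It includes LossScaler as a key component for handling mixed precision training. |
| DeepSpeed | DeepSpeed is a deep learning optimization library developed by Microsoft. It supports mixed precision training at large scale and can further improve training stability and performance through LossScaleOptimizer. |
| Microsoft Azure Machine Learning | On the Azure cloud platform, users can implement mixed precision training through the AzureML SDK. |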

Conclusion

Loss Scale Optimizer has a wide range of applications in machine learning, especially for computationally intensive models. It makes mixed-precision computation feasible, which uses less memory and trains faster than full-precision training.

References

1. Micikevicius P, Narang S, Alben J, et al. Mixed precision training[J]. arXiv preprint arXiv:1710.03740, 2017.

2. Li H, Wang Y, Hong Y, et al. Layered mixed-precision training: a new training method for large-scale AI models[J]. Journal of King Saud University-Computer and Information Sciences, 2023, 35(8): 101656.

3. Das D, Mellempudi N, Mudigere D, et al. Mixed precision training of convolutional neural networks using integer operations[J]. arXiv preprint arXiv:1802.00930, 2018.

4. Principle of mixed precision and calculation process (AMP)[EB/OL]. [2024-11-29]. https://www.hiascend.com/document/detail/zh/Pytorch/60RC2/ptmoddevg/trainingmigrguide/PT_LMTMOG_0077.html.

5. Mellempudi N, Srinivasan S, Das D, et al. Mixed precision training with 8-bit floating point[J]. arXiv preprint arXiv:1905.12334, 2019.

6. Liu Q, Millis B A, Asad Z, et al. Integrate memory efficiency methods for self-supervised learning on pathological image analysis[C]//Medical Imaging 2022: Image Processing. SPIE, 2022, 12032: 695-701.

7. Nandakumar S R, Le Gallo M, Piveteau C, et al. Mixed-precision deep learning based on computational memory[J]. Frontiers in Neuroscience, 2020, 14: 406.

8. Li H, Wang Y, Hong Y, et al. Layered mixed-precision training: a new training method for large-scale AI models[J]. Journal of King Saud University-Computer and Information Sciences, 2023, 35(8): 101656.