LossScaleOptimizer

Authors: Han Zeng (hz665), Tianyi Zhou (tz427), Bingzheng Wang (bw537), Regan Zhou (zz755), Emma Burford (ebb92) (ChemE 6800 Fall 2024)

Stewards: Nathan Preuss, Wei-Han Chen, Tianqi Xiao, Guoqing Hu

Introduction

A Loss Scale Optimizer maintains numerical stability in Mixed Precision Training (MPT) by scaling the loss value so that gradients stay within the representable range of half-precision (FP16) arithmetic, preventing underflow or overflow[1]. Mixed Precision Training uses both single-precision (FP32) and half-precision (FP16) floating-point numbers within the same model, a practice that can significantly reduce computation time and memory usage without substantially degrading model accuracy[2]. This balanced approach enables large-scale and high-complexity neural networks to be trained more efficiently, facilitating rapid experimentation and deployment.

However, the limited dynamic range of FP16 arithmetic introduces numerical challenges. When gradients or intermediate values become exceedingly small, they may underflow, collapsing to zero and halting effective learning. When they become exceedingly large, they may overflow, producing infinite or NaN (Not a Number) values that impede convergence[3]. These problems are especially pronounced in larger or more sensitive models, where unstable gradients can undermine training progress and final model quality.

To address these issues, loss scaling multiplies the loss function by a predetermined or adaptively adjusted scaling factor before backpropagation. By doing so, gradients that would otherwise fall below the representable range of FP16 are “lifted” into a stable interval, thus preserving essential gradient information and supporting stable training dynamics[4]. Dynamic loss scaling methods can continually optimize this scaling factor during training, adjusting to the evolving conditions within a model’s parameter space and ensuring stable training across diverse architectures and datasets[5].
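The effect can be illustrated with a few lines of Python. This is a minimal sketch, not taken from the cited sources: it emulates FP16 rounding with the standard library's `struct` half-precision format, and the helper name `to_fp16`, the gradient magnitude, and the scaling factor are all hypothetical values chosen for illustration.

```python
import math
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    try:
        return struct.unpack("<e", struct.pack("<e", value))[0]
    except (OverflowError, struct.error):
        # struct refuses magnitudes beyond the FP16 range; model them as +/-inf.
        return math.copysign(math.inf, value)


small_gradient = 1e-8   # hypothetical gradient magnitude, below the FP16 range
loss_scale = 1024.0     # hypothetical scaling factor

unscaled = to_fp16(small_gradient)              # flushes to zero in FP16
scaled = to_fp16(small_gradient * loss_scale)   # survives as a small nonzero value
recovered = scaled / loss_scale                 # unscale in higher precision
overflowed = to_fp16(70000.0)                   # above the largest finite FP16 value (65504)

print(unscaled, scaled, recovered, overflowed)
```

Without scaling the value is stored as zero, while the scaled value survives and, after division by the same factor in higher precision, recovers the original magnitude to within FP16 rounding error. The last line shows the opposite failure: a value that is too large becomes infinite.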

This concept has proven effective in tandem with layered mixed-precision strategies, wherein different layers of the network may employ varying precisions, and also when integrated with integer-based mixed precision methods aimed at enhancing convolutional neural network training[6]. State-of-the-art deep learning frameworks, including PyTorch and TensorFlow, have implemented automated mixed precision capabilities and offer built-in functionalities for loss scaling, streamlining its adoption and refinement in practical applications[5].

Algorithm Discussion

The Loss Scale Optimizer applies a scaling factor to the loss value to mitigate numerical instability in Mixed Precision Training workflows. Multiplying the loss by this factor before gradient computation “lifts” the gradients calculated in half-precision (FP16) arithmetic into a numerically stable range. Once the gradients are computed, they are divided by the same factor to restore their original magnitudes, ensuring that the weight updates faithfully reflect the intended adjustments. This process allows models to capitalize on the throughput and memory savings of half-precision computations without succumbing to underflow or overflow issues[1]. In practice, such strategies are often automated through frameworks or integrated toolsets, enabling more straightforward adoption and tuning.
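The reason that dividing by the scaling factor restores the intended update follows from the linearity of the gradient. As a short sketch, write $S$ for the scaling factor, $L$ for the loss, and $\theta$ for the model parameters (this notation is introduced here for illustration):

```latex
\nabla_\theta \left( S \cdot L \right) = S \, \nabla_\theta L
\quad\Longrightarrow\quad
\frac{1}{S} \, \nabla_\theta \left( S \cdot L \right) = \nabla_\theta L
```

Because $S$ is constant with respect to $\theta$, the unscaled gradient equals the original gradient in exact arithmetic. Scaling only changes which intermediate values are representable in FP16 during backpropagation.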

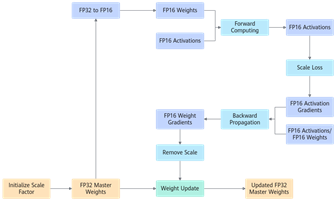

A representation of the algorithm’s procedure is as follows[7]:

1. Parameter Casting: Copy the model’s parameters and convert them to FP16 precision. At the same time, maintain a master set of parameters in FP32 to preserve full numerical fidelity.

2. Forward Pass in FP16: Perform forward propagation using the FP16 parameters. This step accelerates computation and reduces memory usage, yet can lead to reduced representational range.

3. Loss Scaling: Multiply the loss by a predetermined or dynamically adjusted scaling factor. By increasing the magnitude of the loss value, subsequent gradients are shifted into a range that FP16 can represent accurately, thus minimizing the risk of gradients disappearing due to underflow.

4. Backpropagation with Scaled Loss: Compute gradients with respect to the FP16 parameters. Because the loss was scaled beforehand, these gradients remain numerically stable, avoiding zero gradients that would otherwise derail training.

5. Unscaling the Gradients: Divide the computed gradients by the scaling factor, restoring them to the correct relative magnitudes intended by the original loss function.

6. Parameter Update in FP32: Update the master FP32 parameters using the unscaled gradients. This step ensures that while computations may occur at half precision for efficiency, the ultimate parameter updates—crucial for convergence—retain the stability of full-precision arithmetic. The updated FP32 parameters are then recast to FP16 as needed for subsequent forward passes.
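The six steps above can be sketched for a one-parameter linear model. This is an illustrative sketch under stated assumptions rather than a framework implementation: FP16 arithmetic is emulated by rounding each intermediate result with the standard library's `struct` half-precision format, ordinary Python floats stand in for the FP32 master copy, and the names `to_fp16` and `mixed_precision_step` as well as all numeric values are hypothetical.

```python
import math
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    try:
        return struct.unpack("<e", struct.pack("<e", value))[0]
    except (OverflowError, struct.error):
        return math.copysign(math.inf, value)


def mixed_precision_step(w_master, b_master, x, y, lr, loss_scale):
    """One SGD step on L = (y_hat - y)^2 with y_hat = w*x + b, following steps 1-6."""
    # 1. Parameter casting: FP16 working copies; the masters keep full precision.
    w16, b16 = to_fp16(w_master), to_fp16(b_master)
    x16, y16 = to_fp16(x), to_fp16(y)
    # 2. Forward pass in FP16 (every intermediate result is rounded to FP16).
    y_hat = to_fp16(to_fp16(w16 * x16) + b16)
    error = to_fp16(y_hat - y16)
    # 3. Loss scaling: backpropagating loss_scale * L seeds the chain rule
    #    with loss_scale instead of 1.
    upstream = to_fp16(loss_scale * to_fp16(2.0 * error))
    # 4. Backpropagation in FP16: scaled gradients with respect to w and b.
    grad_w16 = to_fp16(upstream * x16)
    grad_b16 = upstream
    # 5. Unscaling: divide by the same factor, in full precision.
    grad_w = grad_w16 / loss_scale
    grad_b = grad_b16 / loss_scale
    # 6. Parameter update on the full-precision master copies.
    return w_master - lr * grad_w, b_master - lr * grad_b


# Hypothetical values chosen so that the raw FP16 gradient for w falls below
# the smallest FP16 subnormal (2**-24) and is flushed to zero.
x, y = 2.0 ** -15, 2.0 ** -12
w_plain, b_plain = mixed_precision_step(0.0, 0.0, x, y, lr=1.0, loss_scale=1.0)
w_scaled, b_scaled = mixed_precision_step(0.0, 0.0, x, y, lr=1.0, loss_scale=1024.0)
print(w_plain, w_scaled)
```

With these inputs the unscaled step leaves `w` at zero because its gradient underflows, whereas the scaled step moves `w` by 2**-26. The update to `b` is identical in both cases, since its gradient is large enough to be representable either way.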

This cyclical process, guided by loss scaling, guards against the deleterious effects of limited dynamic range in FP16 computations. It has proven effective across a variety of architectures, from convolutional neural networks to transformer-based models, and is frequently combined with dynamic loss scaling techniques or layered precision strategies for enhanced robustness and adaptability[5]. With many deep learning frameworks offering built-in or easily configurable tools for loss scaling, incorporating this approach into the training pipeline has become both more accessible and more reliable.
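Dynamic loss scaling is commonly described as a simple feedback rule: shrink the scale when non-finite gradients appear, and grow it again after a run of clean steps. The sketch below assumes that rule. The class name `DynamicLossScale`, its parameter names, and its default values are hypothetical and do not reproduce any particular framework's API.

```python
import math


class DynamicLossScale:
    """Illustrative dynamic loss-scale controller (names and defaults are hypothetical)."""

    def __init__(self, init_scale=2.0 ** 15, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=1000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._clean_steps = 0  # consecutive steps without inf/NaN gradients

    def update(self, scaled_grads):
        """Adjust the scale; return True if the optimizer step should be applied."""
        if any(not math.isfinite(g) for g in scaled_grads):
            # Overflow detected: skip this step and shrink the scale.
            self.scale *= self.backoff_factor
            self._clean_steps = 0
            return False
        self._clean_steps += 1
        if self._clean_steps >= self.growth_interval:
            # A long enough run of finite gradients: try a larger scale.
            self.scale *= self.growth_factor
            self._clean_steps = 0
        return True


scaler = DynamicLossScale(init_scale=8.0, growth_interval=2)
history = []
for grads in ([float("inf"), 1.0], [0.5, 0.25], [0.1, 0.2]):
    applied = scaler.update(grads)
    history.append((applied, scaler.scale))
print(history)
```

In this short run the first step overflows, so it is skipped and the scale is halved from 8 to 4. The next two steps are clean, and after the second one the scale grows back to 8. Skipping the update on overflow keeps infinite or NaN gradients out of the master parameters.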

Numerical Examples

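For a traditional linear regression task, set up the model as:

$$\hat{y} = wx + b$$

where $w$ is the weight, $x$ is the input data, $b$ is the bias, and $\hat{y}$ is the predicted value.

The objective is to minimise the loss function, i.e. the MSE, which for a single sample with true label $y$ is:

$$L = (y - \hat{y})^2$$

The gradient can be calculated as:

$$\frac{\partial L}{\partial w} = -2(y - \hat{y})x, \qquad \frac{\partial L}{\partial b} = -2(y - \hat{y})$$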

Suppose the input data $x$, the true label $y$, and the initial parameters $w$ and $b$ are given.

The predicted value is:

$$\hat{y} = wx + b$$

Without Loss Scale

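Then the loss value is:

$$L = (y - \hat{y})^2$$

The gradient can be calculated as:

$$\frac{\partial L}{\partial w} = -2(y - \hat{y})x, \qquad \frac{\partial L}{\partial b} = -2(y - \hat{y})$$

Use gradient descent to update the parameters $w$ and $b$, assuming a learning rate of $\eta$.

$w$ update:

$$w \leftarrow w - \eta \frac{\partial L}{\partial w}$$

$b$ update:

$$b \leftarrow b - \eta \frac{\partial L}{\partial b}$$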

If the model is trained at FP16, there will be gradient underflow problems with the value of . This value will be approximated as 0.01004.

With Loss Scale

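To avoid gradient underflow, we introduce Loss Scale, assuming that a Loss Scale factor $S$ is used.

The loss value is:

$$L_{\text{scaled}} = S \cdot L = S (y - \hat{y})^2$$

The gradient can be calculated as:

$$\frac{\partial L_{\text{scaled}}}{\partial w} = -2S(y - \hat{y})x, \qquad \frac{\partial L_{\text{scaled}}}{\partial b} = -2S(y - \hat{y})$$

Using scaled gradient values for the $w$ update, with learning rate $\eta$:

$$w \leftarrow w - \frac{\eta}{S} \frac{\partial L_{\text{scaled}}}{\partial w}$$

Using scaled gradient values for the $b$ update:

$$b \leftarrow b - \frac{\eta}{S} \frac{\partial L_{\text{scaled}}}{\partial b}$$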

If the model is trained at FP16, the value can be stored normally.

Applications

Mixed Precision Training

Mixed Precision Training leverages half-precision floating-point formats (FP16), significantly reducing memory usage and accelerating computational throughput. However, directly employing FP16 arithmetic without additional measures can lead to numerical instability, such as gradient underflow, especially in large and complex networks[8]. By applying a Loss Scale Optimizer, the training process incorporates a dynamic scaling factor to the loss function. This step ensures that gradients, which might otherwise vanish due to limited precision, are maintained within a stable and representable range. As a result, practitioners can achieve faster training and reduced resource consumption without sacrificing model quality or stability[2]. For example, when training large convolutional neural networks, scaling the loss value helps keep the gradient computations numerically robust, even under high-complexity workloads that push computational boundaries.

Self-Supervised Learning

Self-supervised learning involves extracting meaningful representations from large volumes of unlabeled data. Although this paradigm fosters flexible and scalable model training, it can introduce gradient instabilities due to increased complexity and the absence of reliable supervisory signals[9]. Implementing a Loss Scale Optimizer mitigates these issues by preventing gradients from collapsing to zero and supporting stable convergence under the limited dynamic range of half-precision computations. By maintaining numerical stability, the optimizer facilitates more efficient model pre-training, enabling models to leverage abundant unlabeled data without succumbing to precision-induced training disruptions.

Large-Scale Neural Network Training

Scaling up models to the magnitude of architectures like GPT or BERT expands the frontier of what neural networks can achieve but amplifies the challenges associated with memory constraints and computational overhead[10]. Under these circumstances, mixed precision strategies, combined with Loss Scale Optimizers, help alleviate the instability of gradient computations that emerges from working within narrower floating-point ranges[10]. By continuously adjusting the scaling factor, the optimizer ensures that the gradients retain their representational integrity, even as the model’s size and complexity increase. This approach supports the efficient and stable training of large-scale networks, broadening the scope of advanced deep learning applications and models designed to handle vast and intricate datasets.

Commonly Used Tools With Loss Scale Optimizer

| Tool | Description |
| --- | --- |
| TensorFlow | TensorFlow uses the Loss Scale Optimizer to ensure the stability of gradient updates. The tf.keras.mixed_precision API can handle mixed precision training automatically. |
| PyTorch | PyTorch supports mixed-precision training through the torch.cuda.amp API, whose GradScaler component implements the function of a Loss Scale Optimizer. |
| NVIDIA Apex | Apex is a PyTorch extension library from NVIDIA specifically designed to accelerate deep learning training. It includes LossScaler as a key component for handling mixed-precision training. |
| DeepSpeed | DeepSpeed is a deep learning optimisation library developed by Microsoft. It supports mixed-precision training at large scale and uses loss scaling to further improve the stability and performance of training. |
| Microsoft Azure ML | On the Azure cloud platform, users can implement mixed precision training through the AzureML SDK. |

Conclusion

The Loss Scale Optimizer represents a critical advancement in managing the numerical stability challenges inherent to Mixed Precision Training. By scaling the loss and subsequently unscaling the gradients, it keeps gradients within representable ranges, preventing underflow and overflow issues that can undermine model convergence. This approach enables deep neural networks, particularly those at the forefront of complexity and computational demand, to train efficiently with half-precision arithmetic, yielding reductions in both memory footprint and overall training time[1]. The ease of integration with modern deep learning frameworks and complementary techniques, such as layered precision strategies and dynamic loss scaling, extends the applicability of this method across a wide array of architectures and tasks[5]. As the field continues to innovate and refine mixed precision strategies, the Loss Scale Optimizer stands as a crucial component that supports stable, resource-efficient training, ultimately broadening the horizons for large-scale, high-performance deep learning systems.

References

1. Micikevicius P, Narang S, Alben J, et al. Mixed precision training. arXiv preprint arXiv:1710.03740, 2017.

2. NVIDIA Developer Blog. “Mixed Precision Training.” Available: https://developer.nvidia.com/mixed-precision-training

3. Li H, Wang Y, Hong Y, et al. Layered mixed-precision training: a new training method for large-scale AI models. Journal of King Saud University-Computer and Information Sciences, 2023, 35(8): 101656.

4. Jia X, Thomas S, Yao Z, et al. Highly Scalable Deep Learning Training System with Mixed-Precision: Training ImageNet in Four Minutes. arXiv preprint arXiv:1807.11205, 2018.

5. Paszke A, Gross S, Massa F, et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Advances in Neural Information Processing Systems, 2019.

6. Das D, Mellempudi N, Mudigere D, et al. Mixed precision training of convolutional neural networks using integer operations. arXiv preprint arXiv:1802.00930, 2018.

7. Principle of mixed precision and calculation process (AMP). Accessed 2024-11-29. https://www.hiascend.com/document/detail/zh/Pytorch/60RC2/ptmoddevg/trainingmigrguide/PT_LMTMOG_0077.html

8. Nandakumar S R, Le Gallo M, Piveteau C, et al. Mixed-precision deep learning based on computational memory. Frontiers in Neuroscience, 2020, 14: 406.

9. Liu Q, Millis B A, Asad Z, et al. Integrate memory efficiency methods for self-supervised learning on pathological image analysis. In: Medical Imaging 2022: Image Processing. SPIE, 2022, 12032: 695-701.

10. Mellempudi N, Srinivasan S, Das D, et al. Mixed precision training with 8-bit floating point. arXiv preprint arXiv:1905.12334, 2019.