Bayesian optimization

Author: Brad Edgerton (bme44), Sochetra Sor (ss3437), Jerry Feng (zf245), James Chen (jzc28) (ChemE 6800 Fall 2024)

Stewards: Nathan Preuss, Wei-Han Chen, Tianqi Xiao, Guoqing Hu

Introduction

Bayesian Optimization (BO) is a probabilistic model-based method for the optimization of objective functions that are computationally expensive and prevent thorough evaluation. It has found its place in optimization by handling objectives that are costly to compute and function as “black boxes” without an efficient mechanism to estimate their gradient [1].

The origins of BO trace back to the 1960s, with foundational work from H.J. Kushner and his introduction of a GP Model for noisy, one-dimensional optimization and leveraging Bayesian decision theory to derive optimization policies. V.R. Šaltenis expanded these ideas in the 1970s by introducing the Expected Improvement (EI) acquisition function and demonstrating its effectiveness through empirical studies on high-dimensional problems. An J. Mockus further developed the field by endorsing the one-step lookahead approach with the knowledge gradient, emphasizing its practical use in resource-constrained settings where it aligned with EI [1].

BO has experienced a modern resurgence driven by advancements in machine learning, computational power, and the increasing demand to optimize complex systems across fields like artificial intelligence, robotics, and materials discovery, particularly excelling in scenarios with costly function evaluations, such as hyperparameter tuning, engineering design, and experimental sciences.

The significance of Bayesian Optimization lies in its ability to solve problems where traditional optimization techniques fail due to limited resources or the black-box nature of the objective function. For example, tuning the hyperparameters of a neural network involves computationally intensive evaluations, often requiring substantial time and resources. BO provides a systematic and efficient framework to minimize the number of evaluations while still identifying near-optimal solutions [1].

Algorithm Discussion

Overview

The BO algorithm consists of 3 Steps:

1. Surrogate Model (Response Surface Model)

When optimizing our objective function, the most ideal situation would be to know the exact shape (i.e., probability distribution) of the said objective function, for which it can simply use gradient ascent/descent to obtain the global maximum/minimum. A surrogate model allows for approximating the shape of the true objective function from a sample of hyperparameters-objective value pairs. As an extreme example, exhaustive grid search returns a one-to-one projection of all possible hyperparameters to the corresponding objective value.

2. Acquisition Function (Selection Function)

The second step would be to use the samples of objective function obtained in Step 1 to smartly select the next sample. This is done by selecting the sample that maximizes a certain acquisition function (i.e., select where to sample). One thing to note is that the acquisition function is calculated based on the surrogate function, instead of the true objective function (since the true objective function is unknown).

3. Updating the Surrogate Model

After getting a smart hyperparameter-objective value sample pair, it is added to the surrogate model training data to update the model. This process is repeated until maximum iteration/computing time is reached.

The pseudocode for the algorithm is shown below:

where f represents the objective function, represents the fitted surrogate model at iteration t, T represents the maximum allowed iteration, A represents the acquisition function, x represents a new sample of hyperparameter-objective value pairs, represent the history of the hyperparameter-objective value samples at iteration t.

Major challenges in BO include scalability to high-dimensional problems, handling noise in evaluations, and balancing the exploration-exploitation trade-off inherent in the optimization process. These challenges, however, are addressed through advancements in surrogate models, such as scalable approximations to GP or alternative probabilistic models.

Surrogate Model

GPs are the most common choices when it comes to surrogate model selection [2][3]. One notable property of GP is that it is closed, in other words a f modeled as a GP remains a GP after conditioned on sample [2].

GP assumes the prior distribution of points to be a multivariate normal distribution, from which the collection of hyperparameter-objective value pairs (x, f(x)) were drawn randomly. A mean function, and a covariance function, is evaluated at each pair. The prior distribution is then

At a new point x*, given the observations, a posterior probability distribution can be derived. By Bayes’ rule, it can be shown that [4]

For the mean and the covariance function, is usually modeled simply by , although a low-order polynomial of x may be added. is usually modeled by a Gaussian kernel, , where represents the squared Euclidean distance. Some other , like the Màtern kernel may also be used.

Acquisition Function

The most commonly used acquisition function is the EI function. At a new point (x,f), the EI is given by:

where f** represents the current best objective value, represents the conditional probability distribution of f under the surrogate model of choice.

Another commonly used acquisition function is the Knowledge Gradient (KG), which is defined as follows given and a new x:

where corresponds to the current optimal x**, i.e., the one with the largest value. The x that is selected maximizes the KG. The KG is commonly used under a not-noise-free scenario with a risk-neutral evaluation criteria (i.e., the only concern is the expectation) [3][5].

Numerical Examples

Setup

A numerical example is constructed based on [6]. The package implements the BO algorithm with a GP surrogate function. It has the ability to customize the acquisition function.

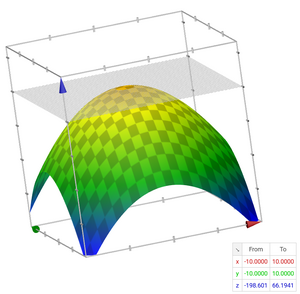

Efforts are made to maximize the function (which will be unknown to us in the real world):

The function is optimized using both Expected Improvement and another function called Upper Confidence Bound (UCB). This can be done with the BayesianOptimization function defined in the package. By defining the bounded region of the parameter space and selecting the appropriate acquisition function, the optimizer can be optimized with a set number of iterations.

Results

| Acquisition Function | Surrogate Model | f(x) | x | y |

| Upper Confidence Bound | Gaussian Process | 0.9999 | 0.0035 | 1.0004 |

| Expected Improvement (𝜉 = 0.05) | Gaussian Process | 0.9980 | 0.0069 | 1.0444 |

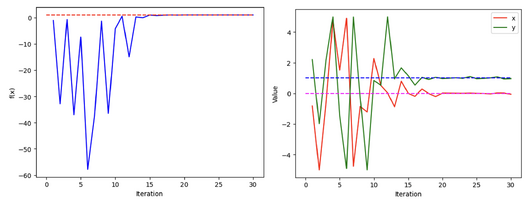

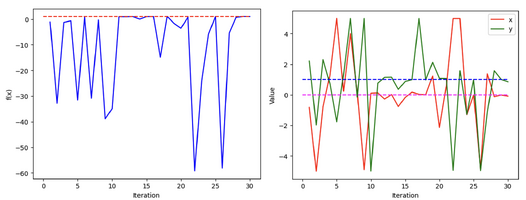

As mentioned in the algorithm section, a BO algorithm does not give us the exact optimal solution, but a “good enough” approximation relatively quickly. As shown, BO can be applied on both univariate and multivariate functions. The algorithm was run for 30 iterations:

Observations

1. For this objective function, UCB seems to be a better choice for the acquisition function than the popular Expect Improvement (EI) function, with UCB converging faster and producing a solution closer to the true optimum eventually.

2. Increasing the number of iterations does not necessarily make the solution better. For the EI acquisition function, it approached the optimum solution in 12 iterations and “stayed” in the vicinity for a few iterations, but failed to converge and displayed a large fluctuation in both x and y.

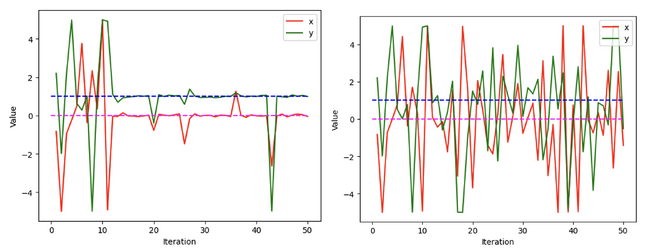

3. As shown in the code, A parameter 𝜉 can be customized for the EI acquisition function. Literature mentions that a low 𝜉 prefers “exploitation”, while a high 𝜉 prefers “exploration” [7]. As shown below, 𝜉 = 0.005 allowed the algorithm to reach the optimum quickly, and stayed in the vicinity of the optimum throughout almost all iterations. On the other hand, 𝜉 = 0.5 made the algorithm “explore” a lot more values. A high 𝜉 might be useful for more complex objective functions to ensure that the algorithm is not “trapped” in local mini/maximums.

Application

BO has become vital to optimization of functions in a variety of fields. It is the most useful in scenarios where each iteration of the evaluation is costly in terms of time, resources, or computation. The most prominent application of BO comes in tuning hyperparameters of machine learning models. The problem lies in the high-dimensional and non-convex nature of hyperparameters such as learning rates and regularization coefficients, most commonly. Their values can drastically change the model, so it is important to optimize them appropriately. Snoek et al. (2012) demonstrated the effectiveness of BO in tuning neural network hyperparameters that resulted in state-of-the-art performance on benchmark datasets. Several hyperparameters were targeted including learning rate, momentum, weight decay, number of layers, number of units per layer, and other parameters specific to the architecture of the models. GPs were used to model the validation error as a function of the hyperparameters selected, and the EI criterion was used to select the next set of hyperparameters. BO found hyperparameter combinations that gave lower validation errors compared to traditional tuning techniques and had fewer function evaluations, reducing time complexity [8].

Another major area of application is the optimization of engineering design where BO aids in optimizing design parameters that would otherwise require costly simulations or physical experiments. This is applicable to all forms of engineering where design is crucial such as aerospace, automotive design, and structural engineering. According to Edgar et al. (2001), optimization plays a crucial role in enhancing the efficiency and safety of chemical processes by systematically adjusting design parameters. Edgar et al. (2001) present a case study on the optimization of distillation column design. The basic design parameters are the number of stages, reflux ratio, feed stage location, and column diameter. The objective function is the total annual cost, and while the complex and nonlinear relationship between the parameters and the function poses a challenge, BO is well-suited to handle such a problem. By fitting a GP to initial design points, and selecting an appropriate acquisition function, optimal hyperparameters can be determined through iteration [9].

Similarly, in fields like chemistry and material science, experiments can be costly and time consuming. In this case, Bayesian Optimization can be utilized by selecting apt experimental conditions to conduct experiments under which are analogous to selecting hyperparameters in machine learning models. For instance, Gomez-Bombarelli et al (2018) proposed using BO to design novel organic molecules with specific electronic properties by altering and optimizing molecular structures. In this case, the molecular structure, the functional groups, and the bond angles and lengths were chosen as the parameters. Since electronic properties are nonlinearly dependent on molecular structures, and quantum mechanical calculations are computationally intensive, BO is appropriate. By defining a particular electronic property as the objective function, initializing a surrogate model, and selecting an acquisition function, the parameters can be found through iterative optimization [10].

Conclusion

The Bayesian Optimization process is described in three steps: build a surrogate model, define an acquisition function; and update the surrogate model. The surrogate model is an approximation of the actual, unknown objective function. Samples obtained from the surrogate model can then be plugged into an acquisition function, the sample that provides the largest value will be selected as the next sample. The model is then updated and the process repeated until an optimal value is achieved.

BO has been shown to be effective when computing costly objectives. In recent times, it has contributed greatly to machine learning algorithms by reducing the amount of resources needed to compute a solution. BO does all this while taking into account noise and uncertainty. When used inside the bounds of these limitations, BO can be an effective tool to solve what would otherwise be computationally expensive problems to solve. BO has found its place as a viable optimization method by solving objective functions that incur a high cost to compute.

References

[1] Garnett R. extensions and related settings. In: Bayesian Optimization. Cambridge University Press; 2023:1, 289-290, 292.

[2] James Bergstra, Rémi Bardenet, Yoshua Bengio, and Balázs Kégl. 2011. Algorithms for hyper-parameter optimization. In Proceedings of the 24th International Conference on Neural Information Processing Systems (NIPS'11). Red Hook, NY, USA, 2546–2554.

[3] Peter I. Frazier. 2018. A Tutorial on Bayesian Optimization. arXiv preprint. https://doi.org/10.48550/arXiv.1807.02811.

[4] R.J. Vanderbei, Linear Programming: Foundations and Extensions. Springer, 2008. (PDF at http://link.springer.com/book/10.1007/978-0-387-74388-2Links to an external site.)

[5] Rasmussen, C. and Williams, C. (2006). Gaussian Processes for Machine Learning. MIT Press, Cambridge, MA. ISBN: 9780262256834.

[6] https://github.com/bayesian-optimization/BayesianOptimization

[7] Berger, J. O. (2013). Statistical Decision Theory and Bayesian Analysis. Springer Science & Business Media.

[8] Snoek, J., Larochelle, H., & Adams, R. P. (2012). Practical Bayesian Optimization of Machine Learning Algorithms. Advances in Neural Information Processing Systems, 25, 2951-2959.

[9] T.F. Edgar, D.M. Himmelblau, L.S. Lasdon, Optimization of Chemical Processes. McGraw-Hill, 2001, 419-426, 437-450.

[10] Gómez-Bombarelli, R., Wei, J. N., Duvenaud, D., Hernández-Lobato, J. M., Sánchez-Lengeling, B., Sheberla, D., ... & Aspuru-Guzik, A. (2018). Automatic discovery of materials and catalysts by quantum mechanics and machine learning. ACS Central Science, 268-276.

![{\displaystyle E[max_{x},(\mu _{n+1}(x^{'}))-max_{x'}(\mu _{n}(x'))|x_{n+1}=x]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/91bbae4e90097c5f0e94ae8914d8b1de216b6232)