Genetic algorithm

Authors: Yunchen Huo (yh2244), Ran Yi (ry357), Yanni Xie (yx682), Changlin Huang (ch2269), Jingyao Tong (jt887) (ChemE 6800 Fall 2024)

Stewards: Nathan Preuss, Wei-Han Chen, Tianqi Xiao, Guoqing Hu

Introduction

The Genetic Algorithm (GA) is an optimization technique inspired by Charles Darwin's theory of evolution through natural selection[1]. First developed by John H. Holland in 1973[2], GA simulates biological processes such as selection, crossover, and mutation to explore and exploit solution spaces efficiently. Unlike gradient-based methods, GA does not rely on gradient information, making it particularly effective for solving complex, non-linear, and multi-modal problems.

GA operates on a population of candidate solutions, iteratively evolving toward better solutions by using fitness-based selection. This characteristic makes it suitable for tackling problems in various domains, such as engineering, machine learning, and finance. Its robustness and adaptability have established GA as a key technique in computational optimization and artificial intelligence research, as documented in Computational Optimization and Applications.

Algorithm Discussion

The GA was first introduced by John H. Holland[2] in 1973. It is an optimization technique based on Charles Darwin’s theory of evolution by natural selection.

Before diving into the algorithm, here are definitions of the basic terms.

- Gene: The smallest unit that makes up the chromosome (decision variable)

- Chromosome: A group of genes, where each chromosome represents a solution (potential solution)

- Population: A group of chromosomes (a group of potential solutions)

GA involves the following seven steps:

1. Initialization
   - Randomly generate the initial population for a predetermined population size.
2. Evaluation
   - Evaluate the fitness of every chromosome in the population to see how good it is. Higher fitness implies a better solution, making the chromosome more likely to be selected as a parent of the next generation.
3. Selection
   - Natural selection serves as the main inspiration of GA: chromosomes are randomly selected from the entire population for mating, and chromosomes with higher fitness values are more likely to be selected[3].
4. Crossover
   - The purpose of crossover is to create superior offspring (better solutions) by combining parts from each selected parent chromosome. There are different types of crossover, such as single-point and double-point crossover[3]. In single-point crossover, the parents exchange the segments that follow a single cut point. In double-point crossover, the parents exchange the segment that lies between two cut points[3].
5. Mutation
   - A mutation operator is applied to make random changes to the genes of the children's chromosomes, maintaining the diversity of the chromosomes in the population and enabling GA to find better solutions[3].
6. Insertion
   - Insert the mutated children chromosomes back into the population.
7. Repeat steps 2-6 until a stopping criterion is met:
   - Maximum number of generations reached
   - No significant improvement from newer generations
   - Expected fitness value is achieved
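The seven steps above can be sketched as a single loop. The following is a minimal illustration rather than a reference implementation: the function name `run_ga`, its parameter names and default values, the use of generational replacement for the insertion step, and the `square` test objective are all assumptions made for this sketch.

```python
import random

def run_ga(fitness, n_bits, pop_size=20, p_cross=0.8, p_mut=0.02,
           max_gens=100, patience=20, target=None, seed=0):
    """Maximize a non-negative `fitness` over bit lists of length `n_bits`."""
    assert pop_size % 2 == 0, "pairs of parents need an even population"
    rng = random.Random(seed)
    # Step 1: initialization
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best, best_fit, stale = None, float("-inf"), 0
    for _ in range(max_gens):  # stop: maximum number of generations
        # Step 2: evaluation
        fits = [fitness(c) for c in pop]
        top = max(fits)
        if top > best_fit:
            best, best_fit, stale = pop[fits.index(top)][:], top, 0
        else:
            stale += 1
        if stale >= patience:  # stop: no recent improvement
            break
        if target is not None and best_fit >= target:  # stop: target reached
            break
        # Step 3: roulette-wheel selection (tiny offset avoids all-zero weights)
        parents = rng.choices(pop, weights=[f + 1e-12 for f in fits], k=pop_size)
        # Step 4: single-point crossover on consecutive pairs
        children = []
        for a, b in zip(parents[0::2], parents[1::2]):
            a, b = a[:], b[:]
            if rng.random() < p_cross:
                cut = rng.randint(1, n_bits - 1)
                a[cut:], b[cut:] = b[cut:], a[cut:]
            children += [a, b]
        # Step 5: bit-flip mutation
        for child in children:
            for i in range(n_bits):
                if rng.random() < p_mut:
                    child[i] ^= 1
        # Step 6: insertion (here the children replace the whole population)
        pop = children
    return best, best_fit


def square(bits):
    """Illustrative objective: the square of the integer encoded by the bits."""
    return int("".join(map(str, bits)), 2) ** 2

best, best_fit = run_ga(square, n_bits=5, target=31 ** 2)
print(best, best_fit)
```

Replacing the whole population with the children is only one possible insertion policy; keeping the best parents alongside the children is a common alternative.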

Genetic Operators:

Steps 3-5 of GA involve techniques for changing the genes of chromosomes to create new generations. The functions applied to the population are called genetic operators. The main types are the selection operator, the crossover operator, and the mutation operator. Below are some widely used operators:

- Selection Operator
  - Well-known selection operators include roulette wheel, rank, and tournament selection. Roulette wheel selection allocates strings onto a wheel in proportion to their fitness values, then randomly selects solutions for the next generation[4].
- Crossover Operator
  - Single-point and double-point crossover operators were introduced in the algorithm above. There is also a k-point crossover, which selects multiple points and swaps the segments between those points of the parent chromosomes to generate new offspring[4]. Other well-known crossover operators are uniform crossover, partially matched crossover, order crossover, and shuffle crossover[4].
- Mutation Operator
  - The mutation operator introduces variability into the population, which helps the algorithm find the global optimum. The displacement mutation operator randomly chooses a substring from an individual and inserts it into a new position[4]. Other prominent mutation operators include simple inversion mutation, scramble mutation, binary bit-flipping mutation, and directed mutation[5].
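Two of the operators named above, k-point crossover and displacement mutation, can be sketched as follows. This is an illustrative sketch under stated assumptions: the function names are hypothetical, chromosomes are plain Python lists, and the reinsertion position in the displacement mutation is drawn uniformly, so it may occasionally coincide with the original position.

```python
import random

def k_point_crossover(p1, p2, k, rng):
    """Swap alternating segments of two equal-length parents at k cut points."""
    cuts = sorted(rng.sample(range(1, len(p1)), k))
    c1, c2 = list(p1), list(p2)
    swap, prev = False, 0
    for cut in cuts + [len(p1)]:
        if swap:  # every second segment is exchanged
            c1[prev:cut], c2[prev:cut] = c2[prev:cut], c1[prev:cut]
        swap, prev = not swap, cut
    return c1, c2

def displacement_mutation(chrom, rng):
    """Cut out a random substring and reinsert it at a random position."""
    n = len(chrom)
    i = rng.randrange(n)             # start of the substring
    j = rng.randrange(i + 1, n + 1)  # end (exclusive), at least one gene long
    segment, rest = chrom[i:j], chrom[:i] + chrom[j:]
    pos = rng.randrange(len(rest) + 1)
    return rest[:pos] + segment + rest[pos:]

rng = random.Random(0)
print(k_point_crossover([0] * 8, [1] * 8, 2, rng))
print(displacement_mutation(list("ABCDEF"), rng))
```

With `k=1` the crossover reduces to single-point crossover, and with `k=2` to double-point crossover. Displacement mutation keeps the same genes and only reorders them, which is why it is usually paired with permutation encodings.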

Numerical Example

1. Simple Example

We aim to maximize $f(x) = x^2$, where $x \in [0, 31]$. Chromosomes are encoded as 5-bit binary strings since the binary format of the maximum value 31 is 11111.

1.1 Initialization (Generation 0)

The initial population is randomly generated:

| Chromosome (Binary) | x (Decimal) |
|---|---|
| 10010 | 18 |
| 00111 | 7 |
| 11001 | 25 |
| 01001 | 9 |
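A minimal sketch of this initialization step, assuming chromosomes are stored as strings of `0` and `1` characters. The helper names `random_population` and `decode` and the seed are hypothetical, so a random draw will generally differ from the four chromosomes listed in the table.

```python
import random

def random_population(size, n_bits, rng):
    """Generate `size` random bit strings of length `n_bits`."""
    return ["".join(rng.choice("01") for _ in range(n_bits)) for _ in range(size)]

def decode(chrom):
    """Read a bit string as an unsigned integer, most significant bit first."""
    return int(chrom, 2)

rng = random.Random(42)
print(random_population(4, 5, rng))

# Decoding the population used in this example:
example = ["10010", "00111", "11001", "01001"]
print([decode(c) for c in example])  # [18, 7, 25, 9]
```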

1.2 Generation 1

1.2.1 Evaluation

Calculate the fitness values:

| Chromosome | $x$ | $f(x) = x^2$ |
|---|---|---|
| 10010 | 18 | 324 |
| 00111 | 7 | 49 |
| 11001 | 25 | 625 |
| 01001 | 9 | 81 |
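As a worked check of the first row, assuming the bits are read most significant first and the objective is $f(x) = x^2$ (which is what the tabulated values imply):

$$
\begin{aligned}
10010_2 &= 1\cdot 2^4 + 0\cdot 2^3 + 0\cdot 2^2 + 1\cdot 2^1 + 0\cdot 2^0 = 18,\\
f(18) &= 18^2 = 324.
\end{aligned}
$$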

1.2.2 Selection

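Use roulette wheel selection to choose parents for crossover. The selection probability of chromosome $i$ is its share of the total fitness:

$$P(i) = \frac{f(x_i)}{\sum_{j} f(x_j)}$$

Total fitness: $324 + 49 + 625 + 81 = 1079$

Compute the selection probabilities:

| Chromosome | Selection Probability | Approx. Value |
|---|---|---|
| 10010 | $324/1079$ | $0.3003$ |
| 00111 | $49/1079$ | $0.0454$ |
| 11001 | $625/1079$ | $0.5792$ |
| 01001 | $81/1079$ | $0.0751$ |

Cumulative probabilities:

| Chromosome | Cumulative Probability |
|---|---|
| 10010 | $0.3003$ |
| 00111 | $0.3457$ |
| 11001 | $0.9249$ |
| 01001 | $1.0000$ |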
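The roulette wheel for this generation can be sketched in a few lines, using the fitness values from the evaluation table. The helper name `spin` and the random numbers passed to it are illustrative assumptions, not the draws used in this example.

```python
import bisect
from itertools import accumulate

fitness = {"10010": 324, "00111": 49, "11001": 625, "01001": 81}
chromosomes = list(fitness)
total = sum(fitness.values())
probabilities = [f / total for f in fitness.values()]
cumulative = list(accumulate(probabilities))

def spin(r):
    """Return the chromosome whose cumulative interval contains r in [0, 1)."""
    index = bisect.bisect_right(cumulative, r)
    return chromosomes[min(index, len(chromosomes) - 1)]  # guard rounding at 1.0

print(total)
print([round(p, 4) for p in probabilities])
print([round(c, 4) for c in cumulative])
print(spin(0.31), spin(0.50))  # hypothetical random numbers
```

A chromosome with a wider slice of the wheel, here 11001 with about 58% of the total fitness, is selected more often, but weaker chromosomes still keep a non-zero chance.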

Random numbers for selection:

Selected parents:

- Pair 1: 00111 and 11001

- Pair 2: 11001 and 10010

1.2.3 Crossover

Crossover probability:

Pair 1: Crossover occurs at position 2.

- Parent 1: 00|111
- Parent 2: 11|001
- Children: Child 1: 00001, Child 2: 11111

Pair 2: No crossover.

- Children: Child 3: 11001, Child 4: 10010
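Single-point crossover on bit strings is a pair of slices. The sketch below assumes that "position 2" means the cut falls after the second bit, which is the reading consistent with the chromosomes inserted at the end of this generation; the function name is hypothetical.

```python
def single_point_crossover(p1, p2, point):
    """Exchange the tails of two equal-length bit strings after `point` bits."""
    return p1[:point] + p2[point:], p2[:point] + p1[point:]

# Pair 1 of Generation 1: parents 00111 and 11001, cut after bit 2
print(single_point_crossover("00111", "11001", 2))  # ('00001', '11111')
```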

1.2.4 Mutation

Mutation probability:

Child 1: 00001

- Mutations: Bit 1 and Bit 4 flip.
- Resulting chromosome: 10011.

Child 2: 11111

- Mutation: Bit 3 flips.
- Resulting chromosome: 11011.

Child 3: 11001

- Mutations: Bit 1 and Bit 5 flip.
- Resulting chromosome: 01000.

Child 4: 10010

- Mutations: Bit 1 and Bit 3 flip.
- Resulting chromosome: 00110.
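The bit flips listed above can be reproduced with a small helper. This sketch assumes bit positions are 1-based and counted from the left, and takes the four children (00001, 11111, 11001, 10010) as derived from the selected parents and the crossover point. The names `flip_bits` and `mutate` are hypothetical, and `mutate` shows the random variant in which each bit flips independently with probability `p_mut`.

```python
import random

def flip_bits(chrom, positions):
    """Flip the bits at the given 1-based positions, counted from the left."""
    bits = list(chrom)
    for p in positions:
        bits[p - 1] = "1" if bits[p - 1] == "0" else "0"
    return "".join(bits)

def mutate(chrom, p_mut, rng):
    """Flip each bit independently with probability p_mut."""
    hits = [i + 1 for i in range(len(chrom)) if rng.random() < p_mut]
    return flip_bits(chrom, hits)

print(flip_bits("00001", [1, 4]))  # 10011
print(flip_bits("11111", [3]))     # 11011
print(flip_bits("11001", [1, 5]))  # 01000
print(flip_bits("10010", [1, 3]))  # 00110
```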

1.2.5 Insertion

| Chromosome | $x$ | $f(x)$ |
|---|---|---|
| 10011 | 19 | 361 |
| 11011 | 27 | 729 |
| 01000 | 8 | 64 |
| 00110 | 6 | 36 |

1.3 Generation 2

1.3.1 Evaluation

Calculate the fitness values:

| Chromosome | $x$ | $f(x)$ |
|---|---|---|
| 10011 | 19 | 361 |
| 11011 | 27 | 729 |
| 01000 | 8 | 64 |
| 00110 | 6 | 36 |

1.3.2 Selection

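Total fitness: $361 + 729 + 64 + 36 = 1190$

Compute selection probabilities:

| Chromosome | Selection Probability | Approx. Value |
|---|---|---|
| 10011 | $361/1190$ | $0.3034$ |
| 11011 | $729/1190$ | $0.6126$ |
| 01000 | $64/1190$ | $0.0538$ |
| 00110 | $36/1190$ | $0.0303$ |

Cumulative probabilities:

| Chromosome | Cumulative Probability |
|---|---|
| 10011 | $0.3034$ |
| 11011 | $0.9160$ |
| 01000 | $0.9697$ |
| 00110 | $1.0000$ |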

Random numbers for selection:

Selected parents:

- Pair 1: 10011 and 11011

- Pair 2: 11011 and 01000

1.3.3 Crossover

Crossover probability:

Pair 1: Crossover occurs at position 2.

- Parent 1: 10|011
- Parent 2: 11|011
- Children: 10011, 11011

Pair 2: Crossover occurs at position 4.

- Parent 1: 1101|1
- Parent 2: 0100|0

- Children: ,

1.3.4 Mutation

Mutation probability:

- Child 1: No mutations. Result: 10011.
- Child 2: Bit 1 flips. Result: 01011.
- Child 3: Bit 5 flips.
- Child 4: No mutations.

1.3.5 Insertion

| Chromosome | $x$ | $f(x)$ |
|---|---|---|
| 10011 | 19 | 361 |
| 01011 | 11 | 121 |
| 11010 | 26 | 676 |
| 11011 | 27 | 729 |

1.4 Conclusion

After 2 iterations, the best solution we find is $x = 27$ with $f(x) = 729$.

Due to space limitations, we will not perform additional loops. In the following example, we show how to use code to perform multiple iterations on a more complex problem and how to specify stopping conditions.

2. Complex Example

We aim to maximize the function:

subject to the constraints:

2.1 Encoding the Variables

Each chromosome represents a pair of decision variables. We encode each variable as a binary string over its constrained range at a fixed precision:

- First variable: requires 8 bits.
- Second variable: requires 8 bits.

Each chromosome is a 16-bit binary string, where the first 8 bits represent the first variable and the next 8 bits represent the second.

2.2 Initialization

We randomly generate an initial population of 6 chromosomes. Each chromosome is decoded to the values of its two variables using the formula:

Initial Population

| Chromosome (Binary) | First 8 Bits | Last 8 Bits | First Variable (Decimal) | Second Variable (Decimal) |
|---|---|---|---|---|
| 10101100 11010101 | 10101100 | 11010101 | | |
| 01110011 00101110 | 01110011 | 00101110 | | |
| 11100001 01011100 | 11100001 | 01011100 | | |
| 00011010 10110011 | 00011010 | 10110011 | | |
| 11001100 01100110 | 11001100 | 01100110 | | |
| 00110111 10001001 | 00110111 | 10001001 | | |
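A common way to decode a fixed-length bit segment into a real value is a linear map from the integer range $[0, 2^n - 1]$ onto the variable's bounds. The sketch below assumes that mapping and is not necessarily the exact formula used in this example. The function names are hypothetical, and the bounds passed in the usage lines are placeholders chosen only to make the arithmetic easy to check, not the constraints of this problem.

```python
def decode_real(bits, lower, upper):
    """Map an n-bit string linearly onto the interval [lower, upper]."""
    return lower + int(bits, 2) * (upper - lower) / (2 ** len(bits) - 1)

def decode_chromosome(chrom, bounds):
    """Split a chromosome into equal segments and decode each one."""
    chrom = chrom.replace(" ", "")
    n = len(chrom) // len(bounds)
    return [decode_real(chrom[i * n:(i + 1) * n], lo, hi)
            for i, (lo, hi) in enumerate(bounds)]

# Placeholder bounds (0, 255) make each decoded value equal the raw integer.
print(decode_chromosome("10101100 11010101", [(0, 255), (0, 255)]))
# Placeholder bounds (0, 1) show the two ends of the range.
print(decode_real("00000000", 0.0, 1.0), decode_real("11111111", 0.0, 1.0))
```

With 8 bits per variable, the resolution of this mapping is `(upper - lower) / 255`, which is how the required number of bits follows from the chosen precision.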

2.3 Evaluation

Calculate the fitness for each chromosome using the given function. Below are the computed fitness values:

| Chromosome (Binary) | First Variable | Second Variable | Fitness |
|---|---|---|---|
| 10101100 11010101 | | | |
| 01110011 00101110 | | | |
| 11100001 01011100 | | | |
| 00011010 10110011 | | | |
| 11001100 01100110 | | | |
| 00110111 10001001 | | | |

2.4 Selection

Use roulette wheel selection to choose parents for crossover.

Total fitness:

Selection probabilities are calculated as:

| Chromosome | Fitness | Selection Probability |
|---|---|---|
| 10101100 11010101 | | |
| 01110011 00101110 | | |
| 11100001 01011100 | | |
| 00011010 10110011 | | |
| 11001100 01100110 | | |
| 00110111 10001001 | | |

Selected pairs for crossover:

- Pair 1: Chromosome 3 and Chromosome 5

- Pair 2: Chromosome 2 and Chromosome 6

- Pair 3: Chromosome 1 and Chromosome 4

2.5 Crossover

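Perform single-point crossover at position 8 (the boundary between the two 8-bit variable segments).

Pair 1:

- Parent 1: 11100001 | 01011100
- Parent 2: 11001100 | 01100110
- Child 1: 11100001 01100110
- Child 2: 11001100 01011100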

Repeat for other pairs.

2.6 Mutation

Apply mutation with a small probability (e.g., 1% per bit). Suppose a mutation occurs in Child 1 at bit position 5 of the first 8 bits, so that 11100001 becomes 11101001.

The new child becomes: 11101001 01100110
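The crossover for all three selected pairs and the single mutation described above can be reproduced as follows. This is a sketch that assumes the cut falls after bit 8 and that bit positions are 1-based from the left; the function names are hypothetical.

```python
def crossover(p1, p2, point):
    """Single-point crossover: exchange everything after `point` bits."""
    return p1[:point] + p2[point:], p2[:point] + p1[point:]

def flip(chrom, position):
    """Flip the bit at a 1-based position counted from the left."""
    i = position - 1
    return chrom[:i] + ("1" if chrom[i] == "0" else "0") + chrom[i + 1:]

population = [
    "1010110011010101",  # Chromosome 1
    "0111001100101110",  # Chromosome 2
    "1110000101011100",  # Chromosome 3
    "0001101010110011",  # Chromosome 4
    "1100110001100110",  # Chromosome 5
    "0011011110001001",  # Chromosome 6
]
pairs = [(3, 5), (2, 6), (1, 4)]  # selected pairs, 1-based chromosome numbers

children = []
for a, b in pairs:
    children.extend(crossover(population[a - 1], population[b - 1], 8))

children[0] = flip(children[0], 5)  # the mutation in Child 1

for child in children:
    print(child[:8], child[8:])
```

Because the cut sits exactly on the boundary between the two segments, each child inherits one whole variable from each parent, so this crossover recombines existing variable values and only mutation creates new ones.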

2.7 Insertion

Evaluate the fitness of offspring and combine with the current population. Select the top 6 chromosomes to form the next generation.

| Chromosome (Binary) | First Variable | Second Variable | Fitness |
|---|---|---|---|
| 11101001 01100110 | | | |
| 11100001 01011100 | | | |
| 11001100 01100110 | | | |
| 10101100 11010101 | | | |
| 01110011 00101110 | | | |
| 00110111 10001001 | | | |
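The insertion rule used in this example, which pools parents and offspring and keeps the fittest, can be sketched as below. The function name and the stand-in fitness function (counting ones) are assumptions for illustration only and are not the objective of this example.

```python
def next_generation(population, offspring, fitness, size):
    """Pool parents and offspring, then keep the `size` fittest chromosomes."""
    combined = population + offspring
    return sorted(combined, key=fitness, reverse=True)[:size]

def count_ones(chrom):
    """Stand-in fitness for illustration: number of 1 bits."""
    return chrom.count("1")

print(next_generation(["000", "111"], ["101", "001"], count_ones, 2))  # ['111', '101']
```

Keeping the best parents in the pool means the best fitness found so far never decreases from one generation to the next, at the cost of some diversity.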

2.8 Repeat Steps 2.3-2.7

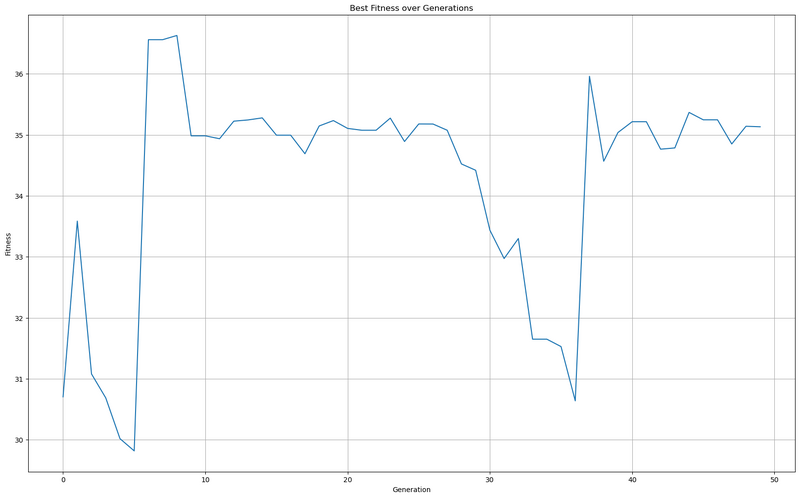

Using the code (https://github.com/AcidHCL/CornellWiki) to perform the repeating process for 50 more generations we got

Fig.2. 50 iteration results

Based on Fig. 2 we can find the optimal value to be 35.2769.

Application

GA is one of the most important and successful algorithms in optimization, as demonstrated by its numerous applications in machine learning, engineering, finance, and other domains. The following subsections introduce applications of GA in unsupervised regression, virtual power plants, and forecasting financial market indices.

Unsupervised Regression

Unsupervised regression is a promising dimensionality reduction method[6]. Its concept is to map from a low-dimensional space into the high-dimensional data space by using a regression model[7], with the goal of minimizing the data space reconstruction error[6]. Common methods for this purpose are nearest neighbor regression and kernel regression[6]. Since the mapping from the low-dimensional space into the high-dimensional space is usually complex, the data space reconstruction error may become a non-convex, multimodal function with multiple local optima. In this case, GA can help escape local optima through its population-based search combined with selection, crossover, and mutation.

Virtual Power Plants

The renewable energy resources, such as wind power and solar power, have a distinct fluctuating character[6]. To address the inconvenience of such fluctuations on the power grid, engineers have introduced the concept of virtual power plants, which bundles various different power sources into a single unit that meets specific properties[6].

The optimization goal is to minimize the absolute value of power in the virtual power plants system with a rule base[6]. This rule base is also known as the learning classifier system, which allows energy storage devices and standby power plants in the system to respond flexibly in different system states and achieve a balance between power consumption and generation[6].

Since the rule base is complex and highly uncertain, GA can be used to evolve the action part of the rule base. For example, in a complex energy scheduling process, GA can optimize the charge/discharge strategy of the energy storage equipment and the start/stop plan of the standby power plants to keep power consumption and generation in balance.

Forecasting Financial Market Indices

A financial market index consists of a weighted average of the prices of the individual shares that make up the market[8]. In financial markets, many traders and analysts believe that stock prices move in trends and repeat price patterns[8]. Under this premise, Grammatical Evolution (GE) is a reasonable choice for forecasting financial market indices and supporting trading decisions.

GE is a machine learning method based on GA[8]. GE uses a biologically inspired genotype-phenotype mapping process to evolve computer programs in any language[8]. Unlike GA, which encodes the solution directly within the genetic material, GE includes a many-to-one mapping process, which contributes to its robustness[8].

When using GE to forecast financial market indices, processed historical stock price data is supplied as input. GE learns price patterns in this data and generates models that can predict future price movements. These models can help traders and analysts identify trends in the financial market, such as upward and downward trends.
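The genotype-phenotype mapping in GE can be illustrated with a toy grammar. The sketch below is a simplified version of the usual codon-modulo rule and is not taken from the cited work: the grammar, the terminal names `price` and `ma5`, the function name, and the wrap limit are all hypothetical.

```python
GRAMMAR = {
    "<expr>": [["<expr>", "<op>", "<expr>"], ["<var>"]],
    "<op>": [["+"], ["-"], ["*"]],
    "<var>": [["price"], ["ma5"]],
}

def map_genotype(codons, start="<expr>", max_wraps=2):
    """Expand the leftmost non-terminal using codon % (number of rules)."""
    symbols, output, used = [start], [], 0
    limit = len(codons) * (max_wraps + 1)  # codons may be reused when wrapping
    while symbols:
        symbol = symbols.pop(0)
        if symbol not in GRAMMAR:  # terminal: emit it
            output.append(symbol)
            continue
        if used >= limit:          # mapping did not terminate: invalid individual
            return None
        rules = GRAMMAR[symbol]
        choice = rules[codons[used % len(codons)] % len(rules)]
        used += 1
        symbols = list(choice) + symbols
    return " ".join(output)

print(map_genotype([0, 1, 1, 2, 1, 0]))         # ma5 * price
print(map_genotype([1, 0]), map_genotype([3, 2]))  # price price
```

The last line shows the many-to-one property: the genotypes `[1, 0]` and `[3, 2]` differ, yet the modulo rule maps both to the same phenotype.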

Software tools and platforms that utilize Genetic Algorithms

MATLAB: The Global Optimization Toolbox of MATLAB is widely used for engineering simulations and machine learning.

Python: The DEAP and PyGAD libraries in Python provide an environment for research and AI model optimization.

OpenGA: OpenGA is a free, open-source C++ GA library. It provides developers with a flexible way to implement GA for solving a variety of optimization problems[9].

Conclusion

The GA is a versatile optimization tool inspired by evolutionary principles, excelling in solving complex and non-linear problems across diverse fields. Its applications, ranging from energy management to financial forecasting, highlight its adaptability and effectiveness. As computational capabilities advance, GA is poised to solve increasingly sophisticated challenges, reinforcing its relevance in both research and practical domains.

References

1. Lambora, A., Gupta, K., & Chopra, K. (2019). Genetic Algorithm - A Literature Review. 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, pp. 380–384.
2. Holland, J. H. (1973). Genetic algorithms and the optimal allocation of trials. SIAM Journal on Computing, 2(2), 88–105.
3. Mirjalili, S. (2018). Genetic Algorithm. Evolutionary Algorithms and Neural Networks, Springer, pp. 43–56.
4. Katoch, S., Chauhan, S. S., & Kumar, V. (2021). A review on genetic algorithm: past, present, and future. Multimed Tools Appl 80, 8091–8126.
5. Lim, S. M., Sultan, A. B. M., Sulaiman, M. N., Mustapha, A., & Leong, K. Y. (2017). Crossover and mutation operators of genetic algorithms. International Journal of Machine Learning and Computing, 7(1), 9–12.
6. Kramer, O. (2017). Studies in Computational Intelligence 679 Genetic Algorithm Essentials.
7. Kramer, O. (2016). Dimensionality reduction with unsupervised nearest neighbors.
8. Chen, S.-H. (2012). Genetic Algorithms and Genetic Programming in Computational Finance. Springer Science & Business Media.
9. Arash-codedev. (2019, September 18). Arash-codedev/openGA. GitHub. https://github.com/Arash-codedev/openGA
