Logarithmic transformation

This web page is a duplicate of https://optimization.mccormick.northwestern.edu/index.php/Logarithmic_transformation

Author: Hassan Ali (ChE 345 Spring 2015)

Steward: Dajun Yue, Fengqi You

Logarithmic transformation is a method used to change geometric programs into their convex forms. A geometric program, or GP, is a type of global optimization problem that concerns minimizing a subject to constraint functions so as to allow one to solve unique non-linear programming problems. All geometric programs contain functions called posynomials that are inherently non-convex. Due to this fact, solving geometric problems can be computationally intensive and finding a global optimum solution is not guaranteed. However, by creating a logarithmic transformation for a problem, one can solve for the globally optimum solution quicker and easier. A logarithmic transformation is not the only transformation which allows. One can also use an exponential transformation to obtain the same result. A logarithmic transformation can also be used on signomial programs, which are an extension of geometric programs.1,2,3

Background

Geometric Programming

Geometric programming was first discussed in the early 1960's as a class of optimization problem that could be solved with geometric inequalities. Duffin and his colleagues went on to formulate basic theories of geometric programming and its applications in 1967 in their seminal textbook Geometric Programming Theory and Application.4 One of their insights into geometric programming was noticing that problems with highly non-linear constraints could be stated equivalently with a dual program. In other words, a global minimizing solution could be found by solving its corresponding dual maximization problem. This is because the dual constraints are linear. All one would need to do is change the geometric programming problem from its standard posynomial form into this "dual form" and solve using these now linear constraints.3 The answer could only be locally optimum as one was still dealing with non-linear programming (NLP) methods.

A standard GP problem takes the following form

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_0,...,f_m} are posynomials and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h_1,...,h_M} are monomials, and the variables x are all positive.

Posynomials

Posynomials are functions that are non-linear and consist of a set of constants multiplied to a series of multiple variables multiplied together. Often these variables are raised to various powers. More specifically, they are functions of the form:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_1,x_2,...,x_n)=\sum_{k=1}^K c_kx_1^{a_{1k}}...x_n^{a_{nk}} }

where the variables Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_i } and the coefficients Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c_k } are positive, real numbers, and all of the exponents Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a_{ik} } are real numbers.4

Monomials are a specific case of posynomial where there is no set of terms but rather only one term and its constant. A posynomial is then simply a sum of monomials. An example of a monomial is

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_1,x_2)=400x_1^{3}x_2^{-2} }

and an example of a posynomial is

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_1,x_2)=4x_1^{2}x_2^{4}+x_1^{3}x_2^{5} }

A posynomial is therefore not of the form

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_1,x_2)=x_1-x_2 }

as this is a linear function.

Geometric Programming Derivation

GP problems are not always given and sometimes must be derived.5 Take the following example of a standard NLP problem

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \max ~x/y}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~2\le x\le 3}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ~x^2 + 3y/z\le \sqrt{y} }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ~x/y = z^2 }

where the variables x,y, and z are positive. The standard GP form of this problem would be

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \max ~x^{-y}}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~2x^-1\le 1, (1/3)x\le 1 }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ~x^{2}y^{-0.5} + 3y^{0.5}z^{-1}\le 1 }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ~xy^{-1}z^{-2} = 1 }

A standard GP problem then minimizes a posynomial function while constraining it to posynomial inequality constraints and monomial equality constraints. Posynomials are positive functions and contain log convexity. This is an important facet as it allows standard GP problems to undergo log transformations.1

Logarithmic Transformation

The Duffin "dual-program" method for solving GP problems is still in use but as logarithmic and exponential transformation methods were understood it became easier to simply use them to change the standard GP problem into a convex GP problem and solve using an interior point method. Interior point methods can solve GP problems very fast and robust as they require essentially no tuning of the parameters. Most importantly, the final solution obtained through this method is guaranteed to be the global optimum solution. Solving a standard GP problem is like solving an NLP problem. The only difference being that a GP problem is more constrained in its use of posynomials and monomials in its objective function and constraints. This makes standard GP problems more efficient to solve and they can be solved relatively quickly, but like NLP problems there is no guarantee the solution is globally optimum. In addition, an initial guess needs to be provided and parameters must be carefully chosen.5

Logarithmic transformations are therefore very helpful as they transform the standard GP form into its convex form based on a logarithmic change of variables and a logarithmic transformation of the objective and constraint functions. So instead of minimizing the objective Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_0} , the logarithm of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_0} is minimized. The variable x is replaced with the its logarithm Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y = \log x} . So now Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x = e^y} . The inequality constraint is now Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \log f_i \le 0} instead of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_i \le 0} and the equality constraint is now Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \log h_j=0} instead of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h_j=0} .5

Therefore the convex form of a GP problem is

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~\log f_0(e^y)}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ \log f_i(e^y) \le 0, i = 1,...,m }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \log h_j(e^y)=0, j=1,...,M}

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_0,...,f_m} are posynomials and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle h_1,...,h_M} are monomials, and the variables y are all positive. This reformulation can occur as long as the constants in the function are positive.

Methods

To see the transformation clearer, more detailed steps can be shown. If the objective function is a monomial of the format

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_0(x) = cx_1^{a_1}x_2^{a_2}...x_n^{a_n}}

then the new objective function will be a function of a new variable y where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y = \log x} and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x = e^y} . It will also be a logarithm of the original objective function so

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(y) = \log f_0(e^y)}

where

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \log f_0(e^y) = \log c +a_1\log x_1 +...+ a_n\log x_n = \log c +a_1y_1 +...+ a_ny_n}

which is an affine function of y

If the above objective function was instead a monomial equality constraint such that

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g(x) = cx_1^{a_1}x_2^{a_2}...x_n^{a_n}=1}

then the new equality constraint would be equal to zero and be simplified to a linear equation of the form

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\log c = a_1y_1 +...+ a_ny_n}

Any GP with only monomials reduces to a linear program after a logarithmic transformation. Checking for linearity in a monomial case will confirm if the transformation was done correctly.5

If the GP has posynomials the problem becomes more complex. The log of multiple terms cannot be simplified beyond its logarithm form. So if the objective function is

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f_0(x) = \sum_{n=1}^N c_nx_n^{a_n}}

where c > 0, then the new objective function will be

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(y) = \log f_0(e^y)}

where

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \log f_0(e^y) = \log \left ( \sum_{n=1}^N e^{a_ny_n+b_n} \right ) }

and where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle b_n = \log c_n } for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle n = 1,...,N} . The above can be written in a simpler form as

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \log f_0(e^y) = lse(Ay+b)}

where A is an N by n matrix with rows Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle a_1,...,a_N} and lse is the log-sum-exp function which is convex.6

The reason the logarithmic transformation is done when an exponential transformation can be done in less steps is that the exponential function might take in large values. This may create numerical problems as well as lead to complications in optimization programs. Taking a logarithm allows for the recovery of smaller values, which gives the logarithmic transformation a distinct advantage.6

Examples

A few objective functions and constraints are provided in this section as illustrative examples.

EXAMPLE 1

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~f_0(x_1,x_2)=4x_1^{2}x_2^{4}}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ x_1 \ge 0 }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_2 \ge 0 }

After a logarithmic transformation, this GP becomes:

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~f(y_1, y_2)= \log 4 + 2y_1 + 4y_2 }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ y_1 \ge 0 }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle y_2 \ge 0 }

EXAMPLE 2

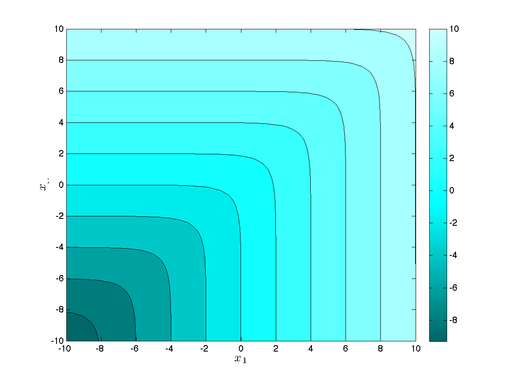

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~f_0(x_1,x_2)=x_1^{2}x_2 + x_1x_2}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ x_1^{2}x_2^{-2} \ge 1 }

After a logarithmic transformation, this GP becomes:

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~f(y_1, y_2)= \log \left ( \sum_{n=1}^2 e^{a_ny_n} \right ) }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ 2y_1 - 2y_2 \ge 0 }

EXAMPLE 3

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~f_0(x_1,x_2)=16x_1^{2}x_2^{5}}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ \frac{x_1}{x_2} = 1 }

After a logarithmic transformation, this GP becomes:

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~f(y_1, y_2)= \log 16 + 2y_1 + 5y_2 }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~ y_1=y_2 }

To prove that the answers to the above examples are indeed convex, one could verify its convexity through a positive-definiteness test of the Hessian. The reformulations can also be tested in GAMS.

Feasibility Analysis

A standard GP problem can be infeasible i.e. the constraints are too "tight" and don't allow for a solution. Because the constraints from a standard GP problem hold over even when changed to its convex form via logarithmic transformation, an infeasible GP problem will always be infeasible regardless of convexity. This is why it is important to check for infeasibility prior to creating a linear transformation. This is done by undergoing a feasibility analysis and setting up the GP as follows:

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min ~s }

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s.t. ~~f_i(x) \le s, i = 1,...,m}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle g_i(x)=1, i=1,...,p}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle s \ge 1}

As s nears a value of 1, the original problem nears feasibility. The final goal is to find a solution such that s=1 so that feasibility of the problem is confirmed.1

Applications

Applications of geometric programming include:

- Electrical Engineering5

- Power Control

- Wire Sizing

- Routing

- Optimal doping profile in semiconductor device engineering

- Digital circuit gate sizing

- Chemical Engineering5

- Optimal reactor design

- Mass Transfer Optimization

- Kinetics

- Maximizing reliability of reactors

- Other

- Transportation6

- Economic Models

- Inventory Models

A great example among these varied applications concerns optimal reactor design. The chemical systems within a reactor follow non convex kinetics equations. If one wanted to optimize a reactor for a given system with given reaction rates, one could do so easily with a logarithmic transformation. Take a reaction A + B to C with reaction rate Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle r = kC_AC_B}

. The logarithmic transformation that would allow one to obtain a convex relation is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle r^*=\log k + c_A +c_B}

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c = \log C}

. With this new equation, you can optimize your reactor design for your given system.

Conclusion

Geometric Programming is an application that can solve a varying degree of difficult optimization problems but due to the nature of their complexity, these problems cannot be solved easily. Solving geometric problems can be computationally intensive and finding a global optimum solution is not guaranteed. This is due to the fact that GPs involve posynomials and monomials which are by nature non-linear. However, by creating a logarithmic transformation for a problem, one can solve for the globally optimum solution quicker and easier because the GP is changed to its convex form. Logarithmic transformations offer an advantage over other transformations as it allows one to work with smaller values which are less problematic. Logarithmic transformations can be used wherever geometric programming is used which includes applications in chemical engineering and economics.

References

- Chiang, M. (2005). Geometric Programming for Communication Systems, Publishers, Inc., ISBN 1-933019-09-3.

- Duffin, R.J. (1970). "Linearizing Geometric Programs", SIAM Review 12 (2).

- Biswal, K.K., Ohja, A.K. (2010). "Posynomial Geometric Programming Problems with Multiple Parameters", Journal of Computing 2 (1).

- Duffin, R.J., Peterson, E.L., Zener, C.M. (1967). Geometric Programming Theory and Application, John Wiley & Sons Inc., ISBN 978-0471223702.

- Boyd, S., Hassibi, A., Kim, S.J., Vandenberghe, L. (2007). "A Tutorial on Geometric Programming", Optim Eng 8: 67-127.

- Calafiore, G.C., El Ghaoui, L. (2014). Optimization Models and Applications, Cambridge University Press., ISBN 978-1107050877.