Line search methods: Difference between revisions

m (→Inexact Search) |

|||

| (245 intermediate revisions by 5 users not shown) | |||

| Line 3: | Line 3: | ||

==Introduction== | ==Introduction== | ||

Line search method is an iterative approach to find a local minimum of a multidimensional nonlinear function using the function's gradients. It computes a search direction and then finds an acceptable step length that satisfies certain standard conditions. <ref name="nocedal">J. Nocedal and S. Wright, ''Numerical Optimization'', Springer Science, 1999, p. 30-44. </ref> Line search method can be categorized into exact and inexact methods. The exact method, as in the name, aims to find the exact minimizer at each iteration; while the inexact method computes step lengths to satisfy conditions including Wolfe and Goldstein conditions. | |||

Line search and [https://optimization.cbe.cornell.edu/index.php?title=Trust-region_methods trust-region] methods are two fundamental strategies for locating the new iterate given the current point. | |||

With the ability to solve the unconstrained optimization problem, line search is widely used in many cases including machine learning, game theory and other fields. | |||

==Generic Line Search Method== | ==Generic Line Search Method== | ||

===Basic Algorithm=== | ===Basic Algorithm=== | ||

*Pick | *Pick the starting point <math>x_0</math> | ||

*Repeat the following steps until <math>x_k</math> | *Repeat the following steps until <math>f_k:=f(x_k)</math> coverges to a local minimum : | ||

**Choose a descent direction <math>p_k</math> starting at <math>x_k</math>, defined as | **Choose a descent direction <math>p_k</math> starting at <math>x_k</math>, defined as: <math>\nabla f_{k}^\top p_{k}<0</math> for <math>\nabla f_k \not =0</math> | ||

** | **Find a step length <math>\alpha_k>0</math> so that <math>f(x_k+\alpha_kp_k)<f_k</math> | ||

** Set <math>x_{k+1}=x_k+\alpha_k p_k</math> | **Set <math>x_{k+1}=x_k+\alpha_k p_k</math> | ||

===Search Direction for Line Search=== | ===Search Direction for Line Search=== | ||

The direction of the line search should be chosen to make <math>f</math> decrease moving from point <math>x_k</math> to <math>x_{k+1}</math>. The most obvious direction is the <math>- \nabla f_k</math> because it is the one to make <math>f</math> decreases most rapidly. | The direction of the line search should be chosen to make <math>f</math> decrease moving from point <math>x_k</math> to <math>x_{k+1}</math>, and it is usually related to the gradient <math>\nabla f_k</math>. The most obvious direction is the <math>- \nabla f_k</math> because it is the one to make <math>f</math> decreases most rapidly. This claim can be verified by Taylor's theorem: | ||

<math>f(x_k+\alpha)=f(x_k)+\alpha p^\top\nabla f_k+\frac{1}{2}\alpha^2p^ | <math>f(x_k+\alpha)=f(x_k)+\alpha p^\top\nabla f_k+\frac{1}{2}\alpha^2p^\top f(x_k+tp)p</math>, where <math> t\in (0, \alpha) </math>. | ||

The rate of change in <math>f</math> along the direction <math>p</math> at <math>x_k</math> is the coefficient of <math>\alpha</math>. Therefore, the unit direction <math>p</math> of most rapid decrease is the solution to | The rate of change in <math>f</math> along the direction <math>p</math> at <math>x_k</math> is the coefficient of <math>\alpha</math>. Therefore, the unit direction <math>p</math> of most rapid decrease is the solution to | ||

| Line 25: | Line 27: | ||

<math>\text{s.t.}\ \ ||p||=1</math>. | <math>\text{s.t.}\ \ ||p||=1</math>. | ||

<math>p=\frac{-\nabla f_k}{||\nabla f_k||}</math> is the solution and this direction is orthogonal to the contours of the function. In the following sections, | <math>p=\frac{-\nabla f_k}{||\nabla f_k||}</math> is the solution and this direction is orthogonal to the contours of the function. In the following sections, this will be used as the default direction of the line search. | ||

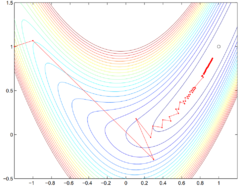

However, the steepest descent direction is not the most efficient, as the steepest descent method does not pass the Rosenbrock test (see '''Figure 1'''). <ref> "[https://pi.math.cornell.edu/~web6140/TopTenAlgorithms/RosenbrockFunction.html Rosenbrock Function]," Cornell University. </ref> Carefully designed descent directions deviating from the steepest direction can be used in practice to produce faster convergence. <ref> R. Fletcher and M. Powell, "[http://galton.uchicago.edu/~lekheng/courses/302/classics/fletcher-powell.pdf A Rapidly Convergent Descent Method for Minimization]," ''The Computer Journal'', vol. 6, no. 2, pp. 163–168, 1963.</ref> | |||

===Step Length=== | ===Step Length=== | ||

The step length is a non-negative value such that <math>f(x_k+\alpha_k p_k)< | The step length is a non-negative value such that <math>f(x_k+\alpha_k p_k)<f(x_k)</math>. When choosing the step length <math>\alpha_k</math>, there is a trade off between giving a substantial reduction of <math>f</math> and not spending too much time finding the solution. If <math>\alpha_k</math> is too large, then the step will overshoot, while if the step length is too small, it is time consuming to find the convergent point. An exact line search and inexact line search are needed to find the value of <math>\alpha</math> and more detail about these approaches will be introduced in the next section. | ||

===Convergence=== | ===Convergence=== | ||

| Line 34: | Line 38: | ||

<math>\lim_{k\to\infty} ||\nabla f(x_{k})|| = 0</math>. | <math>\lim_{k\to\infty} ||\nabla f(x_{k})|| = 0</math>. | ||

It can be shown from '''Zoutendijk's theorem''' <ref | It can be shown from '''Zoutendijk's theorem''' <ref name="nocedal" /> that if the line search algorithm satisfies (weak) Wolfe's conditions (similar results also hold for strong Wolfe and Goldstein conditions) and has a search direction that makes an angle with the steepest descent direction that is bounded away from 90°, the algorithm is globally convergent. | ||

'''Zoutendijk's theorem''' states that, given an iteration where <math>p_k</math> is the descent direction and <math>\alpha_k</math> is the step length that satisfies (weak) Wolfe conditions, if the objective <math>f</math> is bounded below on <math>\mathbb{R}^{n}</math> and is continuously differentiable in an open set <math>\mathcal{N}</math> containing the level set <math>\mathcal{L}:=\{x\ |\ f(x)\leq f(x_0)\}</math>, where <math>x_0</math> is the starting point of the iteration, and the gradient <math>\nabla f</math> is Lipschitz continuous on <math>\mathcal{N}</math>, then | '''Zoutendijk's theorem''' states that, given an iteration where <math>p_k</math> is the descent direction and <math>\alpha_k</math> is the step length that satisfies (weak) Wolfe conditions, if the objective <math>f</math> is bounded below on <math>\mathbb{R}^{n}</math> and is continuously differentiable in an open set <math>\mathcal{N}</math> containing the level set <math>\mathcal{L}:=\{x\ |\ f(x)\leq f(x_0)\}</math>, where <math>x_0</math> is the starting point of the iteration, and the gradient <math>\nabla f</math> is Lipschitz continuous on <math>\mathcal{N}</math>, then | ||

| Line 46: | Line 50: | ||

<math>\lim_{k\to\infty}\cos^{2}\theta_{k}||\nabla f_{k}||^2=0</math>, | <math>\lim_{k\to\infty}\cos^{2}\theta_{k}||\nabla f_{k}||^2=0</math>, | ||

by the n-th term divergence test. Hence, if the algorithm chooses a search direction that is bounded away from | by the n-th term divergence test. Hence, if the algorithm chooses a search direction that is bounded away from 90° relative to the gradient, i.e., given <math>\epsilon>0</math>, | ||

<math>\cos\theta_{k}\geq\epsilon>0,\ \forall k</math>, | <math>\cos\theta_{k}\geq\epsilon>0,\ \forall k</math>, | ||

| Line 54: | Line 58: | ||

<math>\lim_{k\to\infty}||\nabla f_{k}||=0</math>. | <math>\lim_{k\to\infty}||\nabla f_{k}||=0</math>. | ||

However, the ''Zoutendijk condition'' doesn't guarantee convergence to a local minimum but only stationary points. Hence, additional conditions on the search direction is necessary, such as finding a direction of negative curvature, to | However, the ''Zoutendijk condition'' doesn't guarantee convergence to a local minimum but only stationary points. Hence, additional conditions on the search direction is necessary, such as finding a direction of negative curvature whenever possible, to avoid converging to a nonminimizing stationary point. | ||

==Exact Search== | ==Exact Search== | ||

===Steepest Descent Method=== | ===Steepest Descent Method=== | ||

Given the intuition that the negative gradient <math>- \nabla f_k</math> can be an effective search direction, steepest descent follows the idea and establishes a systematic method for minimizing the objective function. Setting <math>- \nabla f_k</math> as the direction, steepest descent computes the step-length <math>\alpha_k</math> by minimizing a single-variable objective function. More specifically, the steps of Steepest Descent Method are as follows | Given the intuition that the negative gradient <math>- \nabla f_k</math> can be an effective search direction, steepest descent follows the idea and establishes a systematic method for minimizing the objective function. Setting <math>- \nabla f_k</math> as the direction, steepest descent computes the step-length <math>\alpha_k</math> by minimizing a single-variable objective function. More specifically, the steps of Steepest Descent Method are as follows: | ||

'''''Steepest Descent Algorithm'''''<blockquote>Set a starting point <math>x_0</math> | '''''Steepest Descent Algorithm'''''<blockquote>Set a starting point <math>x_0</math> | ||

| Line 77: | Line 81: | ||

::'''Break''' | ::'''Break''' | ||

:'''End''' | :'''End if''' | ||

<math>\alpha_k=\underset{\alpha}{\arg\min} f(x_k-\alpha \nabla f(x_k))</math> | <math>\alpha_k=\underset{\alpha}{\arg\min} f(x_k-\alpha \nabla f(x_k))</math> | ||

| Line 85: | Line 89: | ||

<math>k = k + 1</math> | <math>k = k + 1</math> | ||

'''End''' | '''End while''' | ||

'''Return''' <math>x_{k}</math>, <math>f(x_{k})</math></blockquote> | '''Return''' <math>x_{k}</math>, <math>f(x_{k})</math></blockquote> | ||

[[File:Rosenbrock.png|thumb|upright=0.8|'''Figure 1.''' Steepest descent does not produce convergence on the Rosenbrock function. <ref name="hauser" />]] | |||

One advantage of the steepest descent method is the convergency. For a steepest descent method, it converges to a local minimum from any starting point. <ref name="hauser">R. Hauser, "[https://people.maths.ox.ac.uk/hauser/hauser_lecture2.pdf Line Search Methods for Unconstrained Optimization]," Oxford University Computing Laboratory, 2007. </ref> | |||

'''Theorem: Global Convergence of Steepest Descent''' | |||

Let the gradient of <math>f \in C^1</math> be uniformly Lipschitz continuous on <math>\mathbb{R}^{n}</math>. Then, for the iterates with steepest-descent search directions, one of the following situations occurs: | Let the gradient of <math>f \in C^1</math> be uniformly Lipschitz continuous on <math>\mathbb{R}^{n}</math>. Then, for the iterates with steepest-descent search directions, one of the following situations occurs: | ||

*<math>\nabla f(x_k) = 0</math> for some finite <math>k</math> | *<math>\nabla f(x_k) = 0</math> for some finite <math>k</math> | ||

*<math>\lim_{k \to \infty} f(x_k) = -\infty</math> | *<math>\lim_{k \to \infty} f(x_k) = -\infty</math> | ||

*<math>\lim_{k \to \infty} \nabla f(x_k) = 0</math> | *<math>\lim_{k \to \infty} \nabla f(x_k) = 0</math> | ||

Steepest descent method is a special case of gradient descent in that the step-length is | However, steepest descent has disadvantages in that the convergence is always slow and numerically may be not convergent (see '''Figure 1'''.) | ||

Steepest descent method is a special case of gradient descent in that the step-length is analytically defined. However, step-lengths cannot always be computed analytically; in this case, inexact methods can be used to optimize <math>\alpha</math> at each iteration. | |||

==Inexact Search== | ==Inexact Search== | ||

While minimizing an objective function using numeric methods, in each iteration, the updated objective is <math>\phi(\alpha) = f(x_k+\alpha p_k)</math>, which is a function of <math>\alpha</math> after fixing the search direction. The goal is to minimize this objective with respect to <math>\alpha</math>. However, if one wants to solve for the exact minimum in each iteration, it could be computationally expensive and the algorithm will be time-consuming. Therefore, in practice, it is easier solve the subproblem | |||

<math>\underset{\alpha}{min} \quad f(x_k + \alpha p_k)</math> | <math>\underset{\alpha}{min} \quad \phi(\alpha) = f(x_k + \alpha p_k)</math> | ||

numerically and find a reasonable step length <math>\alpha</math> instead, which will decrease the objective function. That is, <math>\alpha</math> satisfies <math>f(x_k + \alpha p_k) \leq f(x_k)</math>. However, convergence to the function's minimum cannot be guaranteed, so Wolfe or Goldstein conditions need to be applied when searching for an acceptable step length. <ref name="nocedal" /> | |||

===Wolfe Conditions=== | ===Wolfe Conditions=== | ||

This condition is proposed by Phillip Wolfe in 1969. It provide an efficient way of choosing a step length that decreases the objective function sufficiently. It consists of two conditions | This condition is proposed by Phillip Wolfe in 1969. <ref>P. Wolfe, "[https://epubs.siam.org/doi/abs/10.1137/1011036 Convergence Conditions for Ascent Methods]," ''SIAM Review'', vol. 11, no. 2, pp. 226–235, 1969.</ref> It provide an efficient way of choosing a step length that decreases the objective function sufficiently. It consists of two conditions: Armijo (sufficient decrease) condition and the curvature condition. | ||

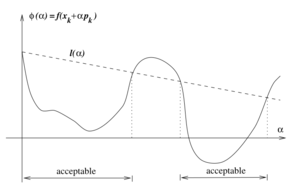

[[File:Armijo.png|thumb|upright=1|'''Figure 2.''' Armijo Condition. <ref name="nocedal" />]] | |||

====Armijo (Sufficient Decrease) Condition==== | |||

<math>f(x_k + \alpha p_k) \leq f(x_k) + c_1 \alpha_{k} p^\top_k \nabla{f(x_k)}</math>, | |||

where <math>c_1\in(0,1)</math> and is often chosen to be of a small order of magnitude around <math>10^{-3}</math>. This condition ensures the computed step length can sufficiently decrease the objective function <math>f(x_k)</math>. Only using this condition, however, it cannot be guaranteed that <math>x_k</math> will converge in a reasonable number of iterations, since Armijo condition is always satisfied with step length being small enough. Therefore, the second condition below needs to be paired with the sufficient decrease condition to keep <math>\alpha_k</math> from being too short. | |||

====Curvature Condition==== | |||

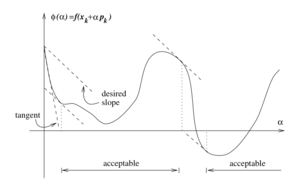

[[File:Curvature condition.png|thumb|upright=1|'''Figure 3.''' Curvature Condition. <ref name="nocedal" />]] | |||

<math>\nabla{f(x_k + \alpha p_k)}^\top p_k \geq c_2 \nabla{f(x_k)}^\top p_k</math>, | |||

where <math>c_2\in(c_1,1)</math> is much greater than <math>c_1</math> and is typically on the order of <math>0.1</math>. This condition ensures a sufficient increase of the gradient. | |||

<math> | This left hand side of the curvature condition is simply the derivative of <math>\phi(\alpha)</math>, thus ensuring <math>\alpha_k</math> to be in the vicinity of a stationary point of <math>\phi(\alpha)</math>. | ||

==== | ====Strong Wolfe Curvature Condition==== | ||

The (weak) Wolfe conditions can result in an <math>\alpha</math> value that is not close to the minimizer of <math>\phi(\alpha)</math>. The (weak) Wolfe conditions can be modified by using the following condition called Strong Wolfe condition, which writes the curvature condition in absolute values: | |||

<math>|p_k \nabla{f(x_k + \alpha p_k)| \leq c_2 |p^\top_k f(x_k)}|</math>. | |||

The strong Wolfe curvature condition restricts the slope of <math>\phi(\alpha)</math> from getting too positive, hence excluding points far away from the stationary point of <math>\phi</math>. | |||

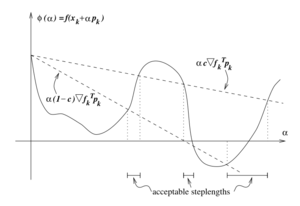

[[File:Goldstein.png|thumb|upright=1|'''Figure 4.''' Goldstein Conditions. <ref name="nocedal" />]] | |||

===Goldstein Conditions=== | ===Goldstein Conditions=== | ||

Another condition to find an appropriate step length is called Goldstein conditions | Another condition to find an appropriate step length is called Goldstein conditions: | ||

<math>f(x_k) + (1-c) \alpha_k \nabla{f^top_k} p_k \leq f(x_k + \alpha p_k) \leq f(x_k) + c \alpha_k \nabla{f^\top_k} p_k</math> | <math>f(x_k) + (1-c) \alpha_k \nabla{f^\top_k} p_k \leq f(x_k + \alpha p_k) \leq f(x_k) + c \alpha_k \nabla{f^\top_k} p_k</math> | ||

where <math>0 \leq c \leq 1/2</math>. The Goldstein condition is quite similar with the Wolfe condition in that, its second inequality ensures that the step length <math>\alpha</math> will decrease the objective function sufficiently and its first inequality keep <math>\alpha</math> from being too short. In comparison with Wolfe condition, one disadvantage of Goldstein condition is that the first inequality of the condition might exclude all minimizers of <math>\phi</math> function. However, usually it is not a fatal problem as long as the objective | where <math>0 \leq c \leq 1/2</math>. The Goldstein condition is quite similar with the Wolfe condition in that, its second inequality ensures that the step length <math>\alpha</math> will decrease the objective function sufficiently and its first inequality keep <math>\alpha</math> from being too short. In comparison with Wolfe condition, one disadvantage of Goldstein condition is that the first inequality of the condition might exclude all minimizers of <math>\phi</math> function. However, usually it is not a fatal problem as long as the objective decreases in the direction of convergence. As a short conclusion, the Goldstein and Wolfe | ||

conditions have quite similar convergence theories. Compared to the Wolfe conditions, the Goldstein conditions are often used in Newton-type methods but are not well suited for quasi-Newton methods that maintain a positive definite Hessian approximation. | conditions have quite similar convergence theories. Compared to the Wolfe conditions, the Goldstein conditions are often used in Newton-type methods but are not well-suited for quasi-Newton methods that maintain a positive definite Hessian approximation. | ||

===Backtracking=== | ===Backtracking Line Search=== | ||

The backtracking method is often used to find the appropriate step length and terminate line search. The backtracking method starts with a relatively large initial step length (e.g., 1 for Newton method), then iteratively shrinking it by a contraction factor until the Armijo (sufficient decrease) condition is satisfied. The advantage of this approach is that the curvature condition needs not be considered, and the step length found at each line search iterate is ensured to be short enough to satisfy sufficient decrease but large enough to still allow the algorithm to make reasonable progress towards convergence. | The backtracking method is often used to find the appropriate step length and terminate line search based. The backtracking method starts with a relatively large initial step length (e.g., 1 for Newton method), then iteratively shrinking it by a contraction factor until the Armijo (sufficient decrease) condition is satisfied. The advantage of this approach is that the curvature condition needs not be considered, and the step length found at each line search iterate is ensured to be short enough to satisfy sufficient decrease but large enough to still allow the algorithm to make reasonable progress towards convergence. | ||

The backtracking algorithm involves control parameters <math>\rho\in(0,1)</math> and <math>c\in(0,1)</math> | The backtracking algorithm involves control parameters <math>\rho\in(0,1)</math> and <math>c\in(0,1)</math>, and it is roughly as follows: | ||

:Choose <math>\alpha_0>0, \rho\in(0,1), c\in(0,1)</math> | |||

:Set <math>\alpha\leftarrow\alpha_0</math> | |||

:'''While''' <math>f(x_{k}+\alpha p_{k}) > f(x_{k})+c\alpha\nabla f_{k}^{\top}p_{k}</math> | |||

::<math>\alpha\leftarrow\rho\alpha</math> | |||

:'''End while''' | |||

:'''Return''' <math>\alpha_k=\alpha</math> | |||

==Numeric Example== | ==Numeric Example== | ||

As an example, we can use line search method to solve the following unconstrained optimization problem: | |||

<math>\min_{x_1,x_2}\ f(x)=x_1-x_2+2x_1x_2+2x_1^2+x_2^2 </math>. | |||

'''First iteration:''' | |||

We have <math>\nabla f(x)=\begin{bmatrix} 1+2x_2+4x_1\\ -1+2x_1+2x_2 \end{bmatrix}</math>. | |||

Starting from <math>x_0=\begin{bmatrix} 0\\0 \end{bmatrix}</math> gives <math>-\nabla f(x_0)=\begin{bmatrix}-1\\1\end{bmatrix}</math>. | |||

We then have <math>f(x_0-\alpha\nabla f(x_0))=f(-\alpha,\alpha)=\alpha^2-2\alpha</math>. | |||

Taking partial derivative of the above equation with respect to <math>\alpha</math> and set it to zero to find the minimizer | |||

<math>\alpha_0=1 </math>. | |||

Therefore, <math>x_1=\begin{bmatrix}0\\0\end{bmatrix} +\alpha_0 \begin{bmatrix}-1\\1\end{bmatrix} =\begin{bmatrix}-1\\1\end{bmatrix} </math>. | |||

'''Second iteration:''' | |||

Given <math>x_1=\begin{bmatrix}-1\\1\end{bmatrix} </math>, we have <math>-\nabla f(x_1)=\begin{bmatrix}1\\1\end{bmatrix}</math>. | |||

Then from <math>\min_\alpha f(x_1-\alpha\nabla f(x_1))=5\alpha^2-2\alpha-1</math>, | |||

finding the minimizer <math>\alpha_1=0.2</math>. | |||

Hence, <math>x_2=\begin{bmatrix}-1\\1\end{bmatrix} +\alpha_1\begin{bmatrix}1\\1\end{bmatrix}=\begin{bmatrix}-0.8\\1.2\end{bmatrix} </math>. | |||

'''Third iteration:''' | |||

Given <math>x_2=\begin{bmatrix}-0.8\\1.2\end{bmatrix}</math>, we have have <math>-\nabla f(x_2)=\begin{bmatrix}-0.2\\0.2\end{bmatrix}</math>. | |||

Then from <math>\min_\alpha f(x_2-\alpha\nabla f(x_2))=0.04\alpha^2-0.08\alpha-1.2</math>, | |||

finding the minimizer <math>\alpha_2=1</math>. | |||

Hence, <math>x_3=\begin{bmatrix}-0.8\\1.2\end{bmatrix} +\alpha_2\begin{bmatrix}-0.2\\0.2\end{bmatrix}=\begin{bmatrix}-1\\1.4\end{bmatrix} </math>. | |||

'''Fourth iteration:''' | |||

Given <math>x_3=\begin{bmatrix}-1\\1.4\end{bmatrix}</math>, we have have <math>-\nabla f(x_3)=\begin{bmatrix}0.2\\0.2\end{bmatrix}</math>. | |||

Then from <math>\min_\alpha f(x_3-\alpha\nabla f(x_3))=0.2\alpha^2-0.08\alpha-1.24</math>, | |||

finding the minimizer <math>\alpha_3=0.2</math>. | |||

Hence, <math>x_4=\begin{bmatrix}-1\\1.4\end{bmatrix} +\alpha_3\begin{bmatrix}0.2\\0.2\end{bmatrix}=\begin{bmatrix}-0.96\\1.44\end{bmatrix} </math>. | |||

'''Fifth iteration:''' | |||

Given <math>x_4=\begin{bmatrix}-0.96\\1.44\end{bmatrix}</math>, we have have <math>-\nabla f(x_4)=\begin{bmatrix}-0.04\\0.04\end{bmatrix}</math>. | |||

Then from <math>\min_\alpha f(x_4-\alpha\nabla f(x_4))=0.0016 \alpha^2-0.0032 \alpha-1.248</math>, | |||

finding the minimizer <math>\alpha_4=1</math>. | |||

Hence, <math>x_5=\begin{bmatrix}-0.96\\1.44\end{bmatrix} +\alpha_4\begin{bmatrix}-0.04\\0.04\end{bmatrix}=\begin{bmatrix}-1\\1.48\end{bmatrix} </math>. | |||

'''Termination:''' | |||

At this point, we find <math>\nabla f(x_5)=\begin{bmatrix}-0.04\\-0.04\end{bmatrix}</math>. | |||

Check to see if the convergence is sufficient by evaluating <math>||\nabla f(x_5)||</math>: | |||

<math>|| \nabla f(x_5)||=\sqrt{(-0.04)^2+(-0.04)^2}=0.0565 </math>. | |||

Since <math>0.0565</math> is relatively small and is close enough to zero, the line search is complete. | |||

The derived optimal solution is <math>x^*=\begin{bmatrix}-1\\1.48\end{bmatrix}</math>, and the optimal objective value is found to be <math>-1.25</math>. | |||

==Applications== | ==Applications== | ||

A common application of line search method is in minimizing the loss function in training machine learning models. For example, when training a classification model with logistic regression, gradient descent algorithm (GD), which is a classic method of line search, can be used to minimize the logistic loss and compute the coefficients by iteration till the loss function reaches converges to a local minimum. <ref name="GD in LR">A. A. Tokuç, "[https://www.baeldung.com/cs/gradient-descent-logistic-regression Gradient Descent Equation in Logistic Regression]."</ref> An alternative of gradient descent in machine learning domain is [https://optimization.cbe.cornell.edu/index.php?title=Stochastic_gradient_descent stochastic gradient descent] (SGD). The difference is on computation expense that instead of using all training set to compute the descent, SGD simply sample one data point to compute the descent. The application of SGD reduces the computation costs greatly in large dataset compared to gradient descent. | |||

Line search methods are also used in solving nonlinear least squares problems, <ref>M. Al-Baali and R. Fletcher, "[https://link.springer.com/content/pdf/10.1007/BF00940566.pdf An Efficient Line Search for Nonlinear Least Squares]," ''Journal of Optimization Theory and Applications'', vol. 48, no. 3, pp. 359-377, 1986. </ref> <ref>P. Lindström and P. Å. Wedin, "[https://link.springer.com/article/10.1007/BF02591997 A New Linesearch Algorithm for Nonlinear Least Squares Problems]," ''Mathematical Programming'', vol. 29, no. 3, pp. 268-296, 1984.</ref> in adaptive filtering in process control, <ref> C. E. Davila, "[https://ieeexplore.ieee.org/abstract/document/224257?casa_token=e4KLi0ERhCoAAAAA:D12Yp4HaOmO1WcDF46rCoDKLGOdwyQJ531KJ29Wla3NFPjYe2HyQB75d1sjhK7-Dz0Kg7of_ Line Search Algorithms for Adaptive Filtering]," ''IEEE Transactions on Signal Processing'', vol. 41, no. 7, pp. 2490-2494, 1993.</ref> in relaxation method with which to solve generalized Nash equilibrium problems, <ref> A. von Heusinger and C. Kanzow, "[https://link.springer.com/article/10.1007/s10957-009-9553-0 Relaxation Methods for Generalized Nash Equilibrium Problems with Inexact Line Search]," ''Journal of Optimization Theory and Applications'', vol. 143, pp. 159–183, 2009. </ref> in production planning involving non-linear fitness functions, <ref>P. Vasant and N. Barsoum, "[https://www.sciencedirect.com/science/article/pii/S0952197609000669 Hybrid Genetic Algorithms and Line Search Method for Industrial Production Planning with Non-linear Fitness Function]," ''Engineering Applications of Artificial Intelligence'', vol. 22, no. 4-5, pp. 767-777, 2009.</ref> and more. | |||

==Conclusion== | |||

Line Search is a useful strategy to solve unconstrained optimization problems. The success of the line search algorithm depends on careful consideration of the choice of both the direction <math>p_k</math> and the step size <math>\alpha_k</math>.This page has introduced the basic algorithm firstly, and then includes the exact search and inexact search. The exact search contains the steepest descent, and the inexact search covers the Wolfe and Goldstein conditions, backtracking, and Zoutendijk's theorem. More approaches to solve unconstrained optimization problems can be found in [https://optimization.cbe.cornell.edu/index.php?title=Trust-region_methods trust-region methods], [https://optimization.cbe.cornell.edu/index.php?title=Conjugate_gradient_methods conjugate gradient methods], Newton's method and [https://optimization.cbe.cornell.edu/index.php?title=Quasi-Newton_methods Quasi-Newton method]. | |||

==Reference== | ==Reference== | ||

<references /> | <references /> | ||

Latest revision as of 05:35, 16 December 2021

Authors: Lihe Cao, Zhengyi Sui, Jiaqi Zhang, Yuqing Yan, and Yuhui Gu (6800 Fall 2021).

Introduction

Line search method is an iterative approach to find a local minimum of a multidimensional nonlinear function using the function's gradients. It computes a search direction and then finds an acceptable step length that satisfies certain standard conditions. [1] Line search method can be categorized into exact and inexact methods. The exact method, as in the name, aims to find the exact minimizer at each iteration; while the inexact method computes step lengths to satisfy conditions including Wolfe and Goldstein conditions. Line search and trust-region methods are two fundamental strategies for locating the new iterate given the current point. With the ability to solve the unconstrained optimization problem, line search is widely used in many cases including machine learning, game theory and other fields.

Generic Line Search Method

Basic Algorithm

- Pick the starting point

- Repeat the following steps until coverges to a local minimum :

- Choose a descent direction Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p_k} starting at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_k} , defined as: Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f_{k}^\top p_{k}<0} for Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f_k \not =0}

- Find a step length Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k>0} so that Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k+\alpha_kp_k)<f_k}

- Set Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{k+1}=x_k+\alpha_k p_k}

Search Direction for Line Search

The direction of the line search should be chosen to make Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} decrease moving from point Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_k} to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{k+1}} , and it is usually related to the gradient Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f_k} . The most obvious direction is the Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle - \nabla f_k} because it is the one to make Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} decreases most rapidly. This claim can be verified by Taylor's theorem:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k+\alpha)=f(x_k)+\alpha p^\top\nabla f_k+\frac{1}{2}\alpha^2p^\top f(x_k+tp)p} , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle t\in (0, \alpha) } .

The rate of change in Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} along the direction Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p} at Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_k} is the coefficient of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} . Therefore, the unit direction Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p} of most rapid decrease is the solution to

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min\ p^\top\nabla f_k}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \text{s.t.}\ \ ||p||=1} .

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p=\frac{-\nabla f_k}{||\nabla f_k||}} is the solution and this direction is orthogonal to the contours of the function. In the following sections, this will be used as the default direction of the line search.

However, the steepest descent direction is not the most efficient, as the steepest descent method does not pass the Rosenbrock test (see Figure 1). [2] Carefully designed descent directions deviating from the steepest direction can be used in practice to produce faster convergence. [3]

Step Length

The step length is a non-negative value such that Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k+\alpha_k p_k)<f(x_k)} . When choosing the step length Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} , there is a trade off between giving a substantial reduction of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} and not spending too much time finding the solution. If Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} is too large, then the step will overshoot, while if the step length is too small, it is time consuming to find the convergent point. An exact line search and inexact line search are needed to find the value of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} and more detail about these approaches will be introduced in the next section.

Convergence

For a line search algorithm to be reliable, it should be globally convergent, that is the gradient norms, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ||\nabla f(x_{k})||} , should converge to zero with each iteration, i.e., Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lim_{k\to\infty} ||\nabla f(x_{k})|| = 0} .

It can be shown from Zoutendijk's theorem [1] that if the line search algorithm satisfies (weak) Wolfe's conditions (similar results also hold for strong Wolfe and Goldstein conditions) and has a search direction that makes an angle with the steepest descent direction that is bounded away from 90°, the algorithm is globally convergent.

Zoutendijk's theorem states that, given an iteration where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p_k} is the descent direction and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} is the step length that satisfies (weak) Wolfe conditions, if the objective Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f} is bounded below on Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathbb{R}^{n}} and is continuously differentiable in an open set Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathcal{N}} containing the level set Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathcal{L}:=\{x\ |\ f(x)\leq f(x_0)\}} , where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_0} is the starting point of the iteration, and the gradient Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f} is Lipschitz continuous on Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathcal{N}} , then

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \sum_{k=0}^{\infty}\cos^{2}\theta_{k}||\nabla f_{k}||^2 < \infty} ,

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \theta_{k}} is the angle between Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p_k} and the steepest descent direction Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\nabla f(x_{k})} .

The Zoutendijk condition above implies that

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lim_{k\to\infty}\cos^{2}\theta_{k}||\nabla f_{k}||^2=0} ,

by the n-th term divergence test. Hence, if the algorithm chooses a search direction that is bounded away from 90° relative to the gradient, i.e., given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon>0} ,

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \cos\theta_{k}\geq\epsilon>0,\ \forall k} ,

it follows that

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lim_{k\to\infty}||\nabla f_{k}||=0} .

However, the Zoutendijk condition doesn't guarantee convergence to a local minimum but only stationary points. Hence, additional conditions on the search direction is necessary, such as finding a direction of negative curvature whenever possible, to avoid converging to a nonminimizing stationary point.

Exact Search

Steepest Descent Method

Given the intuition that the negative gradient Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle - \nabla f_k} can be an effective search direction, steepest descent follows the idea and establishes a systematic method for minimizing the objective function. Setting Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle - \nabla f_k} as the direction, steepest descent computes the step-length Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} by minimizing a single-variable objective function. More specifically, the steps of Steepest Descent Method are as follows:

Steepest Descent Algorithm

Set a starting point

Set a convergence criterium Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \epsilon>0}

Set Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k = 0}

Set the maximum iteration Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle N}

While Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k \le N} :

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x_k) = \left.\frac{\partial f(x)}{\partial x}\right\vert_{x=x_k}}

- If Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x_k)\le \epsilon} :

- Break

- End if

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k=\underset{\alpha}{\arg\min} f(x_k-\alpha \nabla f(x_k))}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{k+1}=x_k-\alpha_k \nabla f(x_k)}

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k = k + 1}

End while

Return Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_{k}} , Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_{k})}

One advantage of the steepest descent method is the convergency. For a steepest descent method, it converges to a local minimum from any starting point. [4]

Theorem: Global Convergence of Steepest Descent

Let the gradient of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f \in C^1} be uniformly Lipschitz continuous on Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \mathbb{R}^{n}} . Then, for the iterates with steepest-descent search directions, one of the following situations occurs:

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x_k) = 0} for some finite Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle k}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lim_{k \to \infty} f(x_k) = -\infty}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \lim_{k \to \infty} \nabla f(x_k) = 0}

However, steepest descent has disadvantages in that the convergence is always slow and numerically may be not convergent (see Figure 1.)

Steepest descent method is a special case of gradient descent in that the step-length is analytically defined. However, step-lengths cannot always be computed analytically; in this case, inexact methods can be used to optimize Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} at each iteration.

Inexact Search

While minimizing an objective function using numeric methods, in each iteration, the updated objective is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi(\alpha) = f(x_k+\alpha p_k)} , which is a function of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} after fixing the search direction. The goal is to minimize this objective with respect to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} . However, if one wants to solve for the exact minimum in each iteration, it could be computationally expensive and the algorithm will be time-consuming. Therefore, in practice, it is easier solve the subproblem

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \underset{\alpha}{min} \quad \phi(\alpha) = f(x_k + \alpha p_k)}

numerically and find a reasonable step length Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} instead, which will decrease the objective function. That is, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} satisfies Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k + \alpha p_k) \leq f(x_k)} . However, convergence to the function's minimum cannot be guaranteed, so Wolfe or Goldstein conditions need to be applied when searching for an acceptable step length. [1]

Wolfe Conditions

This condition is proposed by Phillip Wolfe in 1969. [5] It provide an efficient way of choosing a step length that decreases the objective function sufficiently. It consists of two conditions: Armijo (sufficient decrease) condition and the curvature condition.

Armijo (Sufficient Decrease) Condition

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k + \alpha p_k) \leq f(x_k) + c_1 \alpha_{k} p^\top_k \nabla{f(x_k)}} ,

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c_1\in(0,1)} and is often chosen to be of a small order of magnitude around Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 10^{-3}} . This condition ensures the computed step length can sufficiently decrease the objective function Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k)} . Only using this condition, however, it cannot be guaranteed that Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_k} will converge in a reasonable number of iterations, since Armijo condition is always satisfied with step length being small enough. Therefore, the second condition below needs to be paired with the sufficient decrease condition to keep Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} from being too short.

Curvature Condition

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla{f(x_k + \alpha p_k)}^\top p_k \geq c_2 \nabla{f(x_k)}^\top p_k} ,

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c_2\in(c_1,1)} is much greater than Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c_1} and is typically on the order of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 0.1} . This condition ensures a sufficient increase of the gradient.

This left hand side of the curvature condition is simply the derivative of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi(\alpha)} , thus ensuring Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} to be in the vicinity of a stationary point of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi(\alpha)} .

Strong Wolfe Curvature Condition

The (weak) Wolfe conditions can result in an Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} value that is not close to the minimizer of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi(\alpha)} . The (weak) Wolfe conditions can be modified by using the following condition called Strong Wolfe condition, which writes the curvature condition in absolute values:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle |p_k \nabla{f(x_k + \alpha p_k)| \leq c_2 |p^\top_k f(x_k)}|} .

The strong Wolfe curvature condition restricts the slope of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi(\alpha)} from getting too positive, hence excluding points far away from the stationary point of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi} .

Goldstein Conditions

Another condition to find an appropriate step length is called Goldstein conditions:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_k) + (1-c) \alpha_k \nabla{f^\top_k} p_k \leq f(x_k + \alpha p_k) \leq f(x_k) + c \alpha_k \nabla{f^\top_k} p_k}

where Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 0 \leq c \leq 1/2} . The Goldstein condition is quite similar with the Wolfe condition in that, its second inequality ensures that the step length Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} will decrease the objective function sufficiently and its first inequality keep Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} from being too short. In comparison with Wolfe condition, one disadvantage of Goldstein condition is that the first inequality of the condition might exclude all minimizers of Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \phi} function. However, usually it is not a fatal problem as long as the objective decreases in the direction of convergence. As a short conclusion, the Goldstein and Wolfe conditions have quite similar convergence theories. Compared to the Wolfe conditions, the Goldstein conditions are often used in Newton-type methods but are not well-suited for quasi-Newton methods that maintain a positive definite Hessian approximation.

Backtracking Line Search

The backtracking method is often used to find the appropriate step length and terminate line search based. The backtracking method starts with a relatively large initial step length (e.g., 1 for Newton method), then iteratively shrinking it by a contraction factor until the Armijo (sufficient decrease) condition is satisfied. The advantage of this approach is that the curvature condition needs not be considered, and the step length found at each line search iterate is ensured to be short enough to satisfy sufficient decrease but large enough to still allow the algorithm to make reasonable progress towards convergence.

The backtracking algorithm involves control parameters and Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle c\in(0,1)} , and it is roughly as follows:

- Choose Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_0>0, \rho\in(0,1), c\in(0,1)}

- Set Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha\leftarrow\alpha_0}

- While Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_{k}+\alpha p_{k}) > f(x_{k})+c\alpha\nabla f_{k}^{\top}p_{k}}

- Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha\leftarrow\rho\alpha}

- End while

- Return Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k=\alpha}

Numeric Example

As an example, we can use line search method to solve the following unconstrained optimization problem:

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min_{x_1,x_2}\ f(x)=x_1-x_2+2x_1x_2+2x_1^2+x_2^2 } .

First iteration:

We have Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x)=\begin{bmatrix} 1+2x_2+4x_1\\ -1+2x_1+2x_2 \end{bmatrix}} .

Starting from Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_0=\begin{bmatrix} 0\\0 \end{bmatrix}} gives Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\nabla f(x_0)=\begin{bmatrix}-1\\1\end{bmatrix}} .

We then have Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle f(x_0-\alpha\nabla f(x_0))=f(-\alpha,\alpha)=\alpha^2-2\alpha} .

Taking partial derivative of the above equation with respect to Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha} and set it to zero to find the minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_0=1 } .

Therefore, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_1=\begin{bmatrix}0\\0\end{bmatrix} +\alpha_0 \begin{bmatrix}-1\\1\end{bmatrix} =\begin{bmatrix}-1\\1\end{bmatrix} } .

Second iteration:

Given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_1=\begin{bmatrix}-1\\1\end{bmatrix} } , we have Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\nabla f(x_1)=\begin{bmatrix}1\\1\end{bmatrix}} .

Then from Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min_\alpha f(x_1-\alpha\nabla f(x_1))=5\alpha^2-2\alpha-1} , finding the minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_1=0.2} .

Hence, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_2=\begin{bmatrix}-1\\1\end{bmatrix} +\alpha_1\begin{bmatrix}1\\1\end{bmatrix}=\begin{bmatrix}-0.8\\1.2\end{bmatrix} } .

Third iteration:

Given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_2=\begin{bmatrix}-0.8\\1.2\end{bmatrix}} , we have have Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\nabla f(x_2)=\begin{bmatrix}-0.2\\0.2\end{bmatrix}} .

Then from Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min_\alpha f(x_2-\alpha\nabla f(x_2))=0.04\alpha^2-0.08\alpha-1.2} , finding the minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_2=1} .

Hence, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_3=\begin{bmatrix}-0.8\\1.2\end{bmatrix} +\alpha_2\begin{bmatrix}-0.2\\0.2\end{bmatrix}=\begin{bmatrix}-1\\1.4\end{bmatrix} } .

Fourth iteration:

Given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_3=\begin{bmatrix}-1\\1.4\end{bmatrix}} , we have have Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\nabla f(x_3)=\begin{bmatrix}0.2\\0.2\end{bmatrix}} .

Then from Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min_\alpha f(x_3-\alpha\nabla f(x_3))=0.2\alpha^2-0.08\alpha-1.24} , finding the minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_3=0.2} .

Hence, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_4=\begin{bmatrix}-1\\1.4\end{bmatrix} +\alpha_3\begin{bmatrix}0.2\\0.2\end{bmatrix}=\begin{bmatrix}-0.96\\1.44\end{bmatrix} } .

Fifth iteration:

Given Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_4=\begin{bmatrix}-0.96\\1.44\end{bmatrix}} , we have have Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -\nabla f(x_4)=\begin{bmatrix}-0.04\\0.04\end{bmatrix}} .

Then from Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \min_\alpha f(x_4-\alpha\nabla f(x_4))=0.0016 \alpha^2-0.0032 \alpha-1.248} , finding the minimizer Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_4=1} .

Hence, Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x_5=\begin{bmatrix}-0.96\\1.44\end{bmatrix} +\alpha_4\begin{bmatrix}-0.04\\0.04\end{bmatrix}=\begin{bmatrix}-1\\1.48\end{bmatrix} } .

Termination:

At this point, we find Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \nabla f(x_5)=\begin{bmatrix}-0.04\\-0.04\end{bmatrix}} .

Check to see if the convergence is sufficient by evaluating Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle ||\nabla f(x_5)||} :

Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle || \nabla f(x_5)||=\sqrt{(-0.04)^2+(-0.04)^2}=0.0565 } .

Since Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle 0.0565} is relatively small and is close enough to zero, the line search is complete.

The derived optimal solution is Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle x^*=\begin{bmatrix}-1\\1.48\end{bmatrix}} , and the optimal objective value is found to be Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle -1.25} .

Applications

A common application of line search method is in minimizing the loss function in training machine learning models. For example, when training a classification model with logistic regression, gradient descent algorithm (GD), which is a classic method of line search, can be used to minimize the logistic loss and compute the coefficients by iteration till the loss function reaches converges to a local minimum. [6] An alternative of gradient descent in machine learning domain is stochastic gradient descent (SGD). The difference is on computation expense that instead of using all training set to compute the descent, SGD simply sample one data point to compute the descent. The application of SGD reduces the computation costs greatly in large dataset compared to gradient descent.

Line search methods are also used in solving nonlinear least squares problems, [7] [8] in adaptive filtering in process control, [9] in relaxation method with which to solve generalized Nash equilibrium problems, [10] in production planning involving non-linear fitness functions, [11] and more.

Conclusion

Line Search is a useful strategy to solve unconstrained optimization problems. The success of the line search algorithm depends on careful consideration of the choice of both the direction Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle p_k} and the step size Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.") from server "https://wikimedia.org/api/rest_v1/":): {\displaystyle \alpha_k} .This page has introduced the basic algorithm firstly, and then includes the exact search and inexact search. The exact search contains the steepest descent, and the inexact search covers the Wolfe and Goldstein conditions, backtracking, and Zoutendijk's theorem. More approaches to solve unconstrained optimization problems can be found in trust-region methods, conjugate gradient methods, Newton's method and Quasi-Newton method.

Reference

- ↑ 1.0 1.1 1.2 1.3 1.4 1.5 J. Nocedal and S. Wright, Numerical Optimization, Springer Science, 1999, p. 30-44.

- ↑ "Rosenbrock Function," Cornell University.

- ↑ R. Fletcher and M. Powell, "A Rapidly Convergent Descent Method for Minimization," The Computer Journal, vol. 6, no. 2, pp. 163–168, 1963.

- ↑ 4.0 4.1 R. Hauser, "Line Search Methods for Unconstrained Optimization," Oxford University Computing Laboratory, 2007.

- ↑ P. Wolfe, "Convergence Conditions for Ascent Methods," SIAM Review, vol. 11, no. 2, pp. 226–235, 1969.

- ↑ A. A. Tokuç, "Gradient Descent Equation in Logistic Regression."

- ↑ M. Al-Baali and R. Fletcher, "An Efficient Line Search for Nonlinear Least Squares," Journal of Optimization Theory and Applications, vol. 48, no. 3, pp. 359-377, 1986.

- ↑ P. Lindström and P. Å. Wedin, "A New Linesearch Algorithm for Nonlinear Least Squares Problems," Mathematical Programming, vol. 29, no. 3, pp. 268-296, 1984.

- ↑ C. E. Davila, "Line Search Algorithms for Adaptive Filtering," IEEE Transactions on Signal Processing, vol. 41, no. 7, pp. 2490-2494, 1993.

- ↑ A. von Heusinger and C. Kanzow, "Relaxation Methods for Generalized Nash Equilibrium Problems with Inexact Line Search," Journal of Optimization Theory and Applications, vol. 143, pp. 159–183, 2009.

- ↑ P. Vasant and N. Barsoum, "Hybrid Genetic Algorithms and Line Search Method for Industrial Production Planning with Non-linear Fitness Function," Engineering Applications of Artificial Intelligence, vol. 22, no. 4-5, pp. 767-777, 2009.